Data Analysis Courses - Page 56

Serverless Data Processing with Dataflow: Operations

In this final installment of the Dataflow course series, we will introduce the components of the Dataflow operational model. We will examine tools and techniques for troubleshooting and optimizing pipeline performance, and then review testing, deployment, and reliability best practices for Dataflow pipelines. We will conclude with a review of Templates, which make it easy to scale Dataflow pipelines to organizations with hundreds of users. These lessons will help ensure that your data platform is stable and resilient to unanticipated circumstances.

Modeling Risk and Realities

Useful quantitative models help you make informed decisions both when the factors affecting your decision are clear and when some important factors are not clear at all. In this course, you can learn how to create quantitative models that reflect complex realities, and how to incorporate elements of risk and uncertainty into those models. You’ll learn methods for creating predictive models that identify optimal choices, and how those choices change in response to changes in the model’s assumptions. You’ll also learn the basics of measuring and managing risk. By the end of this course, you’ll be able to build your own models with your own data, so that you can begin making data-informed decisions. You’ll also be prepared for the next course in the Specialization.

Assisting Public Sector Decision Makers With Policy Analysis

Develop data analysis skills that support public sector decision-makers by performing policy analysis through all phases of the policymaking process. You will learn how to apply data analysis techniques to the core public sector principles of efficiency, effectiveness, and equity. Through authentic case studies and data sets, you will develop analytical skills commonly used to analyze and assess policies and programs, including policy options analysis, microsimulation modeling, and research designs for program and policy evaluation. You will also learn intermediate technical skills, such as Chi-squared tests and contingency tables, comparing samples through t-tests and ANOVA, applying Tukey's honest significant difference to correct for multiple tests, understanding p-values, and visualizing simulations of statistical functions to help answer questions policymakers ask such as “What should we do?” and “Did it work?” In addition, you will practice statistical testing and create ggplot visuals for two real-world datasets using the R programming language.

All coursework is completed in RStudio in Coursera without the need to install additional software.

This is the third of four courses within the Data Analytics in the Public Sector with R Specialization. The series is ideal for current or early-career professionals in the public sector who want to gain skills in analyzing public data effectively. It is also ideal for current data analytics professionals or students looking to enter the public sector.

Design Strategies for Maximizing Total Data Quality

In this third course in the Total Data Quality Specialization, learners will:

1. Learn about design tools and techniques for maximizing total data quality (TDQ) across all stages of the TDQ framework during data collection or data gathering.

2. Identify aspects of the data generating or data gathering process that impact TDQ and be able to assess whether and how such aspects can be measured.

3. Understand TDQ maximization strategies that can be applied when gathering designed and found/organic data.

4. Develop solutions to hypothetical design problems arising during the process of data collection or data gathering and processing.

This specialization as a whole aims to explore the Total Data Quality framework in depth and provide learners with more information about the detailed evaluation of total data quality that needs to happen prior to data analysis. The goal is for learners to incorporate evaluations of data quality into their process as a critical component for all projects. We sincerely hope to disseminate knowledge about total data quality to all learners, such as data scientists and quantitative analysts, who have not had sufficient training in the initial steps of the data science process that focus on data collection and evaluation of data quality. We feel that extensive knowledge of data science techniques and statistical analysis procedures will not help a quantitative research study if the data collected/gathered are not of sufficiently high quality.

This specialization will focus on the essential first steps in any type of scientific investigation using data: either generating or gathering data, understanding where the data come from, evaluating the quality of the data, and taking steps to maximize the quality of the data prior to performing any kind of statistical analysis or applying data science techniques to answer research questions. Given this focus, there will be little material on the analysis of data, which is covered in myriad existing Coursera specializations. The primary focus of this specialization will be on understanding and maximizing data quality prior to analysis.

Data Science in Real Life

Have you ever had the perfect data science experience? The data pull went perfectly. There were no merging errors or missing data. Hypotheses were clearly defined prior to analyses. Randomization was performed for the treatment of interest. The analytic plan was outlined prior to analysis and followed exactly. The conclusions were clear and actionable decisions were obvious. Has that ever happened to you? Of course not. Data analysis in real life is messy. How does one manage a team facing real data analyses? In this one-week course, we contrast the ideal with what happens in real life. By contrasting the ideal with reality, you will learn key concepts that will help you manage real-life analyses.

This is a focused course designed to rapidly get you up to speed on doing data science in real life. Our goal was to make this as convenient as possible for you without sacrificing any essential content. We've left the technical information aside so that you can focus on managing your team and moving it forward.

After completing this course you will know how to:

1. Describe the “perfect” data science experience

2. Identify strengths and weaknesses in experimental designs

3. Describe possible pitfalls when pulling / assembling data and learn solutions for managing data pulls.

4. Challenge statistical modeling assumptions and drive feedback to data analysts

5. Describe common pitfalls in communicating data analyses

6. Get a glimpse into a day in the life of a data analysis manager.

The course will be taught at a conceptual level for active managers of data scientists and statisticians. Some key concepts being discussed include:

1. Experimental design, randomization, A/B testing

2. Causal inference, counterfactuals

3. Strategies for managing data quality.

4. Bias and confounding

5. Contrasting machine learning versus classical statistical inference

Course promo:

https://www.youtube.com/watch?v=9BIYmw5wnBI

Course cover image by Jonathan Gross. Creative Commons BY-ND https://flic.kr/p/q1vudb

Network Analysis for Marketing Analytics

Network analysis is a long-standing methodology used to understand the relationships between words and actors in the broader networks in which they exist. This course covers network analysis as it pertains to marketing data, specifically text datasets and social networks. Learners walk through a conceptual overview of network analysis and dive into real-world datasets through instructor-led tutorials in Python. The course concludes with a major project.
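To make the idea of a text network concrete, here is a minimal Python sketch, not taken from the course, assuming that words are nodes and that two words are linked whenever they appear in the same document. The function names `cooccurrence_network` and `degree` and the three toy "reviews" are hypothetical, invented only for illustration.

```python
from collections import defaultdict
from itertools import combinations


def cooccurrence_network(documents):
    """Return {(word_a, word_b): weight} for an undirected co-occurrence network.

    Two words are linked when they appear in the same document; the weight
    counts how many documents contain both.
    """
    edges = defaultdict(int)
    for doc in documents:
        words = sorted(set(doc.lower().split()))  # unique words, alphabetical order
        for a, b in combinations(words, 2):        # every unordered pair, a < b
            edges[(a, b)] += 1
    return dict(edges)


def degree(edges):
    """Unnormalized degree: number of distinct neighbors of each word."""
    deg = defaultdict(int)
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return dict(deg)


# Hypothetical toy "customer reviews", invented for illustration.
reviews = [
    "great coffee fast service",
    "slow service cold coffee",
    "great staff",
]
edges = cooccurrence_network(reviews)
print(edges[("coffee", "service")])  # → 2 (both words appear in two reviews)
print(degree(edges)["coffee"])       # → 5 (fast, great, service, cold, slow)
```

Degree is only the simplest network measure; libraries such as NetworkX provide it (for example `degree_centrality`, which normalizes the count) alongside many others.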

This course can be taken for academic credit as part of CU Boulder’s Master of Science in Data Science (MS-DS) degree offered on the Coursera platform. The MS-DS is an interdisciplinary degree that brings together faculty from CU Boulder’s departments of Applied Mathematics, Computer Science, Information Science, and others. With performance-based admissions and no application process, the MS-DS is ideal for individuals with a broad range of undergraduate education and/or professional experience in computer science, information science, mathematics, and statistics. Learn more about the MS-DS program at https://www.coursera.org/degrees/master-of-science-data-science-boulder.

Design and Build a Data Warehouse for Business Intelligence Implementation

The capstone course, Design and Build a Data Warehouse for Business Intelligence Implementation, features a real-world case study that integrates your learning across all courses in the specialization. In response to business requirements presented in a case study, you’ll design and build a small data warehouse, create data integration workflows to refresh the warehouse, write SQL statements to support analytical and summary query requirements, and use the MicroStrategy business intelligence platform to create dashboards and visualizations.

In the first part of the capstone course, you’ll be introduced to a medium-sized firm, learning about their data warehouse and business intelligence requirements and existing data sources. You’ll first architect a warehouse schema and dimensional model for a small data warehouse. You’ll then create data integration workflows using Pentaho Data Integration to refresh your data warehouse. Next, you’ll write SQL statements for analytical query requirements and create materialized views to support summary data management. For data integration workflows and analytical queries, you can use either Oracle or PostgreSQL. Finally, you will use MicroStrategy OLAP capabilities to gain insights into your data warehouse. When you complete the project, you’ll have built a small data warehouse along with a schema design, data integration workflows, analytical queries, materialized views, dashboards, and visualizations that you’ll be proud to show to current and prospective employers.

Analysis of Variance with ANOVA in Google Sheets

In every domain, sound decision making depends on understanding whether differences exist between two or more groups of things or outcomes. In this course, you will test for such differences with the one-way analysis of variance (ANOVA) technique. You will also learn how to design the test and apply tactics so that you can confidently report whether differences between groups are statistically significant. For this course, we will work side-by-side in the free-to-use software Google Sheets.

By the end of this course, you will be able to apply ANOVA and post hoc tests using any spreadsheet software and confidently report the statistical significance of differences among groups.
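A one-way ANOVA compares the variation between group means with the variation of observations within their groups. The course itself works in Google Sheets; purely as an illustration, here is a minimal Python sketch of the F statistic, assuming each group is a plain list of numbers. The function name `one_way_anova_f` and the sample groups are hypothetical.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` is a list of lists of numeric observations, one list per group.
    """
    k = len(groups)                                  # number of groups
    n = sum(len(g) for g in groups)                  # total number of observations
    grand_mean = mean(x for g in groups for x in g)

    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


print(one_way_anova_f([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # → (27.0, 2, 6)
```

The p-value comes from the right tail of an F distribution with (df_between, df_within) degrees of freedom; in Google Sheets that is `F.DIST.RT(F, df_between, df_within)`, and in Python `scipy.stats.f_oneway` returns both the statistic and the p-value.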

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Practical Machine Learning

Prediction and machine learning are among the most common tasks performed by data scientists and data analysts. This course will cover the basic components of building and applying prediction functions, with an emphasis on practical applications. The course will provide a basic grounding in concepts such as training and test sets, overfitting, and error rates. The course will also introduce a range of model-based and algorithmic machine learning methods, including regression, classification trees, Naive Bayes, and random forests. The course will cover the complete process of building prediction functions, including data collection, feature creation, algorithms, and evaluation.
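The interplay of training sets, test sets, overfitting, and error rates can be seen in a few lines of Python. This is a minimal sketch, not course material: the data are synthetic and hypothetical, and a 1-nearest-neighbor classifier is used as a stand-in because it memorizes its training data, not because the course covers it. The helper names `train_test_split`, `predict_1nn`, and `error_rate` are illustrative.

```python
import random


def train_test_split(rows, test_fraction=0.3, seed=0):
    """Shuffle a copy of `rows` and split it into (train, test) lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def predict_1nn(train, x):
    """1-nearest-neighbor prediction: copy the label of the closest training point."""
    _, nearest_y = min(train, key=lambda row: abs(row[0] - x))
    return nearest_y


def error_rate(train, data):
    """Fraction of (x, y) rows in `data` that the 1-NN model trained on `train` misclassifies."""
    wrong = sum(predict_1nn(train, x) != y for x, y in data)
    return wrong / len(data)


# Hypothetical synthetic data: the true label is 1 when x > 0.5,
# but about 20% of labels are flipped to simulate noise.
rng = random.Random(42)
rows = []
for _ in range(200):
    x = rng.random()
    y = int(x > 0.5)
    if rng.random() < 0.2:
        y = 1 - y
    rows.append((x, y))

train, test = train_test_split(rows)
train_error = error_rate(train, train)  # 0.0: each training point is its own nearest neighbor
test_error = error_rate(train, test)    # typically well above 0 because the noise was memorized
print(f"training error: {train_error:.2f}, test error: {test_error:.2f}")
```

The gap between the two error rates is overfitting in miniature: the model fits the noise in the training set, so only the held-out test set gives an honest estimate of its error.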

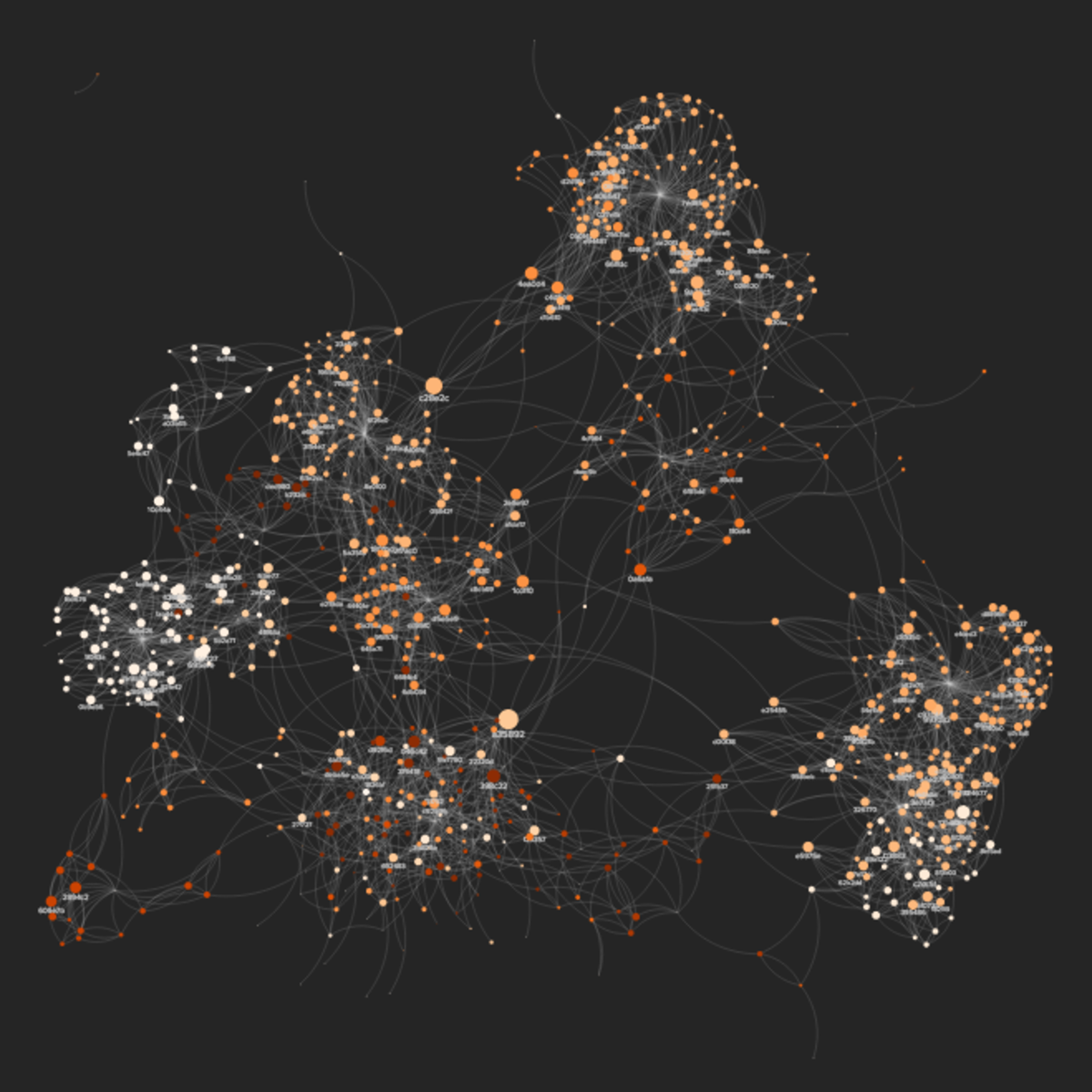

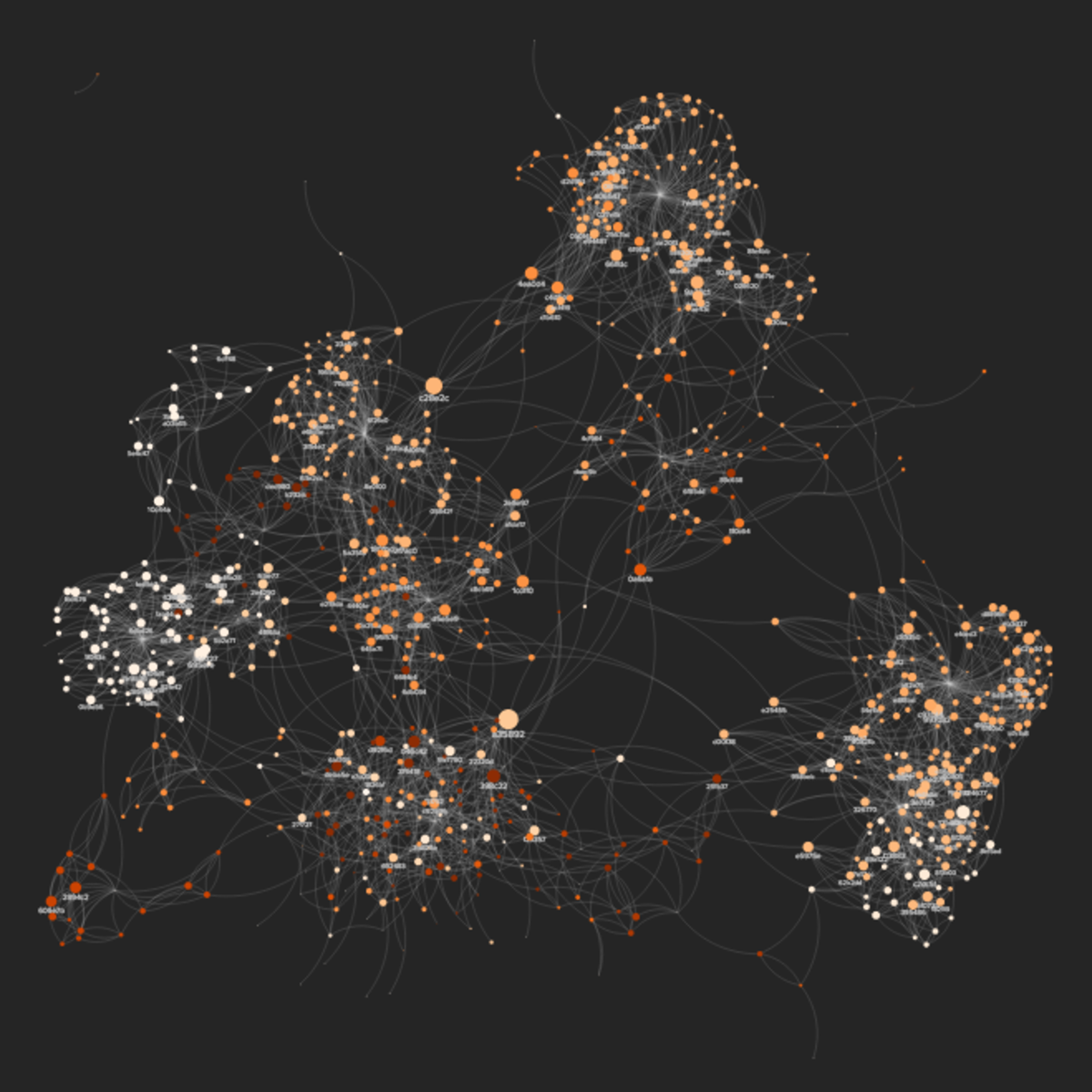

Introduction to Systems and Network Mapping with Kumu

In this 1-hour project-based course, you will create an interactive multi-element relationship map and design visualizations for a real-world social network based on analyses of its network metrics.

Besides helping you to make sense of complex data, relationship maps like the ones we will build here are a great medium to visually present Causal Loop and Stock and Flow diagrams, as well as non-linear dynamics within an ecosystem.

This project will also introduce you to some of the basic concepts behind network theory, which will inform the analyses and interpretations of the maps you will create.

The art and craft of creating and communicating relationship maps is applicable to a wide range of areas, from design and software engineering to organizational consulting and community building. This project is an accessible opportunity for anyone to get hands-on practice with, and knowledge of, this subject. So let's map!

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

© 2024 BoostGrad | All rights reserved