Data Management Courses - Page 36

Data Analysis Using Python

This course provides an introduction to basic data science techniques using Python. Students are introduced to core concepts like Data Frames and joining data, and learn how to use data analysis libraries like pandas, numpy, and matplotlib. This course provides an overview of loading, inspecting, and querying real-world data, and how to answer basic questions about that data. Students will gain skills in data aggregation and summarization, as well as basic data visualization.

Introduction to Computer Vision with TensorFlow

This is a self-paced lab that takes place in the Google Cloud console. In this lab you create a computer vision model that can recognize items of clothing and then explore what affects the model's training.

Database Operations in MariaDB Using Python From Infosys

Have you ever imagined performing various operations on a database without writing a single query? Did you know that Python can be used to accomplish these tasks effortlessly?

By taking this Guided Project, you will be able to accomplish exactly this task!

“Database Operations in MariaDB using Python” is for any system or database administrator who wants to automate their daily tasks of performing routine operations on a database.

By the end of this 1-hour-long Guided Project, you will have created scripts written in Python to perform various database operations in a MariaDB server.

Get ready to easily CREATE, READ, UPDATE and DELETE employee data from a table within a company’s server.

Brought to you by Infosys, a global leader in next-generation digital services and consulting, this project is created by a certified Technology Associate in Python Programming, and Technology Analyst at Infosys.

Let's get started!

CRUD Operations using MongoDB NoSQL

Unlike relational databases, NoSQL databases such as MongoDB store data as collections of fields rather than rows and columns. This model mimics the way humans think of objects and provides a smooth interface for object-oriented applications. Each ‘object’ is stored in a JSON-like format in a data structure called a Document. A Document may, for example, represent a single word and its definition. Each Document is stored in a Collection, which contains one or more Documents. A MongoDB database in turn contains one or more Collections.

In this course, you will create a MongoDB database collection containing words and their definitions. You will then retrieve data from the collection, update data, remove document data from the collection, and finally delete a document, a collection, and a database.
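The Database, Collection, and Document hierarchy described above can be sketched with plain Python dictionaries and lists. This is only an illustration of the data model, not MongoDB driver code; the names `dictionary_db`, `words`, and `find_one`, and the sample words, are hypothetical.

```python
import json

# Hypothetical document: one word and its definition.
document = {"word": "ephemeral", "definition": "lasting a very short time"}

# A collection holds one or more documents.
words = [
    document,
    {"word": "lucid", "definition": "clear and easy to understand"},
]

# A database holds one or more collections, keyed by name.
dictionary_db = {"words": words}


def find_one(collection, **criteria):
    """Return the first document whose fields match all criteria, else None."""
    for doc in collection:
        if all(doc.get(key) == value for key, value in criteria.items()):
            return doc
    return None


match = find_one(dictionary_db["words"], word="lucid")
print(json.dumps(match))
```

A real MongoDB client exposes similar lookups on a collection object, but the in-memory version here needs no server and only shows how the three levels nest.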

Google Cloud Pub/Sub: Qwik Start - Console

This is a self-paced lab that takes place in the Google Cloud console. This hands-on lab shows you how to publish and consume messages with a pull subscriber, using the Google Cloud Platform Console. Watch the short video Simplify Event Driven Processing with Cloud Pub/Sub.

Getting started with Azure IoT Hub

In this 1-hour guided project, you will create an IoT hub and an IoT device in Azure and use a Raspberry Pi web simulator to send telemetry data to the IoT hub. You will then create an Azure Cloud storage account to store the telemetry data and a Stream Analytics job to fetch the data in a CSV file. In the final task, you will analyze and visualize the temperature and humidity telemetry data through line charts and area charts using Microsoft Excel online. In this guided project, you will learn everything you need to know to get started with Azure IoT Hub.

In order to complete this project successfully, you need an Azure account. If you do not have an Azure account, you will be prompted to create a Free Tier Microsoft Azure account in the project.

Building Batch Pipelines in Cloud Data Fusion

This is a self-paced lab that takes place in the Google Cloud console. This lab will teach you how to use the Pipeline Studio in Cloud Data Fusion to build an ETL pipeline. Pipeline Studio exposes the building blocks and built-in plugins for you to build your batch pipeline, one node at a time. You will also use the Wrangler plugin to build and apply transformations to your data that goes through the pipeline.

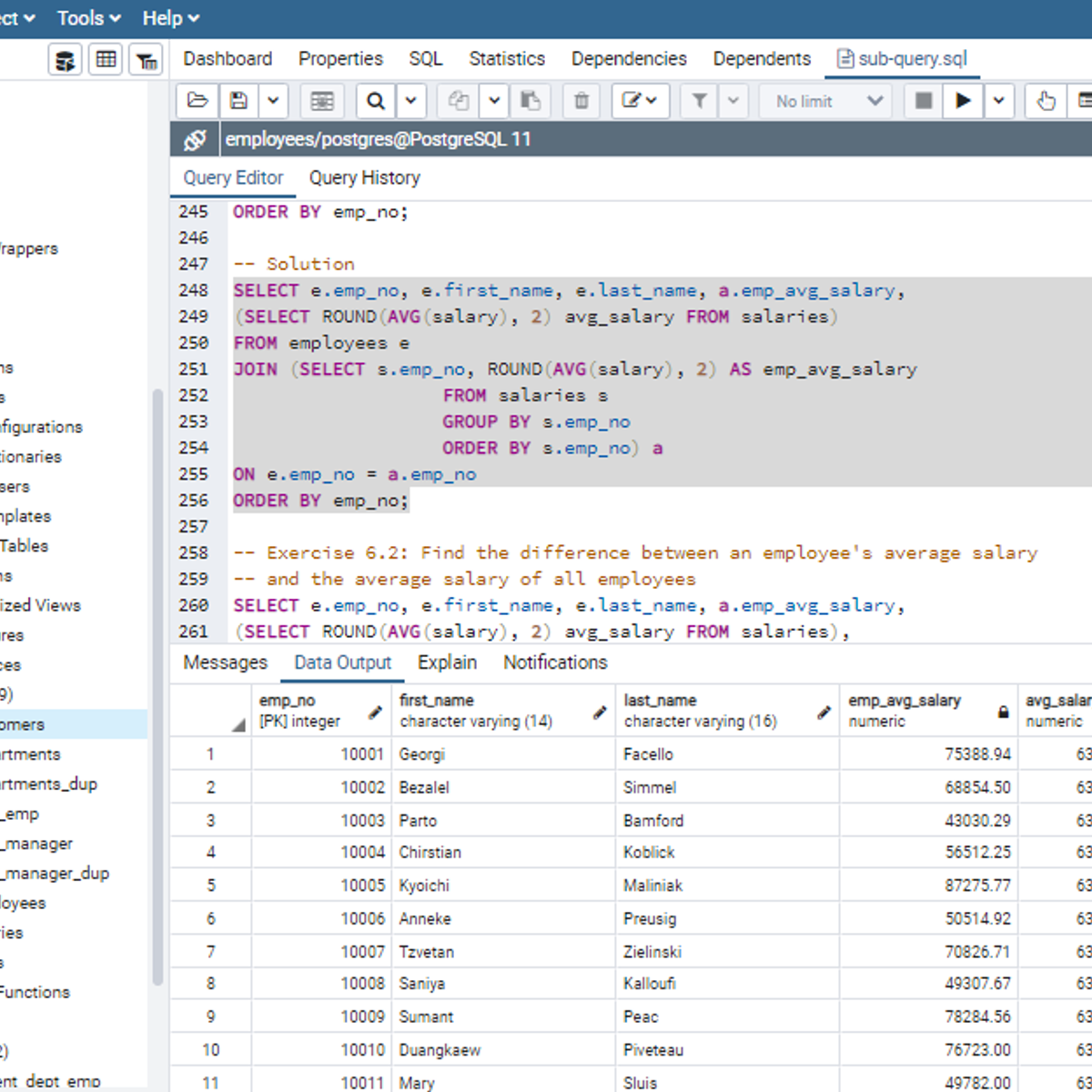

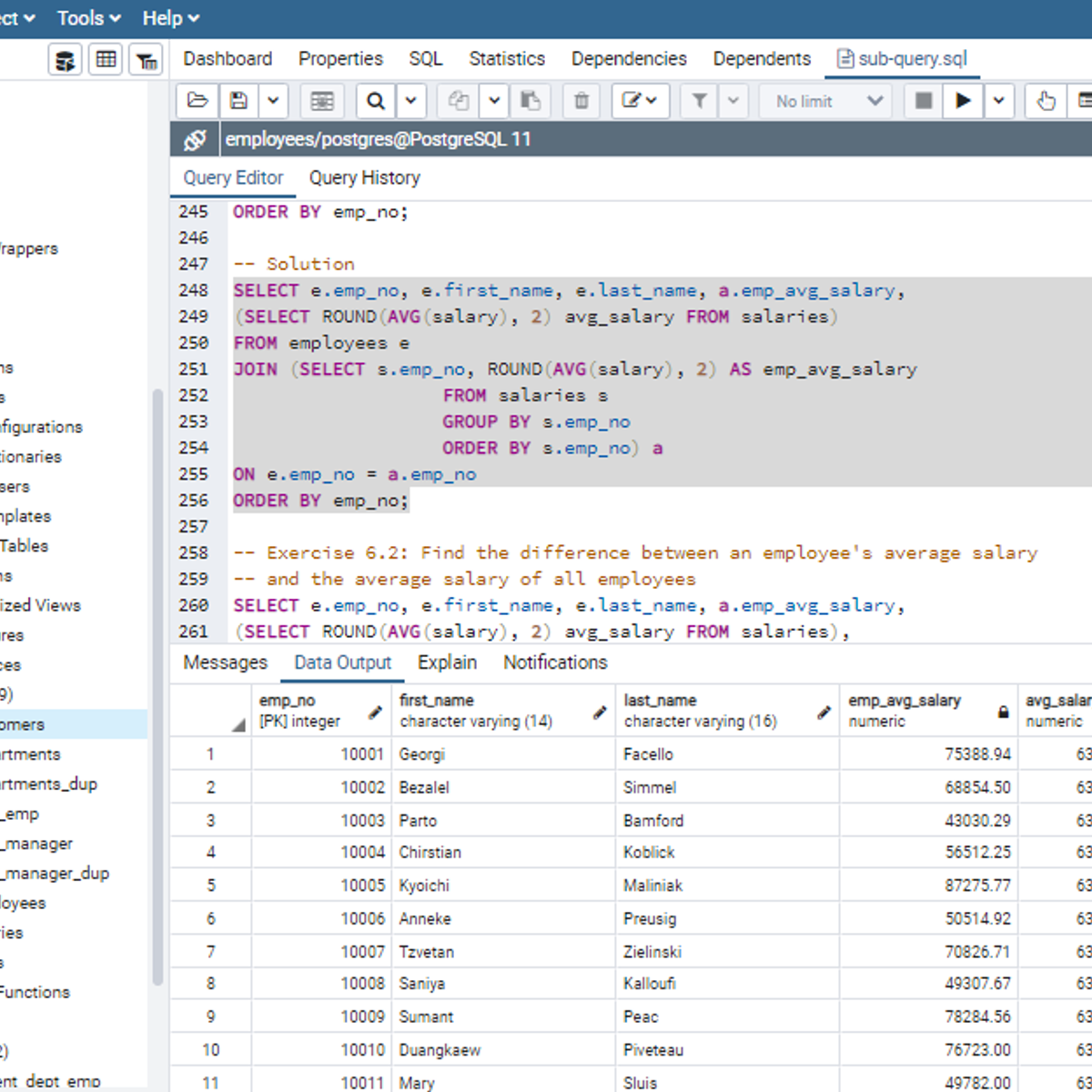

Working with Subqueries in SQL

Welcome to this project-based course, Working with Subqueries in SQL. In this project, you will learn how to use SQL subqueries extensively to query tables in a database.

By the end of this 2-and-a-half-hour-long project, you will be able to use subqueries in the WHERE clause, the FROM clause, and the SELECT clause to retrieve the desired result from a database. We will move systematically, first introducing subqueries in the WHERE clause. Then, we will use subqueries in the FROM and SELECT clauses by writing slightly complex queries for real-life applications. Be assured that you will get your hands really dirty in this project, because you will work on a lot of exercises to reinforce your knowledge of the concept.

Also, for this hands-on project, we will use PostgreSQL as our preferred database management system (DBMS). Therefore, to complete this project, you need prior experience with PostgreSQL. Similarly, this project covers an advanced SQL concept, so a good foundation in writing SQL queries is vital.

If you are not familiar with writing queries in SQL and want to learn these concepts, start with my previous guided projects titled “Querying Databases using SQL SELECT statement” and “Mastering SQL Joins.” I taught these guided projects using PostgreSQL, so taking them will give you the prerequisites needed to complete this Working with Subqueries in SQL project. However, if you are comfortable writing queries in PostgreSQL, please join me on this wonderful ride! Let’s get our hands dirty!
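The three subquery positions mentioned above (WHERE, FROM, and SELECT) can be illustrated with a minimal sketch. The project itself uses PostgreSQL; Python's built-in `sqlite3` module is swapped in here only so the example runs without a database server. The `employees` table and its rows are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for PostgreSQL (an assumption
# made so the example is self-contained).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY, name TEXT, dept TEXT, salary REAL
    );
    INSERT INTO employees (name, dept, salary) VALUES
        ('Ana', 'Sales', 50000),
        ('Ben', 'Sales', 70000),
        ('Cho', 'IT', 90000),
        ('Dev', 'IT', 60000);
""")

# Subquery in WHERE: employees paid more than the overall average.
above_avg = conn.execute("""
    SELECT name FROM employees
    WHERE salary > (SELECT AVG(salary) FROM employees)
    ORDER BY name
""").fetchall()

# Subquery in FROM: a derived table of per-department averages,
# filtered by the outer query.
high_depts = conn.execute("""
    SELECT dept, avg_salary
    FROM (SELECT dept, AVG(salary) AS avg_salary
          FROM employees GROUP BY dept) AS dept_avg
    WHERE avg_salary > 65000
""").fetchall()

# Subquery in SELECT: a scalar subquery repeated on every row.
with_max = conn.execute("""
    SELECT name, salary, (SELECT MAX(salary) FROM employees) AS max_salary
    FROM employees
    ORDER BY name
""").fetchall()

print(above_avg)
print(high_depts)
print(with_max[0])
```

The same three queries should run unchanged in PostgreSQL, since they use only standard SQL features.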

Exploring and Preparing your Data with BigQuery

In this course, we look at the common challenges data analysts face and how to solve them with the big data tools on Google Cloud. You’ll pick up some SQL along the way and become very familiar with using BigQuery and Dataprep to analyze and transform your datasets.

This is the first course of the From Data to Insights with Google Cloud series. After completing this course, enroll in the Creating New BigQuery Datasets and Visualizing Insights course.

Redacting Sensitive Data with Cloud Data Loss Prevention

This is a self-paced lab that takes place in the Google Cloud console. In this lab, you will learn the basic capabilities of Cloud Data Loss Prevention and the various ways it can be used to protect data.

© 2024 BoostGrad | All rights reserved