Computer Science Courses - Page 183

Developing Android Apps with App Inventor

The course will give students hands-on experience in developing interesting Android applications. No previous experience in programming is needed, and the course is suitable for students with any level of computing experience. MIT App Inventor will be used in the course. It is a blocks-based programming tool that allows everyone, even novices, to start programming and build fully functional apps for Android devices. Students are encouraged to use their own Android devices for hands-on testing and exploitation.

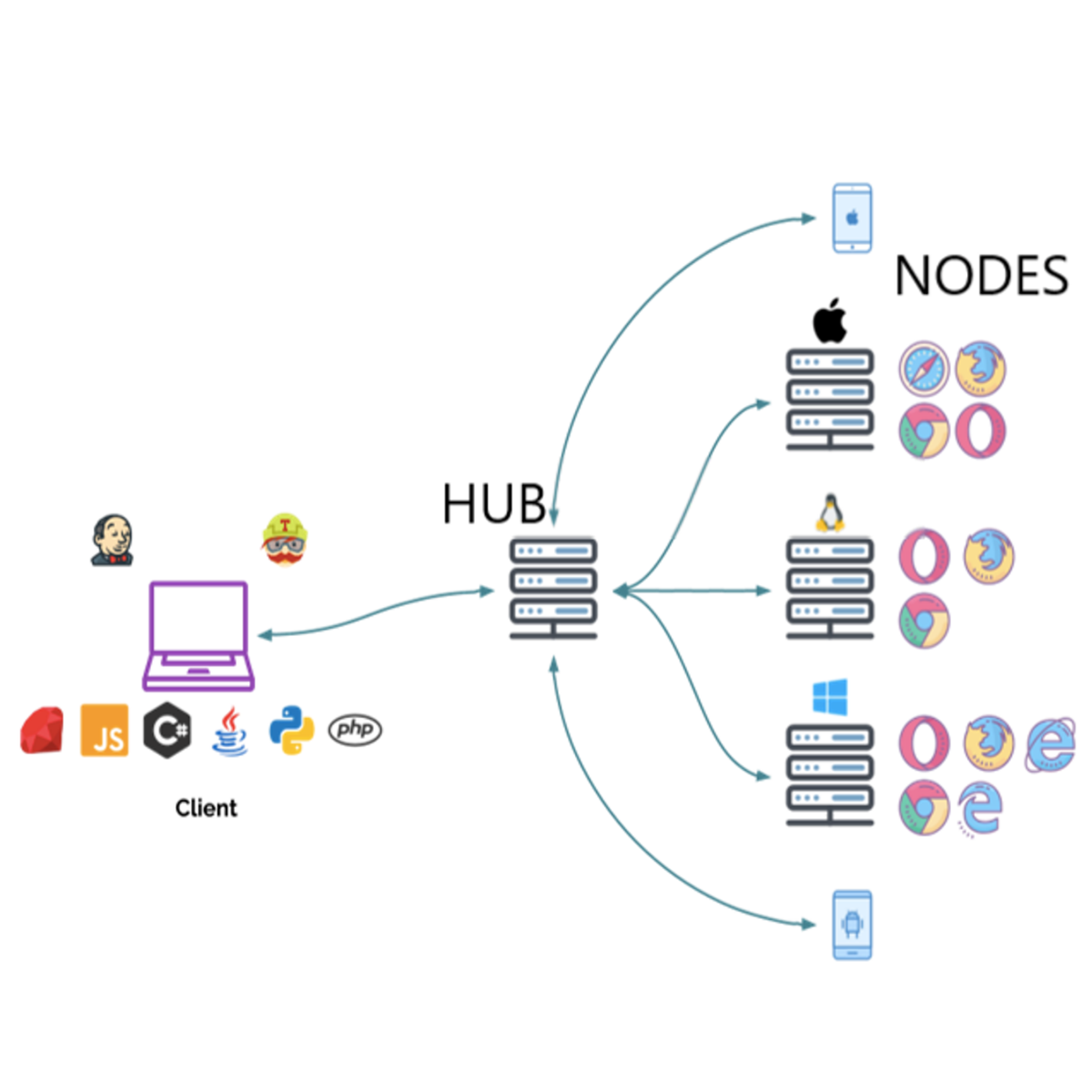

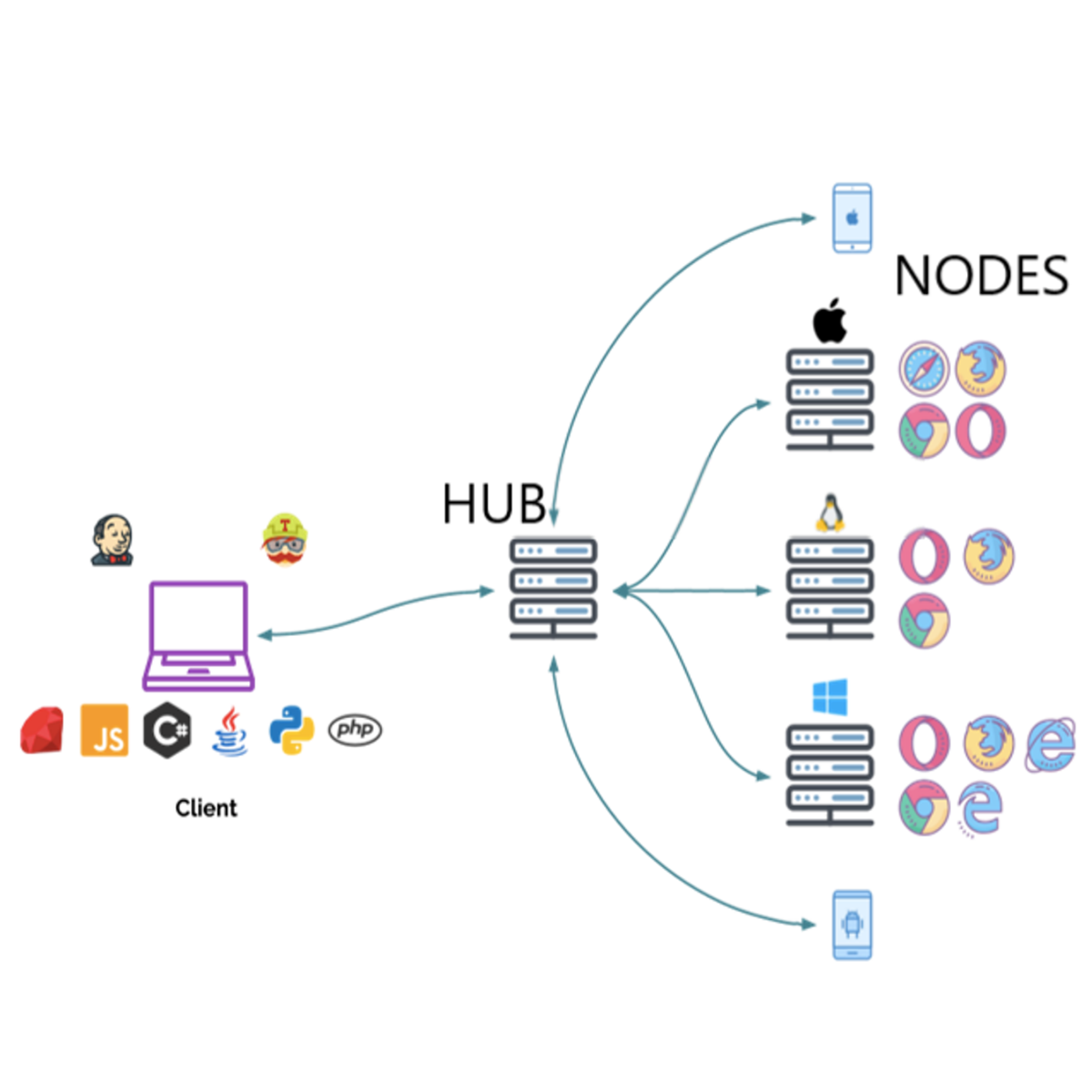

Selenium Grid - Running Selenium tests in parallel

“Selenium automates browsers.” That’s it! What you do with this power is up to you!

Selenium Grid is one of the components of Selenium which allows users to run tests in parallel on multiple browsers, multiple operating systems, and machines.

Running tests in parallel reduces the time and cost of test execution and results in better ROI.

Through hands-on, practical experience, you will work through concepts such as setting up a Selenium Grid hub-and-node network and running Selenium tests in parallel on multiple browsers.

How to Combine Multiple Images in Adobe Photoshop

By the end of this project, you’ll be able to combine multiple images into one, using Adobe Photoshop. Inside Photoshop, you can quickly import, move, and create customized composite images.

During this project, you’ll get used to navigating some important Photoshop tools and practice importing images. Then you’ll use layers, masks, and blend modes to turn multiple images into a single new composite. Once you’re finished creating your composite, you’ll learn how to export your work in one piece or automatically turn layers into separate files.

By the end of the project, you’ll be able to produce eye-catching composites in minutes.

Managing Network Security

Almost every organization uses computer networks to share information and to support its business operations. When we allow network access to data, that data is exposed to threats from inside and outside the organization. This course examines the threats associated with using internal and external networks and how to manage the protection of information when it’s accessible via networks.

In this course, a learner will be able to:

● Describe the threats to data from information communication technology (ICT)

● Identify the issues and practices associated with managing network security

● Identify the practices, tools, and methodologies associated with assessing network security

● Describe the components of an effective network security program

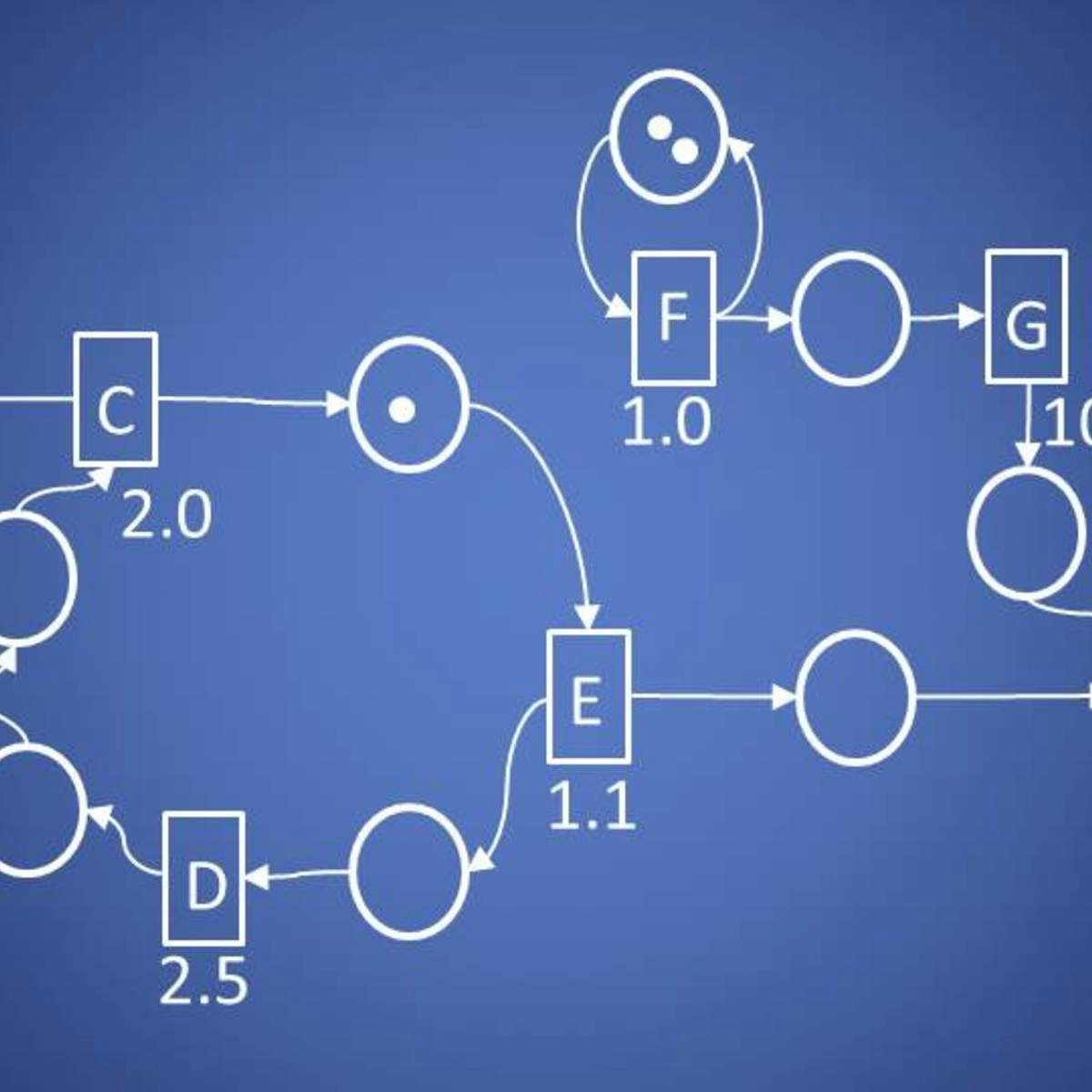

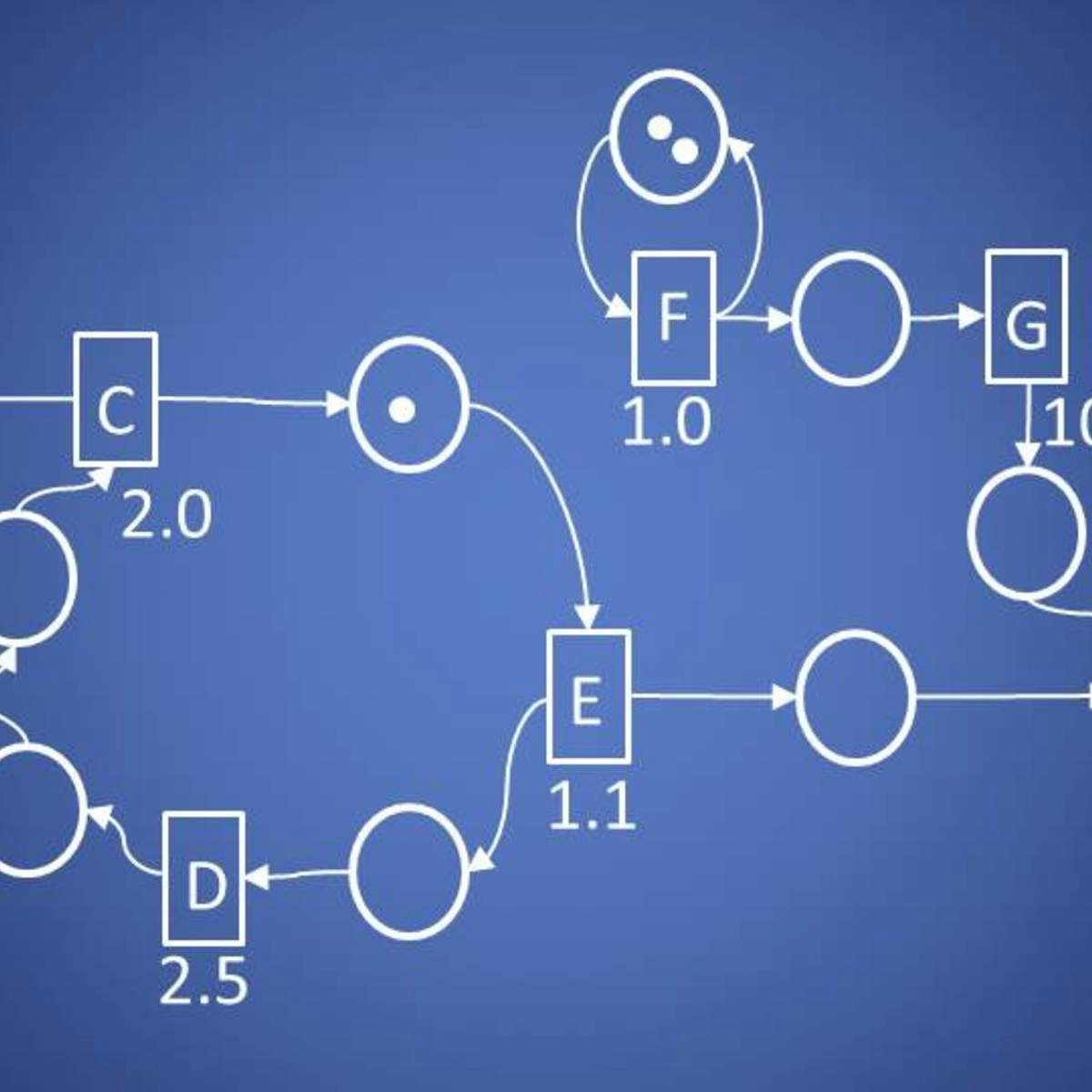

Quantitative Formal Modeling and Worst-Case Performance Analysis

Welcome to Quantitative Formal Modeling and Worst-Case Performance Analysis. In this course, you will learn about modeling and solving performance problems in a fashion popular in theoretical computer science, and generally train your abstract thinking skills.

After finishing this course, you will have learned to think about the behavior of systems in terms of token production and consumption, and you will be able to formalize this thinking mathematically in terms of prefix orders and counting functions. You will have learned about Petri nets, about timing, and about scheduling of token consumption/production systems, and for the special class of Petri nets known as single-rate dataflow graphs, you will know how to perform a worst-case analysis of basic performance metrics, like throughput, latency and buffering.

Disclaimer: As you will notice, there is an abundance of small examples in this course, but at first sight there are not many industrial-size systems being discussed. The reason for this is twofold. Firstly, it is not my intention to teach you performance analysis skills up to the level of what you will need in industry. Rather, I would like to teach you to think about modeling and performance analysis in general and abstract terms, because that is what you will need to do whenever you encounter any performance analysis problem in the future. After all, abstract thinking is the most revered skill required for any academic-level job in any engineering discipline, and if you are able to phrase your problems mathematically, it will become easier for you to spot mistakes and to communicate your ideas with others, and you will already have taken a big step towards actually solving the problem. Secondly, although dataflow techniques are applicable and being used in industry, the subclass of single-rate dataflow is too restrictive to be of practical use in large modeling examples. The analysis principles of other dataflow techniques, however, are all based on single-rate dataflow. So this course is a good primer for any more advanced course on the topic.

This course is part of the university course on Quantitative Evaluation of Embedded Systems (QEES) as given in the Embedded Systems master curriculum of the EIT-Digital university, and of the Dutch 3TU consortium consisting of TU/e (Eindhoven), TUD (Delft) and UT (Twente). The course material is exactly the same as the first three weeks of QEES, but the examination of QEES is at a slightly higher level of difficulty, which cannot (yet) be obtained in an online course.

Real-World Cloud PM 2 of 3: Managing, Innovating, Pricing

Sponsored by AMAZON WEB SERVICES (AWS). Learn real-world technical and business skills for product managers or any job family involved in the rapidly expanding cloud computing industry. Ace the AWS Certified Cloud Practitioner Exam.

This course is the 2nd in a 3-course Specialization. Complete the first course before attempting this one.

Featuring

* NANCY WANG, GM of AWS Data Protection Services, AWS; Founder and CEO, Advancing Women in Tech (AWIT)

* GORDON YU, Technical Product Manager, AWS; General Counsel and Coursera Director, AWIT

Teaching Impacts of Technology: Data Collection, Use, and Privacy

In this course you’ll focus on how constant data collection and big data analysis have impacted us, exploring the interplay between using your data and protecting it, as well as thinking about what it could do for you in the future. This will be done through a series of paired teaching sections, exploring a specific “Impact of Computing” in your typical day and the “Technologies and Computing Concepts” that enable that impact, all at a K12-appropriate level.

This course is part of a larger Specialization through which you’ll learn impacts of computing concepts you need to know, organized into 5 distinct digital “worlds”, as well as learn pedagogical techniques and evaluate lesson plans and resources to utilize in your classroom. By the end, you’ll be prepared to teach pre-college learners to be both savvy and effective participants in their digital world.

In this particular digital world (personal data), you’ll explore the following Impacts & Technology pairs:

Impacts (Show me what I want to see!): Internet Privacy, Custom Ads, Personalization of web pages

Technologies and Computing Concepts: Cookies, Web vs Internet, https, Web Servers

Impacts (Use my data…. But protect it!): Common Cybersecurity knowledge levels, ISP data collection, Internet design, finding out what is known about you online, software terms and services

Technologies and Computing Concepts: DNS, Cryptography (ciphers, hashing, encryption, SSL), Deep and Dark Web

Impacts (What could my data do for me in the future?): What is Big Data, Machine Learning finds new music, Wearable technologies.

Technologies and Computing Concepts: AI vs ML, Supervised vs Unsupervised learning, Neural Networks, Recommender systems, Speech recognition

In the pedagogy section for this course, in which best practices for teaching computing concepts are explored, you’ll learn how to apply Bloom’s taxonomy to create meaningful CS learning objectives, why retrieval-based learning matters, how to build learning activities with online simulators, and how to use “fun” books to teach computing.

In terms of CSTA K-12 computer science standards, we’ll primarily cover learning objectives within the “impacts of computing” concept, while also including some within the “networks and the Internet” concept and the “data and analysis” concept. Practices we cover include “fostering an inclusive computing culture”, “recognizing and defining computational problems”, and “communicating about computing”.

Build a Guessing Game Application using C++

In this project you will create a guessing game application that pits the computer against the user. You will create variables, static methods, decision constructs, and loops in C++ to create the game.

C++ is a language developed to provide an object-oriented version of the C language. Learning C++ gives the programmer a wide variety of career paths to choose from. It is used in many applications, from text editors to games to device drivers to server software such as SQL Server. C is among the most efficient high-level languages, and most C code can be used within C++ code, since C++ compilers largely support C as well.

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

IBM z/OS Rexx Programming

This course is designed to teach you the basic skills required to write programs using the REXX language in z/OS. The course covers the TSO extensions to REXX and interaction with other environments such as the MVS console, running REXX in batch jobs, and compiling REXX.

A total of 11 hands-on labs on an IBM Z server (via remote Skytap access) are part of this course.

On successful completion of the course, learners can earn their badge. Details here: https://www.credly.com/org/ibm/badge/ibm-z-os-rexx-programming

ETL and Data Pipelines with Shell, Airflow and Kafka

After taking this course, you will be able to describe two different approaches to converting raw data into analytics-ready data. One approach is the Extract, Transform, Load (ETL) process. The other contrasting approach is the Extract, Load, and Transform (ELT) process. ETL processes apply to data warehouses and data marts. ELT processes apply to data lakes, where the data is transformed on demand by the requesting/calling application.

Both ETL and ELT extract data from source systems, move the data through the data pipeline, and store the data in destination systems. During this course, you will experience how ELT and ETL processing differ and identify use cases for both.
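As a rough illustration of the difference described above, the following Python sketch contrasts the two orderings. It is not taken from the course material: the sample records, the field names, and the functions `extract`, `transform`, `run_etl`, and `run_elt` are all hypothetical, and plain in-memory lists stand in for a data warehouse and a data lake.

```python
# Hypothetical raw records, invented purely for illustration.
RAW_ROWS = [
    {"id": "1", "amount": " 19.99 ", "country": "us"},
    {"id": "2", "amount": "5.00", "country": "de"},
]


def extract():
    """Return a copy of the source rows, exactly as they arrive."""
    return [dict(row) for row in RAW_ROWS]


def transform(row):
    """Turn one raw row into an analytics-ready row."""
    return {
        "id": int(row["id"]),
        "amount": float(row["amount"].strip()),
        "country": row["country"].upper(),
    }


def run_etl():
    """ETL: transform before loading, so the store holds only clean rows."""
    warehouse = [transform(row) for row in extract()]
    return warehouse


def run_elt():
    """ELT: load raw rows first; transform later, when a caller asks."""
    lake = extract()  # raw data is stored untouched

    def query():
        # The transformation runs on demand, at read time.
        return [transform(row) for row in lake]

    return lake, query


if __name__ == "__main__":
    print(run_etl())
    lake, query = run_elt()
    print(lake)     # still raw strings
    print(query())  # transformed only when requested
```

In this sketch both paths end with the same analytics-ready rows; what differs is when the transformation runs and whether the raw form is retained in the destination.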

You will identify methods and tools used for extracting the data, merging extracted data either logically or physically, and importing data into data repositories. You will also define transformations to apply to source data to make the data credible, contextual, and accessible to data users. You will be able to outline some of the multiple methods for loading data into the destination system, verifying data quality, monitoring load failures, and using recovery mechanisms in case of failure.

Finally, you will complete a shareable final project that enables you to demonstrate the skills you acquired in each module.