Data Science Courses - Page 44

Showing results 431-440 of 1407

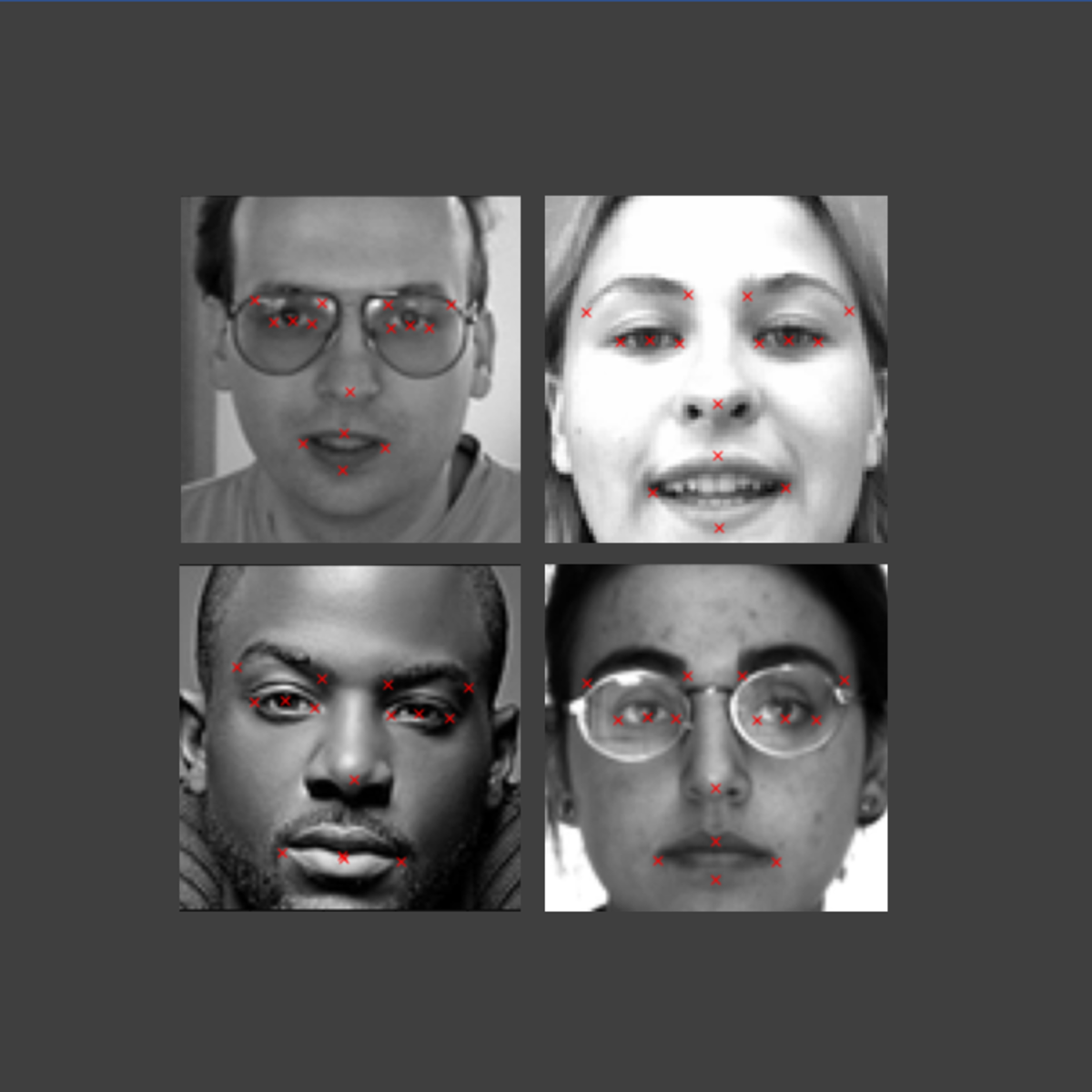

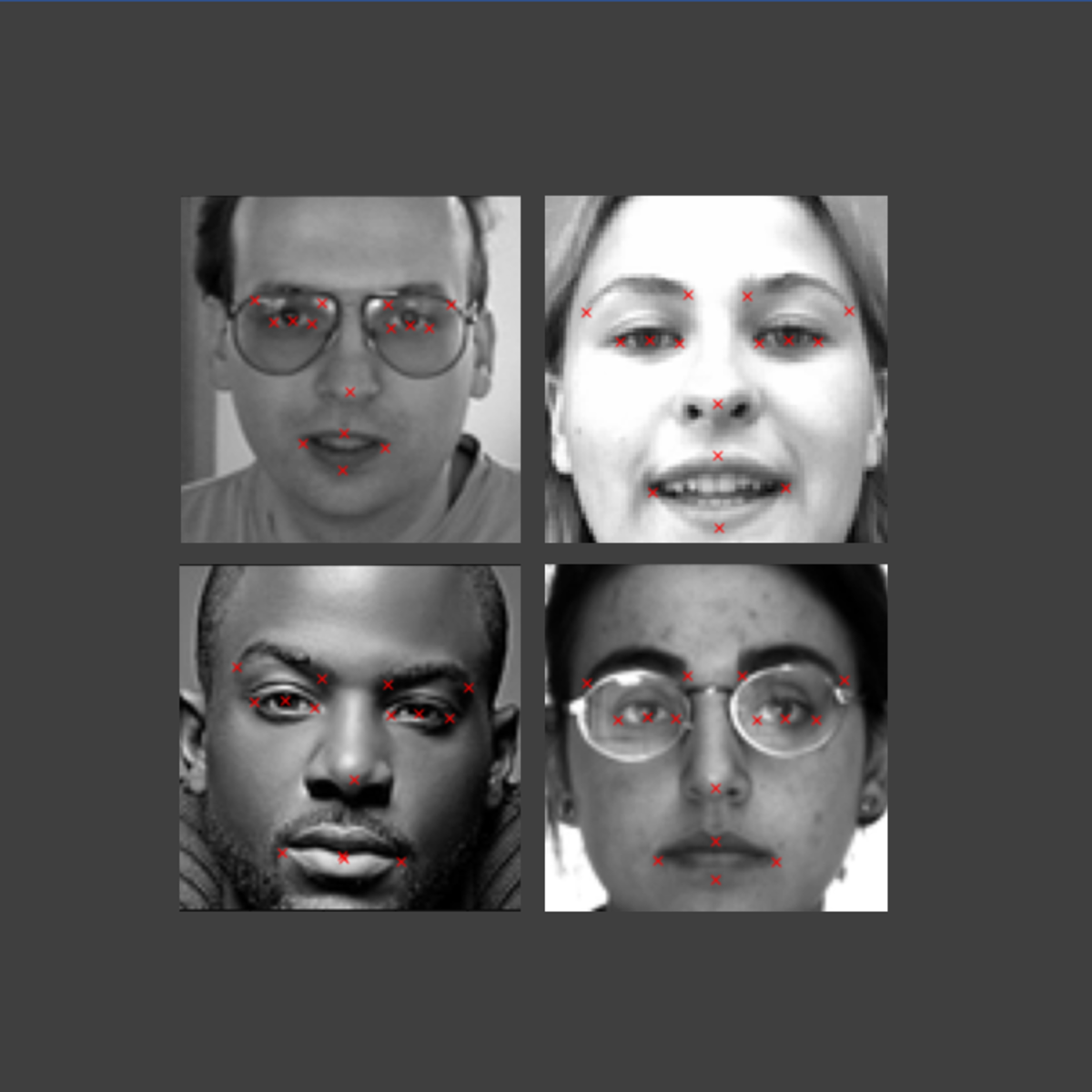

Emotion AI: Facial Key-points Detection

In this 1-hour, project-based course, you will be able to:

- Understand the theory and intuition behind Deep Learning, Convolutional Neural Networks (CNNs) and Residual Neural Networks.

- Import key libraries and the dataset, and visualize images.

- Perform data augmentation to increase the size of the dataset and improve model generalization capability.

- Build a deep learning model based on a Convolutional Neural Network and residual blocks using Keras with TensorFlow 2.0 as a backend.

- Compile and fit the deep learning model to the training data.

- Assess the performance of the trained CNN and check how well it generalizes using various KPIs.

- Improve network performance using regularization techniques such as dropout.
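As a rough illustration of the data augmentation step listed above, the sketch below mirrors an image left to right and moves its key-points to match. It is not taken from the course: the nested-list image layout, the `(x, y)` key-point format, and the function name `flip_horizontal` are all assumptions made for this example.

```python
def flip_horizontal(image, keypoints):
    """Mirror an image left-to-right and move its key-points to match.

    image: list of rows, each row a list of pixel values (assumed layout).
    keypoints: list of (x, y) pixel coordinates (assumed format).
    """
    width = len(image[0])
    flipped_image = [list(reversed(row)) for row in image]
    # A point in column x lands in column (width - 1 - x); y is unchanged.
    flipped_keypoints = [(width - 1 - x, y) for x, y in keypoints]
    return flipped_image, flipped_keypoints


# Tiny 2x4 "image" with one key-point on the bright pixel at (x=0, y=1).
image = [
    [0, 0, 0, 0],
    [9, 0, 0, 0],
]
keypoints = [(0, 1)]

aug_image, aug_keypoints = flip_horizontal(image, keypoints)
```

Adding the flipped copy to the training set doubles the number of examples. With real facial key-points, points labelled left and right (for example the two eyes) would typically also need their labels swapped after the flip.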

Computer Vision with Embedded Machine Learning

Computer vision (CV) is a fascinating field of study that attempts to automate the process of assigning meaning to digital images or videos. In other words, we are helping computers see and understand the world around us! A number of machine learning (ML) algorithms and techniques can be used to accomplish CV tasks, and as ML becomes faster and more efficient, we can deploy these techniques to embedded systems.

This course, offered by a partnership among Edge Impulse, OpenMV, Seeed Studio, and the TinyML Foundation, will give you an understanding of how deep learning with neural networks can be used to classify images and detect objects in images and videos. You will have the opportunity to deploy these machine learning models to embedded systems, which is known as embedded machine learning or TinyML.

Familiarity with the Python programming language and basic ML concepts (such as neural networks, training, inference, and evaluation) is advised to understand some topics as well as complete the projects. Some math (reading plots, arithmetic, algebra) is also required for quizzes and projects. If you have not done so already, taking the "Introduction to Embedded Machine Learning" course is recommended.

This course covers the concepts and vocabulary necessary to understand how convolutional neural networks (CNNs) operate, and it covers how to use them to classify images and detect objects. The hands-on projects will give you the opportunity to train your own CNNs and deploy them to a microcontroller and/or single board computer.

Optimization for Decision Making

In this data-driven world, companies often want to know the "best" course of action given the data. For example, manufacturers need to decide how many units of a product to produce given the estimated demand and raw material availability. Should they make all the products in-house or buy some from a third party to meet the demand? Prescriptive analytics is the branch of analytics that can answer these questions by prescribing data-based decisions. The most important method in the prescriptive analytics toolbox is optimization. This course introduces students to the basic principles of linear optimization for decision-making. Using practical examples, it teaches how to convert a problem scenario into a mathematical model that can be solved to get the best business outcome. We will learn to identify the decision variables, objective function, and constraints of a problem, and use them to formulate and solve an optimization problem in a spreadsheet using Excel Solver.
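To make the three ingredients concrete, here is a minimal sketch of a production-planning problem in Python. Every number in it (profits, material and labor limits, the demand cap) is hypothetical, and the exhaustive search over whole-unit plans is only a stand-in for what a solver such as Excel Solver does far more efficiently.

```python
# Decision variables: units of product A and product B to produce.
# Objective: maximize total profit.
# Constraints: raw material, labor hours, and a demand cap on A.
# All figures below are hypothetical illustration values.
PROFIT_A, PROFIT_B = 30, 20          # profit per unit
MATERIAL_A, MATERIAL_B = 2, 1        # material used per unit
MATERIAL_AVAILABLE = 100
LABOR_A, LABOR_B = 1, 1              # labor hours per unit
LABOR_AVAILABLE = 80
MAX_DEMAND_A = 40


def best_production_plan():
    """Try every whole-unit plan and keep the feasible one with top profit."""
    best = None
    for a in range(MAX_DEMAND_A + 1):
        for b in range(LABOR_AVAILABLE + 1):
            within_material = MATERIAL_A * a + MATERIAL_B * b <= MATERIAL_AVAILABLE
            within_labor = LABOR_A * a + LABOR_B * b <= LABOR_AVAILABLE
            if within_material and within_labor:
                profit = PROFIT_A * a + PROFIT_B * b
                if best is None or profit > best[0]:
                    best = (profit, a, b)
    return best


profit, units_a, units_b = best_production_plan()
```

For these made-up figures the best plan uses up both the material and the labor budget, which is typical of linear problems: the optimum sits at a corner of the feasible region.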

Data Wrangling, Analysis and AB Testing with SQL

This course allows you to apply the SQL skills taught in “SQL for Data Science” to four increasingly complex and authentic data science inquiry case studies. We'll learn how to convert timestamps of all types to common formats and perform date/time calculations. We'll select and perform the optimal JOIN for a data science inquiry and clean data within an analysis dataset by deduping, running quality checks, backfilling, and handling nulls. We'll learn how to segment and analyze data per segment using window functions and use CASE statements to execute conditional logic to address a data science inquiry. We'll also describe how to convert a query into a scheduled job and how to insert data into a date partition. Finally, given a predictive analysis need, we'll engineer a feature from raw data using the tools and skills we've built over the course. The real-world application of these skills will give you the framework for performing the analysis of an A/B test.
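The deduping and null-handling steps described above might look like the following sketch, which uses Python's built-in `sqlite3` module so that it runs without a database server. The `events` table, its columns, and the sample rows are invented for illustration, and the query assumes an SQLite build with window-function support (version 3.25 or later).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (user_id INTEGER, event_time TEXT, variant TEXT)"
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        (1, "2024-01-01 09:00:00", "A"),
        (1, "2024-01-01 09:05:00", "A"),   # second event for user 1
        (2, "2024-01-01 10:00:00", "B"),
        (3, "2024-01-02 08:30:00", None),  # missing variant
    ],
)

# Keep only each user's earliest event (dedupe with ROW_NUMBER) and
# replace a missing variant with a placeholder label (null handling).
query = """
SELECT user_id, event_time, COALESCE(variant, 'unassigned') AS variant
FROM (
    SELECT *,
           ROW_NUMBER() OVER (
               PARTITION BY user_id ORDER BY event_time
           ) AS rn
    FROM events
)
WHERE rn = 1
ORDER BY user_id
"""
rows = conn.execute(query).fetchall()
conn.close()
```

The same `PARTITION BY` pattern extends to per-segment analysis: partitioning by `variant` instead of `user_id` would number or aggregate rows within each test arm.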

Create audio transcripts with Amazon Transcribe

In this guided project, you will learn how to use Amazon Transcribe to generate your own audio transcripts quickly.

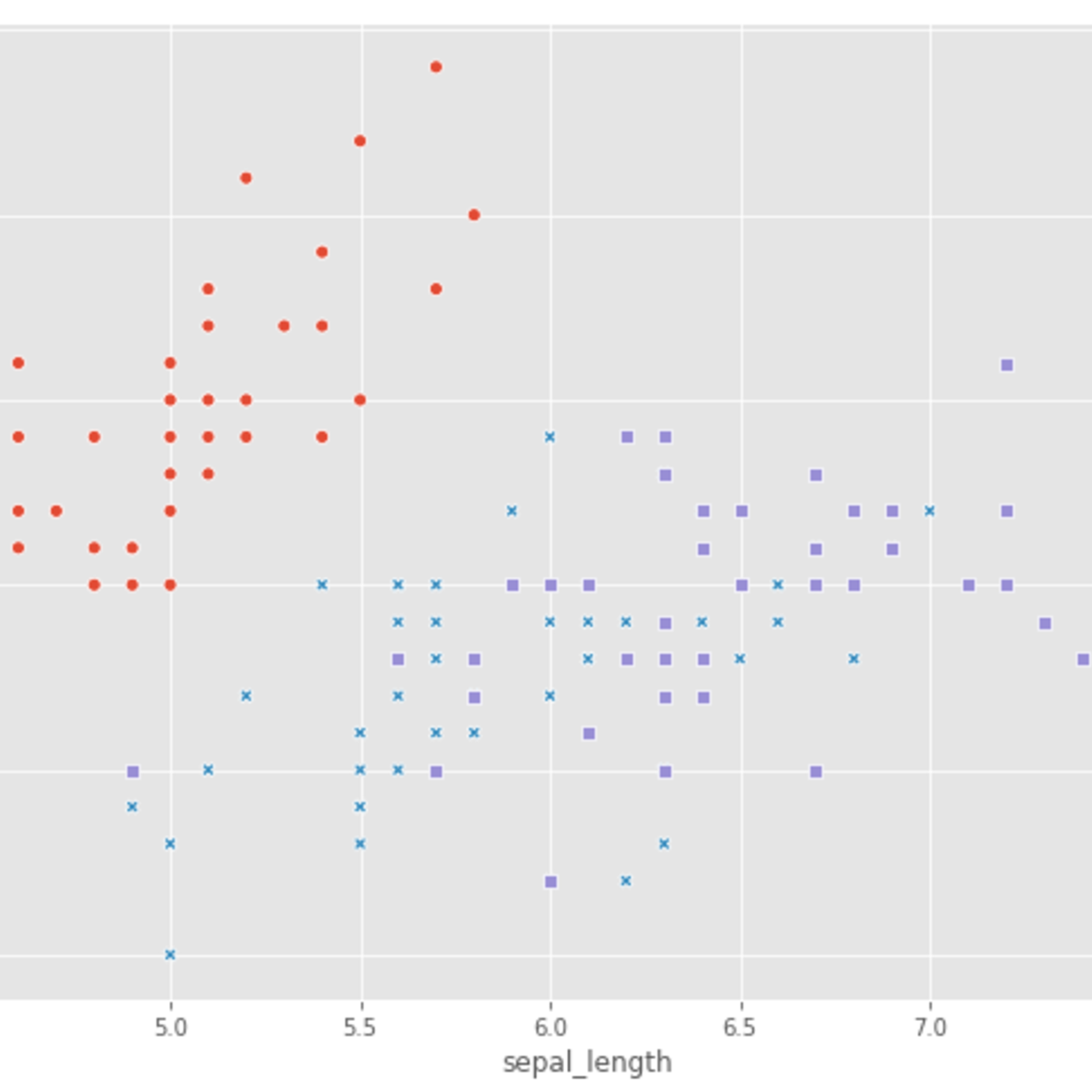

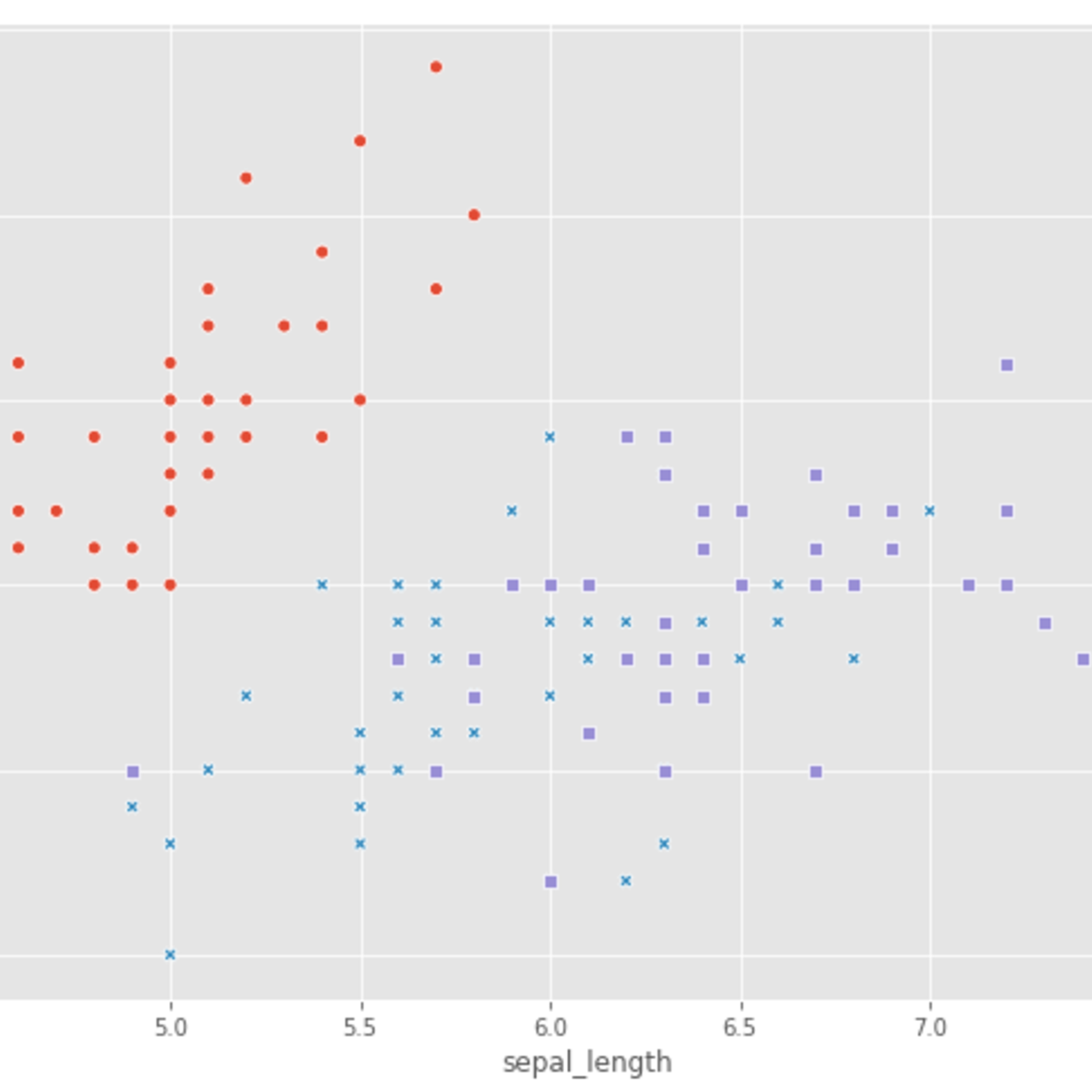

Principal Component Analysis with NumPy

Welcome to this 2-hour, project-based course on Principal Component Analysis with NumPy and Python. In this project, you will do all the machine learning without using any of the popular machine learning libraries such as scikit-learn and statsmodels. The aim of this project is to implement all the machinery of the various learning algorithms yourself, so you have a deeper understanding of the fundamentals. By the time you complete this project, you will be able to implement and apply PCA from scratch using NumPy in Python, conduct basic exploratory data analysis, and create simple data visualizations with Seaborn and Matplotlib. The prerequisites for this project are prior programming experience in Python and a basic understanding of machine learning theory.
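For orientation, one common way to implement PCA from scratch with NumPy is through an eigendecomposition of the covariance matrix, sketched below. This is not necessarily the approach the course takes; the function name `pca` and the synthetic two-feature dataset are assumptions made for the example.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components.

    Returns the projected data, the component vectors (as columns),
    and the fraction of total variance each kept component explains.
    """
    # Center each feature so the covariance is computed around the mean.
    X_centered = X - X.mean(axis=0)
    cov = np.cov(X_centered, rowvar=False)
    # eigh is suited to symmetric matrices; it returns ascending eigenvalues.
    eigenvalues, eigenvectors = np.linalg.eigh(cov)
    order = np.argsort(eigenvalues)[::-1]
    eigenvalues = eigenvalues[order]
    eigenvectors = eigenvectors[:, order]
    components = eigenvectors[:, :n_components]
    projected = X_centered @ components
    explained_ratio = eigenvalues[:n_components] / eigenvalues.sum()
    return projected, components, explained_ratio


# Synthetic data: the second feature is roughly twice the first.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2 * t + 0.1 * rng.normal(size=200)])

projected, components, explained_ratio = pca(X, n_components=1)
```

Because the two features are almost perfectly correlated, a single component captures nearly all of the variance, and its direction is close to the line along which the second feature is twice the first.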

This course runs on Coursera's hands-on project platform called Rhyme. On Rhyme, you do projects in a hands-on manner in your browser. You will get instant access to pre-configured cloud desktops containing all of the software and data you need for the project. Everything is already set up directly in your internet browser so you can just focus on learning. For this project, you’ll get instant access to a cloud desktop with Python, Jupyter, NumPy, and Seaborn pre-installed.

Meaningful Predictive Modeling

This course will help us to evaluate and compare the models we have developed in previous courses. So far we have developed techniques for regression and classification, but how low should the error of a classifier be (for example) before we decide that the classifier is "good enough"? Or how do we decide which of two regression algorithms is better?

By the end of this course you will be familiar with diagnostic techniques that allow you to evaluate and compare classifiers, as well as performance measures that can be used in different regression and classification scenarios. We will also study the training/validation/test pipeline, which can be used to ensure that the models you develop will generalize well to new (or "unseen") data.
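A minimal sketch of the training/validation/test pipeline follows, using only the Python standard library. The synthetic data, the two candidate models, and the helper names are hypothetical; the point is only the division of labor between the three subsets.

```python
import random


def train_val_test_split(items, val_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle once, then cut the data into three disjoint subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    n_val = int(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def mse(pairs, predict):
    """Mean squared error of a predictor over (x, y) pairs."""
    return sum((y - predict(x)) ** 2 for x, y in pairs) / len(pairs)


# Synthetic data: y is roughly 3x plus a little noise.
rng = random.Random(1)
data = [(x, 3.0 * x + rng.gauss(0, 1)) for x in range(1, 51)]
train, val, test = train_val_test_split(data)

# Fit both candidate models on the training set only.
mean_y = sum(y for _, y in train) / len(train)
slope = sum(x * y for x, y in train) / sum(x * x for x, _ in train)
candidates = {
    "baseline_mean": lambda x: mean_y,
    "linear": lambda x: slope * x,
}

# Choose between models on the validation set ...
val_errors = {name: mse(val, model) for name, model in candidates.items()}
best_name = min(val_errors, key=val_errors.get)
# ... and report the chosen model's error on the untouched test set.
test_error = mse(test, candidates[best_name])
```

Keeping the test set out of both fitting and model selection is what lets `test_error` serve as an estimate of performance on unseen data.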

Building Resilient Streaming Analytics Systems on Google Cloud

Processing streaming data is becoming increasingly popular as streaming enables businesses to get real-time metrics on business operations. This course covers how to build streaming data pipelines on Google Cloud. It describes Pub/Sub for handling incoming streaming data. The course also covers how to apply aggregations and transformations to streaming data using Dataflow, and how to store processed records to BigQuery or Cloud Bigtable for analysis. Learners will get hands-on experience building streaming data pipeline components on Google Cloud using Qwiklabs.
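The idea of aggregating a stream over fixed time windows can be sketched in plain Python as below. This does not use the Dataflow, Pub/Sub, or BigQuery APIs; it only illustrates the concept of a fixed (tumbling) window, and the event format of `(timestamp_in_seconds, key)` pairs is an assumption.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key within fixed, non-overlapping time windows.

    events: iterable of (timestamp_in_seconds, key) pairs (assumed format).
    Returns a dict mapping (window_start, key) to the number of events.
    """
    counts = defaultdict(int)
    for timestamp, key in events:
        # Every timestamp belongs to exactly one window.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


events = [
    (0, "checkout"),
    (12, "checkout"),
    (59, "view"),
    (60, "checkout"),
    (61, "view"),
    (125, "view"),
]
result = tumbling_window_counts(events, window_seconds=60)
```

A real streaming system also has to cope with late and out-of-order events, which this batch-style sketch ignores.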

Image Compression with K-Means Clustering

In this project, you will apply the k-means clustering unsupervised learning algorithm using scikit-learn and Python to build an image compression application with interactive controls. By the end of this 45-minute project, you will be competent in pre-processing high-resolution image data for k-means clustering; conducting basic exploratory data analysis (EDA) and data visualization; applying a computationally efficient implementation of the k-means algorithm, Mini-Batch K-Means, to compress images; and leveraging the Jupyter widgets library to build interactive GUI components to select images from a drop-down list and pick values of k using a slider.
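The compression idea is that every pixel is replaced by the nearest of k representative colors, so only k colors plus one small index per pixel need to be stored. The sketch below shows this with plain k-means written in pure Python; it is not Mini-Batch K-Means and does not use scikit-learn, and the six-pixel "image" and the simple first-k-distinct-colors initialization are assumptions made to keep the example deterministic.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two RGB tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest(pixel, centroids):
    """Index of the centroid closest to the given pixel."""
    return min(range(len(centroids)),
               key=lambda i: squared_distance(pixel, centroids[i]))


def kmeans_colors(pixels, k, iterations=10):
    """Plain k-means on RGB tuples; returns (centroids, labels)."""
    # Deterministic start: the first k distinct colors in the image.
    centroids = []
    for pixel in pixels:
        if pixel not in centroids:
            centroids.append(pixel)
        if len(centroids) == k:
            break
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for pixel in pixels:
            clusters[nearest(pixel, centroids)].append(pixel)
        # Move each centroid to the mean color of its cluster.
        centroids = [
            tuple(sum(channel) / len(cluster) for channel in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    labels = [nearest(pixel, centroids) for pixel in pixels]
    return centroids, labels


# A flattened six-pixel "image": three reddish and three bluish pixels.
pixels = [(250, 0, 0), (0, 0, 250), (255, 10, 5),
          (5, 5, 255), (245, 5, 10), (10, 0, 245)]
centroids, labels = kmeans_colors(pixels, k=2)

# The compressed image uses one of only k colors for every pixel.
compressed = [tuple(round(c) for c in centroids[label]) for label in labels]
```

Smaller values of k give stronger compression at the cost of color fidelity, which is the trade-off the slider in the project lets you explore.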

This course runs on Coursera's hands-on project platform called Rhyme. On Rhyme, you do projects in a hands-on manner in your browser. You will get instant access to pre-configured cloud desktops containing all of the software and data you need for the project. Everything is already set up directly in your internet browser so you can just focus on learning. For this project, you’ll get instant access to a cloud desktop with Python, Jupyter, and scikit-learn pre-installed.

Notes:

- You will be able to access the cloud desktop 5 times. However, you will be able to access the instruction videos as many times as you want.

- This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Google Data Analytics Capstone: Complete a Case Study

This course is the eighth course in the Google Data Analytics Certificate. You’ll have the opportunity to complete an optional case study, which will help prepare you for the data analytics job hunt. Case studies are commonly used by employers to assess analytical skills. For your case study, you’ll choose an analytics-based scenario. You’ll then ask questions, prepare, process, analyze, visualize and act on the data from the scenario. You’ll also learn other useful job hunt skills through videos with common interview questions and responses, helpful materials to build a portfolio online, and more. Current Google data analysts will continue to instruct and provide you with hands-on ways to accomplish common data analyst tasks with the best tools and resources.

Learners who complete this certificate program will be equipped to apply for introductory-level jobs as data analysts. No previous experience is necessary.

By the end of this course, you will:

- Learn the benefits and uses of case studies and portfolios in the job search.

- Explore real world job interview scenarios and common interview questions.

- Discover how case studies can be a part of the job interview process.

- Examine and consider different case study scenarios.

- Have the chance to complete your own case study for your portfolio.


© 2024 BoostGrad | All rights reserved