
Data Analysis Courses - Page 73


Building Data Visualization Tools

The data science revolution has produced reams of new data from a wide variety of new sources. These new datasets are being used to answer new questions in ways never before conceived. Visualization remains one of the most powerful ways to draw conclusions from data, but the influx of new data types requires the development of new visualization techniques and building blocks. This course provides you with the skills for creating those new visualization building blocks. We focus on the ggplot2 framework and describe how to use and extend the system to suit the specific needs of your organization or team. Upon completing this course, learners will be able to build the tools needed to visualize a wide variety of data types and will have the fundamentals needed to address new data types as they come about.

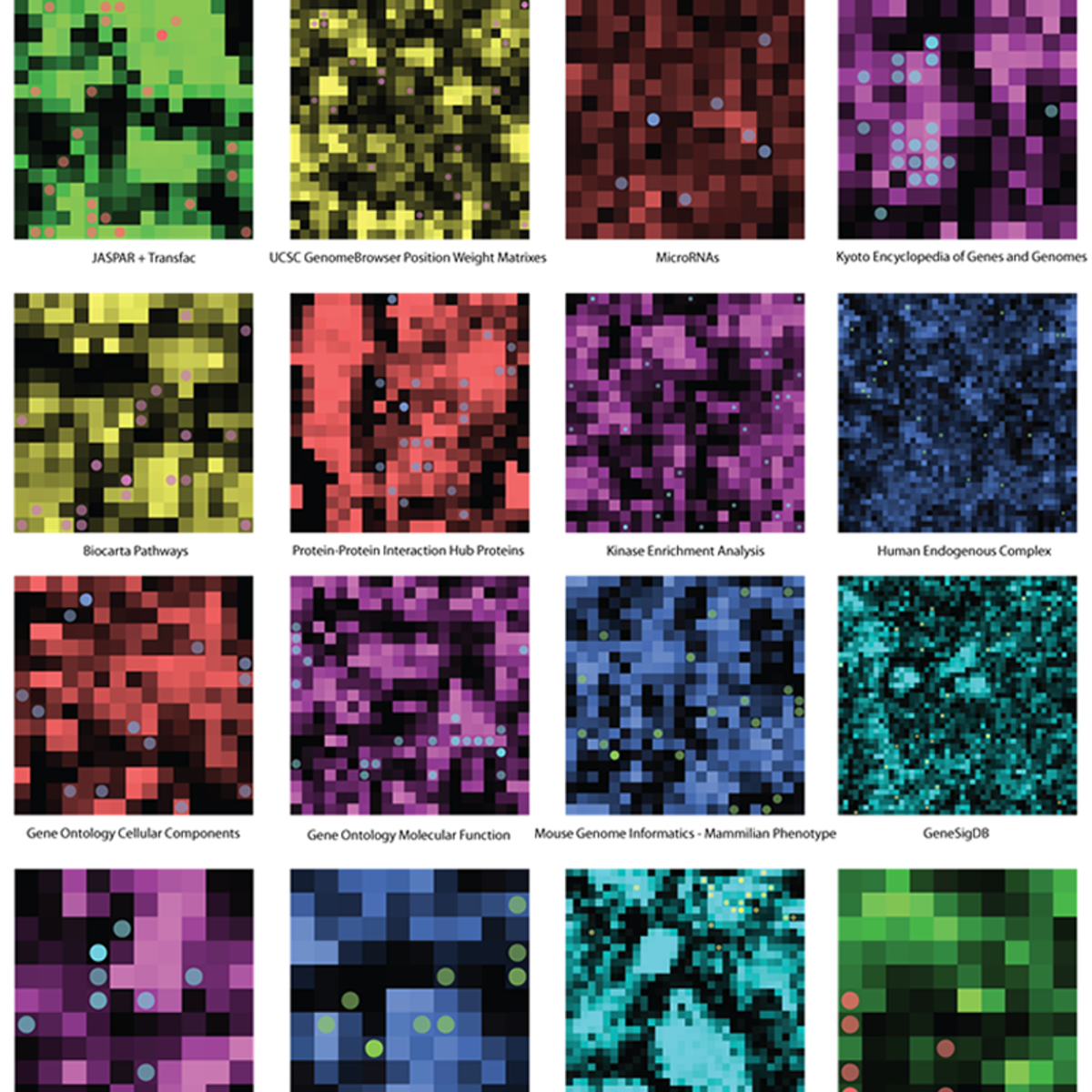

Big Data Science with the BD2K-LINCS Data Coordination and Integration Center

The Library of Integrative Network-based Cellular Signatures (LINCS) is an NIH Common Fund program. The idea is to perturb different types of human cells with many different types of perturbations, such as drugs and other small molecules; genetic manipulations such as knockdown or overexpression of single genes; manipulation of the extracellular microenvironment conditions, for example, growing cells on different surfaces; and more. These perturbations are applied to various types of human cells, including induced pluripotent stem cells from patients, differentiated into various lineages such as neurons or cardiomyocytes. Then, to better understand the molecular networks that are affected by these perturbations, changes in the levels of many different variables are measured, including mRNAs, proteins, and metabolites, as well as cellular phenotypic changes such as changes in cell morphology. The BD2K-LINCS Data Coordination and Integration Center (DCIC) is commissioned to organize, analyze, visualize and integrate this data with other publicly available relevant resources. In this course we briefly introduce the DCIC and the various Centers that collect data for LINCS. We then cover metadata and how metadata is linked to ontologies. We then present data processing and normalization methods to clean and harmonize LINCS data. This is followed by a discussion of how the data is served through RESTful APIs. Most importantly, the course covers computational methods including data clustering, gene-set enrichment analysis, interactive data visualization, and supervised learning. Finally, we introduce crowdsourcing/citizen-science projects where students can work together in teams to extract expression signatures from public databases and then query such collections of signatures against LINCS data for predicting small molecules as potential therapeutics.

Detect Fake News in Python with Tensorflow

"Fake News" is a term used to mean different things to different people. At its heart, we define "fake news" as any news story that is false: the article itself is fabricated without verifiable evidence, citations or quotations. Often these stories may be lies and propaganda that are deliberately intended to confuse the viewer, or may be characterized as "click-bait" written for monetary incentives (the writer profits from the number of people who click on the story). In recent years, fake news stories have proliferated via social media, partially because they are so readily and widely spread online. Worse yet, Artificial Intelligence and natural language processing, or NLP, technology is ushering in an era of artificially generated fake news. Both types of fake news are detectable with the use of NLP and deep learning.

In this project, you will learn multiple computational methods of identifying and classifying Fake News.

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Excel Regression Models for Business Forecasting

This course allows learners to explore Regression Models and use them for business forecasting. Unlike Time Series Models, Regression Models are causal models, where we identify certain variables in our business that influence other variables. Regressions model this causality, and we can then use these models to forecast and plan for our business's needs. We will explore simple regression models, multiple regression models, dummy variable regressions, seasonal variable regressions, as well as autoregressions. Each of these is a different form of regression model, tailored to unique business scenarios, in order to forecast and generate business intelligence for organisations.
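To make the idea of a dummy variable regression concrete, here is a minimal sketch in Python (the course itself works in Excel). It fits a regression with one numeric driver and one 0/1 dummy by ordinary least squares, using only the standard library. The data, the variable names `ad_spend` and `promo`, and the helper `fit_ols` are hypothetical and chosen purely for illustration.

```python
def fit_ols(rows, y):
    """Fit y = b0 + b1*x1 + b2*x2 + ... by solving the normal equations.

    rows: list of predictor tuples; y: list of observed outcomes.
    Returns the coefficients [b0, b1, b2, ...] (intercept first).
    """
    X = [[1.0] + [float(v) for v in r] for r in rows]  # prepend intercept column
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * yi for x, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        pivot = A[c][c]
        A[c] = [v / pivot for v in A[c]]
        for r in range(k):
            if r != c:
                f = A[r][c]
                A[r] = [vr - f * vc for vr, vc in zip(A[r], A[c])]
    return [A[i][k] for i in range(k)]


# Hypothetical history: (ad_spend, promo) per period, where promo is a
# dummy variable that is 1 when a promotion ran and 0 otherwise.
history = [(1, 0), (2, 1), (3, 0), (4, 1), (5, 0)]
sales = [12, 19, 16, 23, 20]

coef = fit_ols(history, sales)

# Forecast the next period: ad_spend = 6 with a promotion running.
forecast = coef[0] + coef[1] * 6 + coef[2] * 1
print([round(c, 3) for c in coef], round(forecast, 3))
```

The coefficient on `promo` is read as the average lift in sales in promotion periods, holding `ad_spend` fixed. Seasonal variable regressions follow the same pattern with one dummy per season.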

Introduction to R Programming for Data Science

When working in the data science field you will definitely become acquainted with the R language and the role it plays in data analysis. This course introduces you to the basics of the R language such as data types, techniques for manipulation, and how to implement fundamental programming tasks.

You will begin the process of understanding common data structures, programming fundamentals and how to manipulate data all with the help of the R programming language.

The emphasis in this course is on hands-on, practical learning. You will write a simple program using RStudio, manipulate data in a data frame or matrix, and complete a final project as a data analyst using Watson Studio and Jupyter notebooks to acquire and analyze data-driven insights.

No prior knowledge of R or programming is required.

Evaluate Machine Learning Models with Yellowbrick

Welcome to this project-based course on Evaluating Machine Learning Models with Yellowbrick. In this course, we are going to use visualizations to steer our machine learning workflow. The problem we will tackle is to predict whether rooms in apartments are occupied or unoccupied based on passive sensor data such as temperature, humidity, light and CO2 levels. We will build a logistic regression model for binary classification. This is a continuation of the course on Room Occupancy Detection. With an emphasis on visual steering of our analysis, we will cover the following topics in our machine learning workflow: model evaluation with ROC/AUC plots, confusion matrices, cross-validation scores, and setting discrimination thresholds for logistic regression models.

This course runs on Coursera's hands-on project platform called Rhyme. On Rhyme, you do projects in a hands-on manner in your browser. You will get instant access to pre-configured cloud desktops containing all of the software and data you need for the project. Everything is already set up directly in your internet browser so you can just focus on learning. For this project, you’ll get instant access to a cloud desktop with Python, Jupyter, Yellowbrick, and scikit-learn pre-installed.

Notes:

- You will be able to access the cloud desktop 5 times. However, you will be able to access the instruction videos as many times as you want.

- This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.
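The evaluation topics listed for this course (confusion matrices, ROC/AUC, and discrimination thresholds) can be illustrated without any plotting library. The sketch below is not Yellowbrick or scikit-learn code; it is a plain standard-library Python illustration, and the labels, the predicted probabilities, and the helper names `confusion_matrix` and `roc_auc` are hypothetical.

```python
def confusion_matrix(y_true, scores, threshold=0.5):
    """Count TP/FP/TN/FN when predicting 1 for scores >= threshold."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for y, s in zip(y_true, scores):
        pred = 1 if s >= threshold else 0
        if pred == 1:
            counts["tp" if y == 1 else "fp"] += 1
        else:
            counts["fn" if y == 1 else "tn"] += 1
    return counts


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outranks a random
    negative, counting ties as one half."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Hypothetical data: 1 = room occupied, 0 = unoccupied, with the
# probabilities a logistic regression model might assign.
y_true = [0, 0, 1, 1, 0, 1, 1, 0]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9, 0.6, 0.55]

auc = roc_auc(y_true, scores)
cm_default = confusion_matrix(y_true, scores, threshold=0.5)
cm_lower = confusion_matrix(y_true, scores, threshold=0.3)

print(auc)         # 0.875
print(cm_default)  # {'tp': 3, 'fp': 1, 'tn': 3, 'fn': 1}
print(cm_lower)    # {'tp': 4, 'fp': 2, 'tn': 2, 'fn': 0}
```

Lowering the threshold from 0.5 to 0.3 removes the missed occupied room at the cost of one more false alarm. That trade-off is what a discrimination threshold setting controls, while the AUC stays the same because it does not depend on any single threshold.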

Inventory Management

Inventory is a strategic asset for organizations. The effective management of inventory can minimize a company’s spending while dramatically increasing its profit. In this course, we will explore how to use data science to manage inventory in uncertain environments, how to set inventory levels based on customer service requirements, and how to calculate inventory for products that have short sales cycles.
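As one illustration of setting inventory levels from a customer service requirement, the sketch below computes a reorder point from a target service level. It uses a common textbook formula, not necessarily the method taught in the course, and assumes independent, normally distributed daily demand and a fixed lead time. The function name `reorder_point` and all of the numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def reorder_point(mean_daily, sd_daily, lead_time_days, service_level):
    """Return (reorder_point, safety_stock).

    Assumes daily demand is independent and normally distributed, and
    that the lead time is fixed. service_level is the target probability
    of not stocking out during the lead time (strictly between 0 and 1).
    """
    z = NormalDist().inv_cdf(service_level)  # standard normal quantile
    safety_stock = z * sd_daily * sqrt(lead_time_days)
    expected_demand = mean_daily * lead_time_days
    return expected_demand + safety_stock, safety_stock


# Hypothetical product: 100 units/day on average, standard deviation 20,
# 4-day replenishment lead time, 95% target service level.
rop, safety = reorder_point(100, 20, 4, 0.95)
print(round(rop, 1), round(safety, 1))
```

Raising the service level increases the safety stock, and the increase gets steeper as the target approaches 100%, which is why the service requirement is usually set per product rather than uniformly.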

Translate Text with the Cloud Translation API

This is a self-paced lab that takes place in the Google Cloud console.

The Cloud Translation API allows you to translate an arbitrary string into any supported language. In this hands-on lab you’ll learn how to use the Cloud Translation API to translate text and to detect the language of text when it is unknown.

Exploring Dataset Metadata Between Projects with Data Catalog

This is a self-paced lab that takes place in the Google Cloud console. In this lab, you will explore existing datasets with Data Catalog and mine the table and column metadata for insights.

Using Advanced Formulas and Functions in Excel

In this project, you will learn advanced formulas and functions in Excel and use them to perform analysis on several different topics. First, we will review basic formulas and functions and take a tour through the many choices in the Excel Formulas tab. Then we will explore the advanced financial formulas and functions, followed by logic and text functions. Last, we will learn several ways to perform lookup and reference queries. Throughout the project, you will work through examples that show you how to apply the formulas and functions you have learned.


© 2024 BoostGrad | All rights reserved