Data Analysis Courses

Extract Text Data with Python and Regex

By the end of this project, you will understand what regular expressions are and how they work. During the project, we will cover basic to advanced regex concepts by formatting phone numbers, email addresses, and URLs. After that, we will learn how to use regular expressions for data cleaning. Finally, in the last task, we will work with a dataset of daily personal notes and use regex to pull useful information out of the raw text.
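
The project's own notebooks are not reproduced here. As a minimal sketch, assuming simplified North-American phone formats and deliberately loose email and URL patterns (not full validators), Python's standard `re` module can pull these items out of raw text, normalize the phone numbers, and collapse messy whitespace. The sample note and the names `extract` and `clean` are hypothetical.

```python
import re

# Hypothetical raw note text, invented for illustration.
NOTE = (
    "Call Anna at 555-123-4567 or (555) 987-6543. "
    "Email: anna.smith@example.com, backup: a_smith99@mail.example.org. "
    "Docs at https://example.com/docs and http://www.example.org/page?id=3."
)

# Assumed phone format: optional parentheses around a 3-digit area code,
# then space/dot/hyphen separators between the digit groups.
PHONE_RE = re.compile(r"\(?(\d{3})\)?[\s.-]?(\d{3})[\s.-](\d{4})")
# Loose email pattern: local part, "@", then one or more dot-separated labels.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Loose URL pattern: scheme, then everything up to whitespace or a comma.
URL_RE = re.compile(r"https?://[^\s,]+")


def clean(text):
    """Data-cleaning step: collapse runs of whitespace into single spaces."""
    return re.sub(r"\s+", " ", text).strip()


def extract(text):
    """Return (phones, emails, urls) found in the text."""
    # findall returns one tuple of groups per phone match; reformat uniformly.
    phones = [f"({a}) {b}-{c}" for a, b, c in PHONE_RE.findall(text)]
    emails = EMAIL_RE.findall(text)
    # Drop sentence punctuation that the loose URL pattern swallows at the end.
    urls = [u.rstrip(".,") for u in URL_RE.findall(text)]
    return phones, emails, urls
```

Usage: `extract(clean(NOTE))` returns the two phone numbers in the same `(555) 123-4567` style, the two email addresses, and the two URLs without trailing periods.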

BigQuery Soccer Data Ingestion

This is a self-paced lab that takes place in the Google Cloud console. Get started with sports data science by importing soccer data on matches, teams, players, and match events into BigQuery tables.

Data comes in multiple formats, and BigQuery makes working with multiple data sources simple. In this lab you will get started with sports data science by importing external sports data sources into BigQuery tables. This will give you the basis for building more sophisticated analytics in subsequent labs.

Process Mining: Data science in Action

Process mining is the missing link between model-based process analysis and data-oriented analysis techniques. Through concrete data sets and easy-to-use software, the course provides data science knowledge that can be applied directly to analyze and improve processes in a variety of domains.

Data science is the profession of the future, because organizations that are unable to use (big) data in a smart way will not survive. It is not sufficient to focus on data storage and data analysis. The data scientist also needs to relate data to process analysis. Process mining bridges the gap between traditional model-based process analysis (e.g., simulation and other business process management techniques) and data-centric analysis techniques such as machine learning and data mining. Process mining seeks the confrontation between event data (i.e., observed behavior) and process models (hand-made or discovered automatically). This technology has become available only recently, but it can be applied to any type of operational process (in organizations and systems). Example applications include analyzing treatment processes in hospitals, improving customer service processes in a multinational, understanding the browsing behavior of customers using a booking site, analyzing failures of a baggage handling system, and improving the user interface of an X-ray machine. All of these applications have in common that dynamic behavior needs to be related to process models. Hence, we refer to this as "data science in action".

The course explains the key analysis techniques in process mining. Participants will learn various process discovery algorithms. These can be used to automatically learn process models from raw event data. Various other process analysis techniques that use event data will be presented. Moreover, the course will provide easy-to-use software, real-life data sets, and practical skills to directly apply the theory in a variety of application domains.

This course starts with an overview of approaches and technologies that use event data to support decision making and business process (re)design. Then the course focuses on process mining as a bridge between data mining and business process modeling. The course is at an introductory level with various practical assignments.

The course covers the three main types of process mining.

1. The first type of process mining is discovery. A discovery technique takes an event log and produces a process model without using any a-priori information. An example is the Alpha-algorithm that takes an event log and produces a process model (a Petri net) explaining the behavior recorded in the log.

2. The second type of process mining is conformance. Here, an existing process model is compared with an event log of the same process. Conformance checking can be used to check if reality, as recorded in the log, conforms to the model and vice versa.

3. The third type of process mining is enhancement. Here, the idea is to extend or improve an existing process model using information about the actual process recorded in some event log. Whereas conformance checking measures the alignment between model and reality, this third type of process mining aims at changing or extending the a-priori model. An example is the extension of a process model with performance information, e.g., showing bottlenecks.

Process mining techniques can be used in an offline setting, but also online. The latter is known as operational support. An example is the detection of non-conformance at the moment the deviation actually takes place. Another example is time prediction for running cases: given a partially executed case, the remaining processing time is estimated based on historic information about similar cases.
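
Discovery and conformance can be illustrated together on a toy log. The following is a minimal, unoptimized sketch (not the course's software): it computes the Alpha-algorithm's maximal place pairs from the directly-follows relation, wires them into a Petri net with an assumed source place `i` and sink place `o`, and then replays traces with token-based replay, scoring fitness as ½(1 − missing/consumed) + ½(1 − remaining/produced). The function names and the example log are hypothetical, and the replay assumes every activity in a trace is a transition of the net.

```python
from collections import Counter
from itertools import combinations


def alpha_places(log):
    """Return start activities, end activities, and maximal (A, B) place pairs."""
    activities = sorted({a for trace in log for a in trace})
    starts = {trace[0] for trace in log}
    ends = {trace[-1] for trace in log}
    # Directly-follows relation: (x, y) means y immediately follows x in some trace.
    follows = {(t[k], t[k + 1]) for t in log for k in range(len(t) - 1)}

    def causal(x, y):  # x -> y
        return (x, y) in follows and (y, x) not in follows

    def unrelated(x, y):  # x # y (also applied to x == y, which excludes self-loops)
        return (x, y) not in follows and (y, x) not in follows

    # Non-empty activity sets whose members are pairwise unrelated.
    subsets = [
        frozenset(c)
        for r in range(1, len(activities) + 1)
        for c in combinations(activities, r)
        if all(unrelated(x, y) for x in c for y in c)
    ]
    pairs = [(A, B) for A in subsets for B in subsets
             if all(causal(a, b) for a in A for b in B)]
    # Keep only maximal pairs: no other pair contains both A and B.
    maximal = [(A, B) for A, B in pairs
               if not any(A <= A2 and B <= B2 and (A, B) != (A2, B2)
                          for A2, B2 in pairs)]
    return starts, ends, maximal


def to_net(starts, ends, places):
    """Map each transition to (input places, output places)."""
    activities = set(starts) | set(ends) | {a for A, B in places for a in A | B}
    net = {}
    for a in activities:
        inputs = (["i"] if a in starts else []) + [p for p in places if a in p[1]]
        outputs = (["o"] if a in ends else []) + [p for p in places if a in p[0]]
        net[a] = (inputs, outputs)
    return net


def replay(net, trace, start="i", end="o"):
    """Token-based replay fitness of one trace on the net."""
    marking = Counter({start: 1})
    produced, consumed, missing = 1, 0, 0  # the initial token counts as produced
    for activity in trace:
        inputs, outputs = net[activity]
        for place in inputs:
            if marking[place] == 0:  # token not available: record it as missing
                missing += 1
                marking[place] += 1
            marking[place] -= 1
            consumed += 1
        for place in outputs:
            marking[place] += 1
            produced += 1
    # Consume the token expected in the sink place at the end of the case.
    if marking[end] == 0:
        missing += 1
        marking[end] += 1
    marking[end] -= 1
    consumed += 1
    remaining = sum(marking.values())
    return 0.5 * (1 - missing / consumed) + 0.5 * (1 - remaining / produced)
```

For the log `abcd`, `acbd`, `aed`, this yields places ({a},{b,e}), ({a},{c,e}), ({b,e},{d}), and ({c,e},{d}). Replaying `abcd` gives fitness 1.0, while `abd` (which skips `c`) leaves one token behind and misses one, giving 0.8.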

Process mining provides not only a bridge between data mining and business process management; it also helps to address the classical divide between "business" and "IT". Evidence-based business process management based on process mining helps to create a common ground for business process improvement and information systems development.

The course uses many examples based on real-life event logs to illustrate the concepts and algorithms. After taking this course, participants will be able to run process mining projects and will have a good understanding of the Business Process Intelligence field.

After taking this course you should:

- have a good understanding of Business Process Intelligence techniques (in particular process mining),

- understand the role of Big Data in today’s society,

- be able to relate process mining techniques to other analysis techniques such as simulation, business intelligence, data mining, machine learning, and verification,

- be able to apply basic process discovery techniques to learn a process model from an event log (both manually and using tools),

- be able to apply basic conformance checking techniques to compare event logs and process models (both manually and using tools),

- be able to extend a process model with information extracted from the event log (e.g., show bottlenecks),

- have a good understanding of the data needed to start a process mining project,

- be able to characterize the questions that can be answered based on such event data,

- explain how process mining can also be used for operational support (prediction and recommendation), and

- be able to conduct process mining projects in a structured manner.
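
The outcome about extending a process model with information from the event log (e.g., showing bottlenecks) can be sketched in a few lines. This is a minimal illustration, assuming an event log of (case id, activity, timestamp) rows: each case is sorted by time, the waiting time on every directly-follows edge is averaged in hours, and the slowest edge is flagged as a bottleneck candidate. The log rows and the name `edge_durations` are hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical event log rows: (case id, activity, ISO timestamp).
EVENTS = [
    ("c1", "register", "2024-01-01T09:00"),
    ("c1", "check", "2024-01-01T10:00"),
    ("c1", "decide", "2024-01-02T10:00"),
    ("c2", "register", "2024-01-01T11:00"),
    ("c2", "check", "2024-01-01T11:30"),
    ("c2", "decide", "2024-01-01T23:30"),
]


def edge_durations(events):
    """Average hours between consecutive activities, per (from, to) edge."""
    by_case = defaultdict(list)
    for case, activity, ts in events:
        by_case[case].append((datetime.fromisoformat(ts), activity))
    hours = defaultdict(list)
    for trace in by_case.values():
        trace.sort()  # order each case's events by timestamp
        for (t1, a), (t2, b) in zip(trace, trace[1:]):
            hours[(a, b)].append((t2 - t1).total_seconds() / 3600)
    return {edge: sum(h) / len(h) for edge, h in hours.items()}
```

Usage: `avg = edge_durations(EVENTS)` gives 0.75 hours for `register -> check` and 18.0 hours for `check -> decide`, so `max(avg, key=avg.get)` points at the decision step as the bottleneck.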

Microsoft Power Platform Fundamentals

In this course, you will learn the business value and product capabilities of Power Platform. You will create simple Power Apps, connect data with Microsoft Dataverse, build a Power BI Dashboard, automate a process with Power Automate, and build a chatbot with Power Virtual Agents.

By the end of this course, you will be able to:

• Describe the business value of Power Platform

• Identify the core components of Power Platform

• Demonstrate the capabilities of Power BI

• Describe the capabilities of Power Apps

• Demonstrate the business value of Power Virtual Agents

Microsoft's Power Platform is not just for programmers and specialist technologists. If you, or the company that you work for, aspire to improve productivity by automating business processes, analyzing data to produce business insights, and acting more effectively by creating simple app experiences or even chatbots, this is the right course to kick-start your career and learn amazing skills.

Microsoft Power Platform Fundamentals will act as a bedrock of fundamental knowledge to prepare you for the Microsoft Certification: Power Platform Fundamentals - PL-900 Exam. You will be able to demonstrate your real-world knowledge of the fundamentals of Microsoft Power Platform.

This course can accelerate your progress and give your career a boost, as you use your Microsoft skills to improve your team’s productivity.

Python for Data Analysis: Pandas & NumPy

In this hands-on project, we will learn the fundamentals of data analysis in Python and leverage the power of two important Python libraries, NumPy and pandas. NumPy and pandas are two of the most widely used Python libraries in data science. They offer high-performance, easy-to-use data structures and data analysis tools.
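
As a small taste of how the two libraries divide the work, the following sketch (with invented sales figures, not the project's dataset) uses a NumPy array for vectorized arithmetic and a pandas DataFrame for labeled columns and a group-by aggregation.

```python
import numpy as np
import pandas as pd

# Hypothetical sales data for illustration only.
prices = np.array([2.5, 4.0, 3.0, 5.5])
quantities = np.array([10, 3, 7, 2])

# NumPy: element-wise multiplication without an explicit Python loop.
revenue = prices * quantities

# pandas: the same data as labeled columns, then a per-store total.
df = pd.DataFrame({
    "store": ["A", "B", "A", "B"],
    "price": prices,
    "quantity": quantities,
})
df["revenue"] = df["price"] * df["quantity"]
summary = df.groupby("store")["revenue"].sum()
```

Here `revenue` is `[25.0, 12.0, 21.0, 11.0]`, and `summary` totals 46.0 for store A and 23.0 for store B.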

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Supply Chain Analytics Essentials

Welcome to Supply Chain Analytics - an exciting area that is in high demand!

In this introductory course to Supply Chain Analytics, I will take you on a journey into this fascinating area where supply chain management meets data analytics. You will see real-life examples of how analytics can be applied to various domains of a supply chain, from selling to logistics, production, and sourcing, to generate significant social and economic impact. You will also learn about job market trends, job requirements, and how to prepare. Lastly, you will master a job intelligence tool to find preferred jobs by region, industry, and company.

Upon completing this course, you will:

1. Understand why analytics is critical to supply chain management and its financial / economic impact.

2. See the pain points of a supply chain and how analytics may relieve them.

3. Learn about supply chain analytics job opportunities, and use a job intelligence tool to make data-driven career decisions.

I hope you enjoy the course!

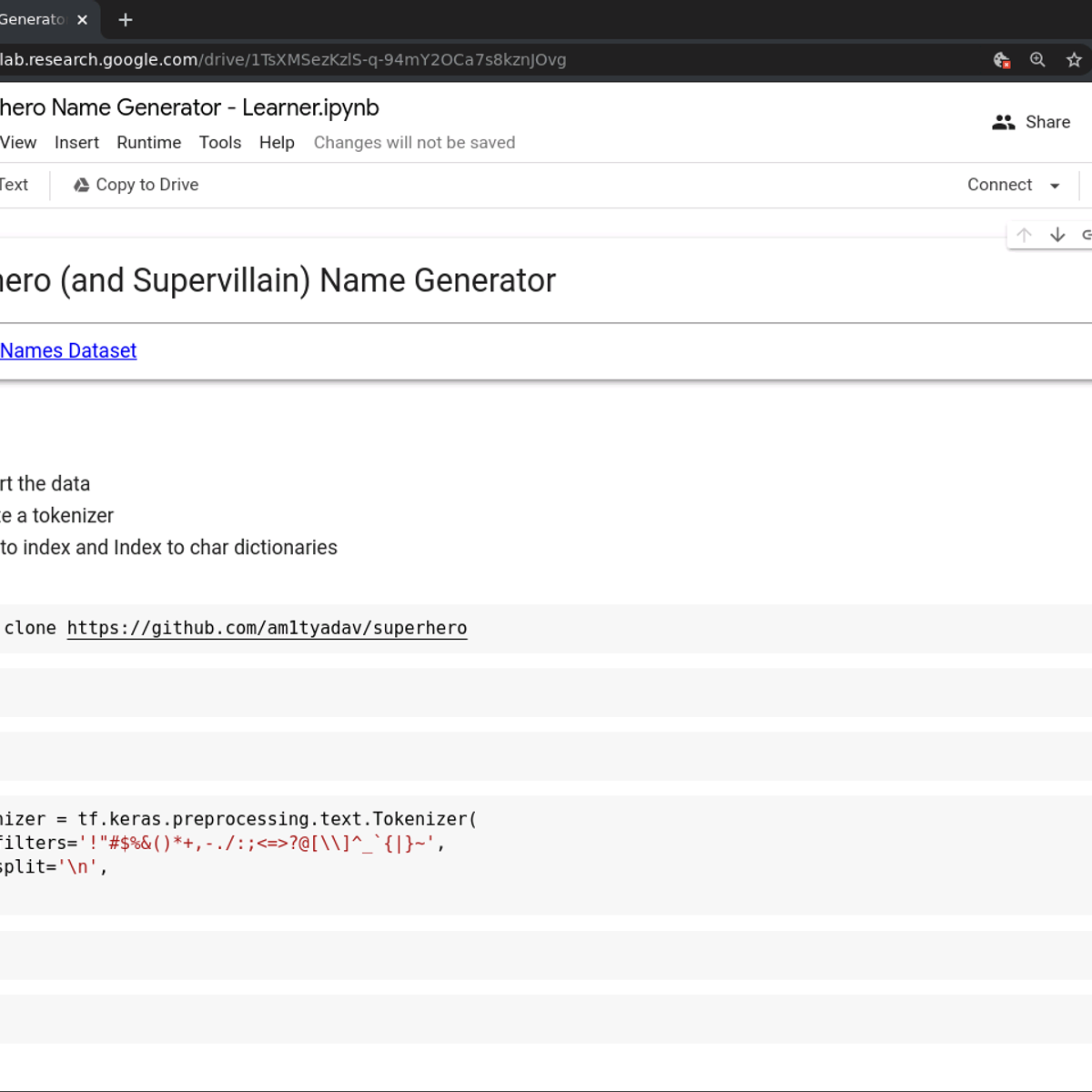

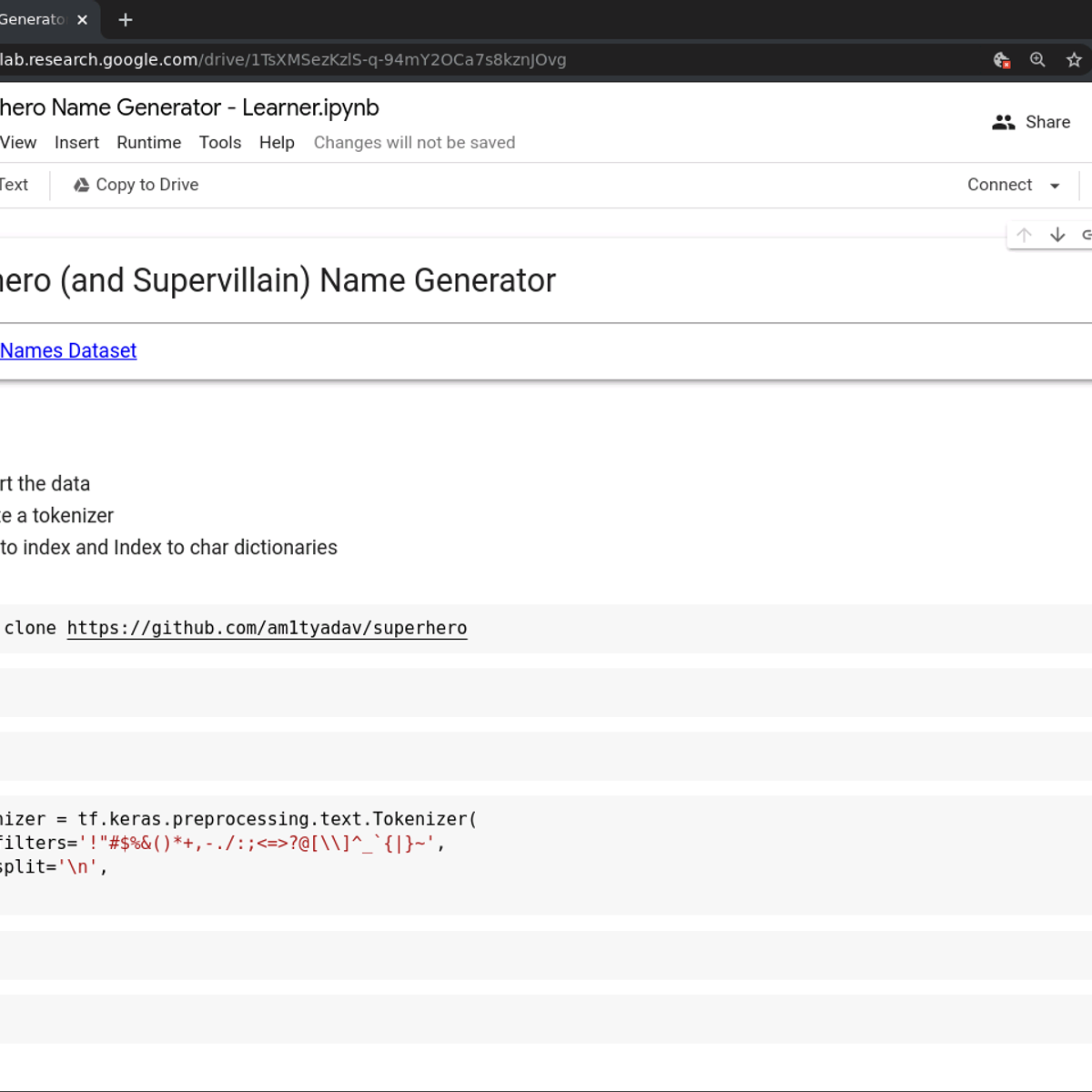

Create a Superhero Name Generator with TensorFlow

In this guided project, we are going to create a neural network and train it on a small dataset of superhero names to learn to generate similar names. The dataset has over 9000 names of superheroes, supervillains and other fictional characters from a number of different comic books, TV shows and movies.

Text generation is a common natural language processing task. We will create a character-level language model that predicts the next character for a given input sequence. To get a new predicted superhero name, we will need to give our model a seed input, which can be a single character or a sequence of characters. The model will then generate the next character that it predicts should come after the input sequence. This character is then added to the seed input to create a new input, which is used again to generate the next character, and so on.
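
The course builds this loop around a TensorFlow recurrent network. To keep the seed-and-feed-back loop itself visible without a trained model, this sketch swaps in a much simpler stand-in: a character bigram counter that looks only at the last character and picks its most frequent follower (greedy decoding). The tab end-of-name marker, the function names, and the training names are hypothetical; only the generation loop mirrors the description above.

```python
from collections import Counter, defaultdict

END = "\t"  # hypothetical end-of-name marker appended to each training name


def train_bigram(names):
    """Count which character follows which (stand-in for training a network)."""
    counts = defaultdict(Counter)
    for name in names:
        padded = name + END
        for prev, nxt in zip(padded, padded[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(model, sequence):
    """Stand-in for model.predict: greedy choice based on the last character."""
    followers = model.get(sequence[-1])  # .get does not create new entries
    if not followers:
        return END
    return followers.most_common(1)[0][0]


def generate(model, seed, max_len=20):
    """Repeatedly predict a character and append it to the input."""
    text = seed
    while len(text) < max_len:
        nxt = predict_next(model, text)
        if nxt == END:
            break
        text += nxt  # the prediction becomes part of the next input
    return text
```

With `model = train_bigram(["batman", "batgirl", "catwoman"])`, `generate(model, "gir")` returns `"girl"`. Greedy decoding on such a small model can cycle, which is why `max_len` caps the output; sampling from the predicted distribution is a common alternative.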

You will need prior programming experience in Python. Some experience with TensorFlow is recommended. This is a practical, hands-on guided project for learners who already have a theoretical understanding of Neural Networks, Recurrent Neural Networks, and optimization algorithms like gradient descent, but want to understand how to use TensorFlow to start performing natural language processing tasks like text classification or text generation.

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Basic Data Processing and Visualization

This is the first course in the four-course specialization Python Data Products for Predictive Analytics, introducing the basics of reading and manipulating datasets in Python. In this course, you will learn what a data product is and go through several Python libraries to perform data retrieval, processing, and visualization.

This course will introduce you to the field of data science and prepare you for the next three courses in the Specialization: Design Thinking and Predictive Analytics for Data Products, Meaningful Predictive Modeling, and Deploying Machine Learning Models. At each step in the specialization, you will gain hands-on experience in data manipulation and build your skills, eventually culminating in a capstone project encompassing all the concepts taught in the specialization.

Foundations of mining non-structured medical data

The goal of this course is to understand the foundations of Big Data, the data being generated in the health domain, and how technology can help integrate and exploit all that data to extract meaningful information. This information can later be used across different sectors of the health domain, from physicians to management and from patients to caregivers.

The course offers a high-level perspective on the importance of the medical context within the European context, the types of data managed in the health (clinical) context, and the challenges to be addressed in mining unstructured medical data (text and images), as well as the opportunities from an analytical point of view, with an introduction to the basics of the data analytics field.

Create Mapping Data Flows in Azure Data Factory

In this 1-hour project-based course, we'll learn to create a mapping data flow in Azure Data Factory. First, we'll create an Azure data factory in the Azure portal. Then we'll create an Azure storage account so that we can store the source data in blob containers. We'll learn to configure the source and sink transformations, and to work with basic data flow transformations such as select, filter, sort, join, derived column, and conditional split. We'll build a simple mapping data flow in Azure Data Factory, and we'll also learn to create and combine multiple streams of data in mapping data flows. Finally, we'll store the transformed data in the destination.
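
Azure Data Factory configures these transformations visually, so the following is not ADF code or its data flow script. It is only a plain-Python analogy, under invented sample data, of what each named transformation does to rows flowing from a source to a sink: select, filter, join, derived column, sort, and conditional split. The column names, the 1.2 tax factor, and the 100 threshold are hypothetical.

```python
# Hypothetical source rows, standing in for files in a blob container.
ORDERS = [
    {"order_id": 1, "customer_id": 10, "amount": 120.0, "internal_note": "x"},
    {"order_id": 2, "customer_id": 11, "amount": 35.0, "internal_note": "y"},
    {"order_id": 3, "customer_id": 10, "amount": 0.0, "internal_note": "z"},
]
CUSTOMERS = [
    {"customer_id": 10, "country": "US"},
    {"customer_id": 11, "country": "DE"},
]


def run_flow(orders, customers):
    # Select: keep only the columns the sink needs.
    rows = [{k: r[k] for k in ("order_id", "customer_id", "amount")} for r in orders]
    # Filter: drop zero-amount orders.
    rows = [r for r in rows if r["amount"] > 0]
    # Join (inner): attach customer attributes on customer_id.
    by_id = {c["customer_id"]: c for c in customers}
    rows = [{**r, **by_id[r["customer_id"]]} for r in rows if r["customer_id"] in by_id]
    # Derived column: add a computed field (hypothetical tax factor).
    rows = [{**r, "amount_with_tax": round(r["amount"] * 1.2, 2)} for r in rows]
    # Sort: largest amounts first.
    rows.sort(key=lambda r: r["amount"], reverse=True)
    # Conditional split: route rows into separate output streams.
    large = [r for r in rows if r["amount"] >= 100]
    small = [r for r in rows if r["amount"] < 100]
    return large, small
```

Each output list plays the role of a separate sink; in the course, these streams would instead be written back to storage by sink transformations.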

You must already have an Azure account before starting.

© 2024 BoostGrad | All rights reserved