Data Analysis Courses - Page 67

Reproducible Research

This course focuses on the concepts and tools behind reporting modern data analyses in a reproducible manner. Reproducible research is the idea that data analyses, and more generally, scientific claims, are published with their data and software code so that others may verify the findings and build upon them. The need for reproducibility is increasing dramatically as data analyses become more complex, involving larger datasets and more sophisticated computations. Reproducibility allows people to focus on the actual content of a data analysis rather than on superficial details reported in a written summary. In addition, reproducibility makes an analysis more useful to others because the data and code that actually conducted the analysis are available. The course centers on literate statistical analysis tools, which let you publish a data analysis as a single document that others can easily execute to obtain the same results.

Creating a Data Warehouse Through Joins and Unions

This is a self-paced lab that takes place in the Google Cloud console. This lab focuses on how to create new reporting tables using SQL JOINs and UNIONs.
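To make the JOIN/UNION idea concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module in place of BigQuery. The table names (`orders_east`, `orders_west`, `products`, `order_report`) and their rows are hypothetical. `UNION ALL` stacks rows from tables with matching columns, and the `JOIN` then enriches each stacked row from a lookup table. (BigQuery itself requires `UNION ALL` or `UNION DISTINCT` to be spelled out.)

```python
import sqlite3


def build_reporting_table(conn):
    """Build a hypothetical reporting table with a UNION ALL followed by a JOIN."""
    cur = conn.cursor()
    # Hypothetical source tables: two regional order tables and a product lookup.
    cur.executescript("""
        CREATE TABLE orders_east (order_id INTEGER, product_id INTEGER, qty INTEGER);
        CREATE TABLE orders_west (order_id INTEGER, product_id INTEGER, qty INTEGER);
        CREATE TABLE products (product_id INTEGER PRIMARY KEY, name TEXT);
        INSERT INTO orders_east VALUES (1, 10, 2), (2, 20, 1);
        INSERT INTO orders_west VALUES (3, 10, 5);
        INSERT INTO products VALUES (10, 'widget'), (20, 'gadget');
    """)
    # UNION ALL stacks the regional rows (tagging each with its region);
    # the JOIN attaches the product name to every stacked row.
    cur.execute("""
        CREATE TABLE order_report AS
        SELECT o.order_id, o.region, p.name AS product, o.qty
        FROM (
            SELECT order_id, product_id, qty, 'east' AS region FROM orders_east
            UNION ALL
            SELECT order_id, product_id, qty, 'west' AS region FROM orders_west
        ) AS o
        JOIN products AS p ON p.product_id = o.product_id
    """)
    return cur.execute("SELECT * FROM order_report ORDER BY order_id").fetchall()


conn = sqlite3.connect(":memory:")
rows = build_reporting_table(conn)
print(rows)
```

Stacking first and joining once keeps the lookup logic in a single place, rather than repeating the JOIN for every regional table.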

Getting Started with Spatial Analysis in GeoDa

By the end of this project, learners will know how to get started with GeoDa for spatial analysis: how to access and download the software, how to import multiple layers, and how GeoDa works at a basic level. Spatial analysis, as a type of data analysis, has become increasingly important. Its beginnings are often traced back to John Snow’s cholera outbreak maps from the mid-1800s. In 2003, Dr. Luc Anselin, now at the University of Chicago, and his team developed GeoDa as free software that digitizes traditional pin maps. Today, it is used in many fields for city and infrastructure planning, crime mapping, emergency management, and visualizing finds at archaeological sites.

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

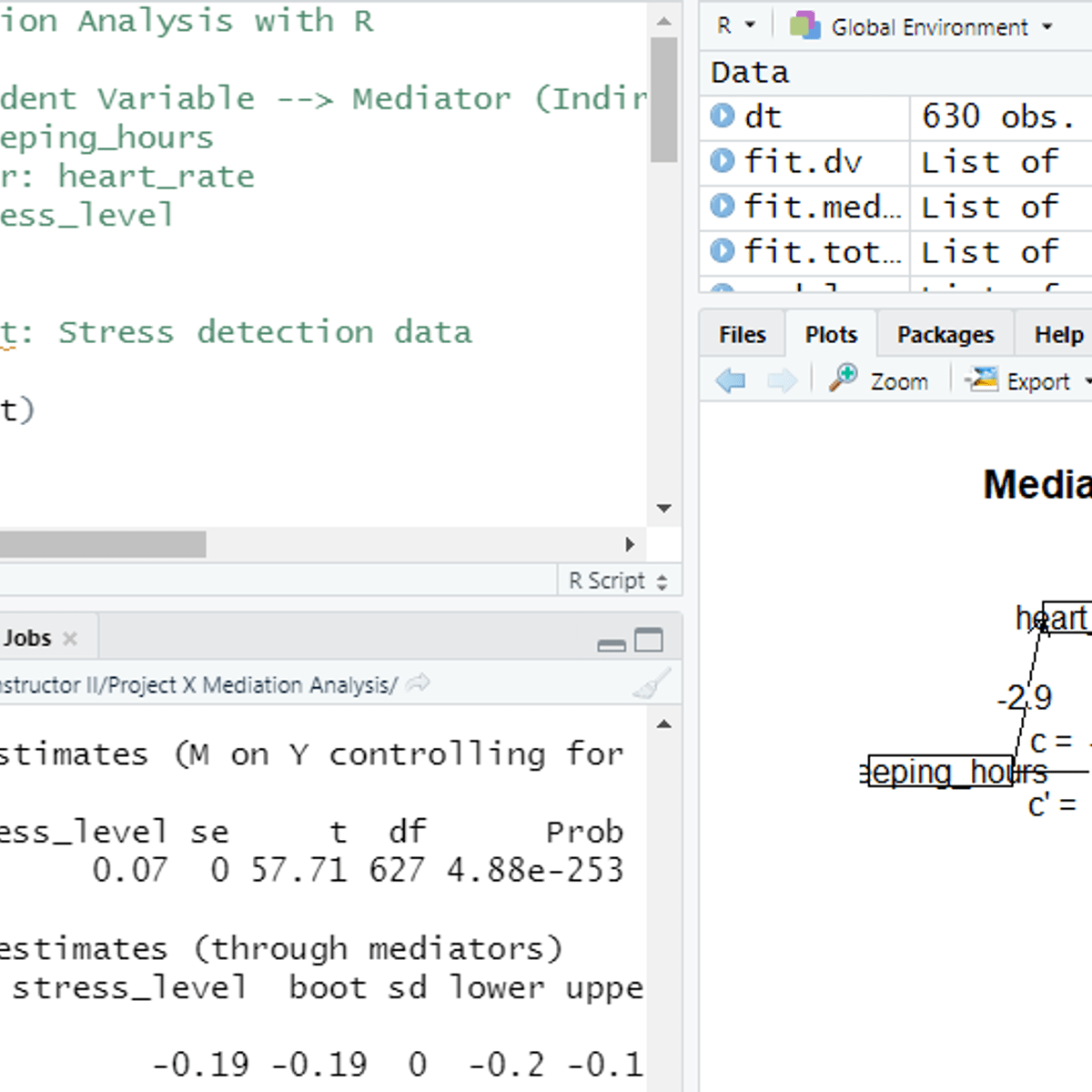

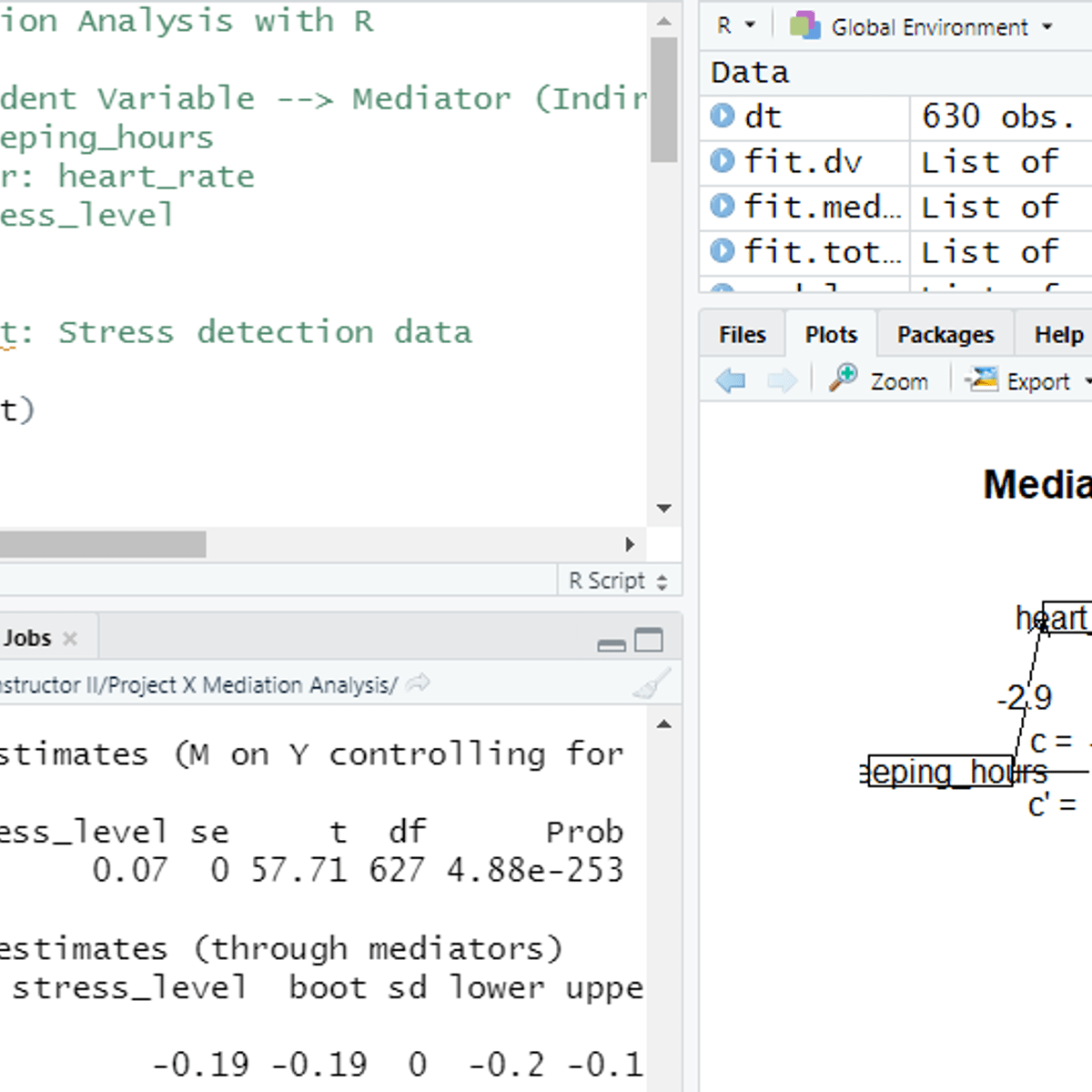

Mediation Analysis with R

In this project, you will learn to perform mediation analysis in RStudio. The project explains the theoretical concepts of mediation and illustrates the process with sample stress detection data. It covers the distinction between mediation and moderation and explains the criteria for selecting a suitable mediator. The project describes the mediation process using statistical models, diagnostic measures, and a conceptual diagram.
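The course works in R; as a language-neutral illustration, here is a minimal Python sketch of the classic product-of-coefficients view of mediation, assuming simple linear models fit by ordinary least squares. Path `a` regresses the mediator M on X, path `b` and the direct effect `c'` come from regressing Y on both X and M, and the indirect effect is `a * b`. For OLS on the same sample, the total effect of X on Y equals `c' + a * b`. The data below are made up, not the course's stress detection data, and the sketch omits the significance testing a real analysis needs.

```python
from statistics import fmean


def _centered(values):
    mean = fmean(values)
    return [v - mean for v in values]


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def simple_slope(x, y):
    """OLS slope of y on x (with intercept)."""
    xc, yc = _centered(x), _centered(y)
    return _dot(xc, yc) / _dot(xc, xc)


def two_predictor_slopes(x, m, y):
    """OLS slopes (c_prime, b) of y on x and m, solved via 2x2 normal equations."""
    xc, mc, yc = _centered(x), _centered(m), _centered(y)
    sxx, smm, sxm = _dot(xc, xc), _dot(mc, mc), _dot(xc, mc)
    sxy, smy = _dot(xc, yc), _dot(mc, yc)
    det = sxx * smm - sxm * sxm
    if det == 0:
        raise ValueError("x and m are perfectly collinear")
    c_prime = (smm * sxy - sxm * smy) / det
    b = (sxx * smy - sxm * sxy) / det
    return c_prime, b


def mediation(x, m, y):
    a = simple_slope(x, m)               # X -> M
    c_prime, b = two_predictor_slopes(x, m, y)  # direct X -> Y, and M -> Y
    total = simple_slope(x, y)           # total X -> Y
    return {"a": a, "b": b, "indirect": a * b, "direct": c_prime, "total": total}


# Made-up data: M depends on X, and Y depends on both X and M.
x = [1, 2, 3, 4, 5, 6]
noise = [0.1, -0.1, 0.2, -0.2, 0.0, 0.0]
m = [2 * xi + e for xi, e in zip(x, noise)]
y = [1 + 0.5 * xi + 1.5 * mi for xi, mi in zip(x, m)]
result = mediation(x, m, y)
print(result)
```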

Exploring the Public Cryptocurrency Datasets Available in BigQuery

This is a self-paced lab that takes place in the Google Cloud console. In this hands-on lab, you’ll learn how to use BigQuery to explore the cryptocurrency public datasets now available. This is a challenge lab, and you are required to write some simple SQL statements.

Predicting the Weather with Artificial Neural Networks

In this one-hour, project-based course, you will tackle a real-world prediction problem using machine learning. The dataset we are going to use comes from the Australian government, which recorded daily weather observations from a number of Australian weather stations. We will use this data to train an artificial neural network to predict whether it will rain tomorrow.

By the end of this project, you will have created a machine learning model using industry-standard tools, including Python and scikit-learn (sklearn).
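The smallest building block of such a network is a single sigmoid neuron, which is equivalent to logistic regression. The sketch below trains one neuron by batch gradient descent in plain Python. It is far simpler than the scikit-learn model the course builds, and the single feature (a scaled afternoon humidity reading) and its values are hypothetical, not taken from the Australian dataset.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_neuron(xs, ys, lr=1.0, epochs=5000):
    """Fit one sigmoid neuron by batch gradient descent on mean binary cross-entropy."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        gw = gb = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # derivative of the loss w.r.t. the pre-activation
            gw += err * x
            gb += err
        w -= lr * gw / n
        b -= lr * gb / n
    return w, b


def predict(w, b, x, threshold=0.5):
    """Return 1 ("rain tomorrow") if the predicted probability reaches the threshold."""
    return int(sigmoid(w * x + b) >= threshold)


# Hypothetical scaled humidity readings and whether it rained the next day.
humidity = [0.20, 0.30, 0.35, 0.40, 0.70, 0.80, 0.85, 0.90]
rain_tomorrow = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_neuron(humidity, rain_tomorrow)
print(predict(w, b, 0.10), predict(w, b, 0.95))
```

A real network stacks many such units in hidden layers and uses many weather features; the training loop follows the same gradient-descent idea.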

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

DataOps Methodology

DataOps is defined by Gartner as “a collaborative data management practice focused on improving the communication, integration and automation of data flows between data managers and consumers across an organization. Much like DevOps, DataOps is not a rigid dogma, but a principles-based practice influencing how data can be provided and updated to meet the need of the organization’s data consumers.”

The DataOps Methodology is designed to enable an organization to use a repeatable process to build and deploy analytics and data pipelines. By following data governance and model management practices, the organization can deliver high-quality enterprise data to enable AI. Successful implementation of this methodology allows an organization to know, trust, and use data to drive value.

In the DataOps Methodology course, you will learn best practices for defining a repeatable, business-oriented framework for delivering trusted data. This course is part of the Data Engineering Specialization, which provides learners with the foundational skills required to be a Data Engineer.

What is Financial Accounting?

Students are introduced to the field of financial accounting by defining its foundational activities, tools, and users. Students learn to use the accounting equation and are introduced to the four major financial statements. Additional topics include ethical considerations, recording business transactions, and applying the rules of debits and credits.
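The accounting equation (Assets = Liabilities + Equity) and the debit/credit rules fit together in double-entry bookkeeping: every transaction debits one account and credits another by the same amount, so the equation stays balanced. The minimal Python sketch below illustrates this for asset, liability, and equity accounts only (no revenue or expense accounts). The `Ledger` class, account names, and amounts are hypothetical.

```python
class Ledger:
    """Minimal double-entry ledger covering asset, liability, and equity accounts."""

    def __init__(self, account_types):
        # account_types maps an account name to "asset", "liability", or "equity".
        self.types = dict(account_types)
        self.balances = {name: 0 for name in self.types}

    def post(self, debit_account, credit_account, amount):
        """Record one transaction: debit one account and credit another by the same amount."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        self._apply(debit_account, amount, is_debit=True)
        self._apply(credit_account, amount, is_debit=False)

    def _apply(self, name, amount, is_debit):
        # Debits increase assets; credits increase liabilities and equity.
        increases = (self.types[name] == "asset") == is_debit
        self.balances[name] += amount if increases else -amount

    def total(self, kind):
        return sum(bal for name, bal in self.balances.items() if self.types[name] == kind)


ledger = Ledger({
    "Cash": "asset",
    "Equipment": "asset",
    "Notes Payable": "liability",
    "Owner's Capital": "equity",
})
ledger.post("Cash", "Owner's Capital", 10_000)  # owner invests cash
ledger.post("Cash", "Notes Payable", 5_000)     # business borrows cash
ledger.post("Equipment", "Cash", 3_000)         # equipment bought for cash

assets = ledger.total("asset")
claims = ledger.total("liability") + ledger.total("equity")
print(assets, claims)
```

Amounts are kept as integers so the balance check is exact.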

Exploratory Data Analysis with MATLAB

In this course, you will learn to think like a data scientist and ask questions of your data. You will use interactive features in MATLAB to extract subsets of data and to compute statistics on groups of related data. You will learn to use MATLAB to automatically generate code so you can learn syntax as you explore. You will also use interactive documents, called live scripts, to capture the steps of your analysis, communicate the results, and provide interactive controls allowing others to experiment by selecting groups of data.

These skills are valuable for those who have domain knowledge and some exposure to computational tools, but no programming background is required. To be successful in this course, you should have some knowledge of basic statistics (e.g., histograms, averages, standard deviation, curve fitting, interpolation).

By the end of this course, you will be able to load data into MATLAB, prepare it for analysis, visualize it, perform basic computations, and communicate your results to others. In your last assignment, you will combine these skills to assess damages following a severe weather event and communicate a polished recommendation based on your analysis of the data. You will be able to visualize the location of these events on a geographic map and create sliding controls allowing you to quickly visualize how a phenomenon changes over time.

Measuring Stock Liquidity

In this one-hour, project-based course, you will learn how to use Average Daily Traded Volume and Share Turnover to measure liquidity, use Depth of Market (DOM) and the Bid-Ask Spread to compare liquidity, and use the Variance Ratio to quantify liquidity.
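Several of these measures reduce to short formulas. The Python sketch below uses common textbook definitions, which may differ from the exact variants taught in the course. Share turnover is taken as total volume over the period divided by shares outstanding. The spread is expressed relative to the bid-ask midpoint. The variance ratio compares the variance of overlapping q-period log returns with q times the variance of one-period log returns; a random walk gives a ratio near 1. All function names and inputs are illustrative.

```python
from statistics import fmean, variance


def average_daily_traded_volume(daily_volumes):
    """Mean number of shares traded per day over the period."""
    return fmean(daily_volumes)


def share_turnover(daily_volumes, shares_outstanding):
    """Total shares traded over the period relative to shares outstanding."""
    return sum(daily_volumes) / shares_outstanding


def relative_bid_ask_spread(bid, ask):
    """Quoted spread as a fraction of the bid-ask midpoint."""
    mid = (bid + ask) / 2
    return (ask - bid) / mid


def variance_ratio(log_returns, q):
    """Var(overlapping q-period returns) / (q * Var(1-period returns))."""
    if q < 2 or len(log_returns) <= q:
        raise ValueError("need q >= 2 and more than q returns")
    # Summing consecutive log returns gives the multi-period log return.
    q_returns = [sum(log_returns[i:i + q]) for i in range(len(log_returns) - q + 1)]
    return variance(q_returns) / (q * variance(log_returns))


adtv = average_daily_traded_volume([100, 200, 300])
turnover = share_turnover([100, 200, 300], shares_outstanding=1000)
spread = relative_bid_ask_spread(bid=99, ask=101)
# Perfectly alternating returns cancel over two periods, so the ratio is 0.
vr = variance_ratio([0.01, -0.01, 0.01, -0.01, 0.01, -0.01], q=2)
print(adtv, turnover, spread, vr)
```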

Note: This course works best for learners who are based in the North America region. We're currently working on providing the same experience in other regions.

This course's content is not intended to be investment advice and does not constitute an offer to perform any operations in the regulated or unregulated financial market.

© 2024 BoostGrad | All rights reserved