
Data Management Courses - Page 6

Showing results 51-60 of 399

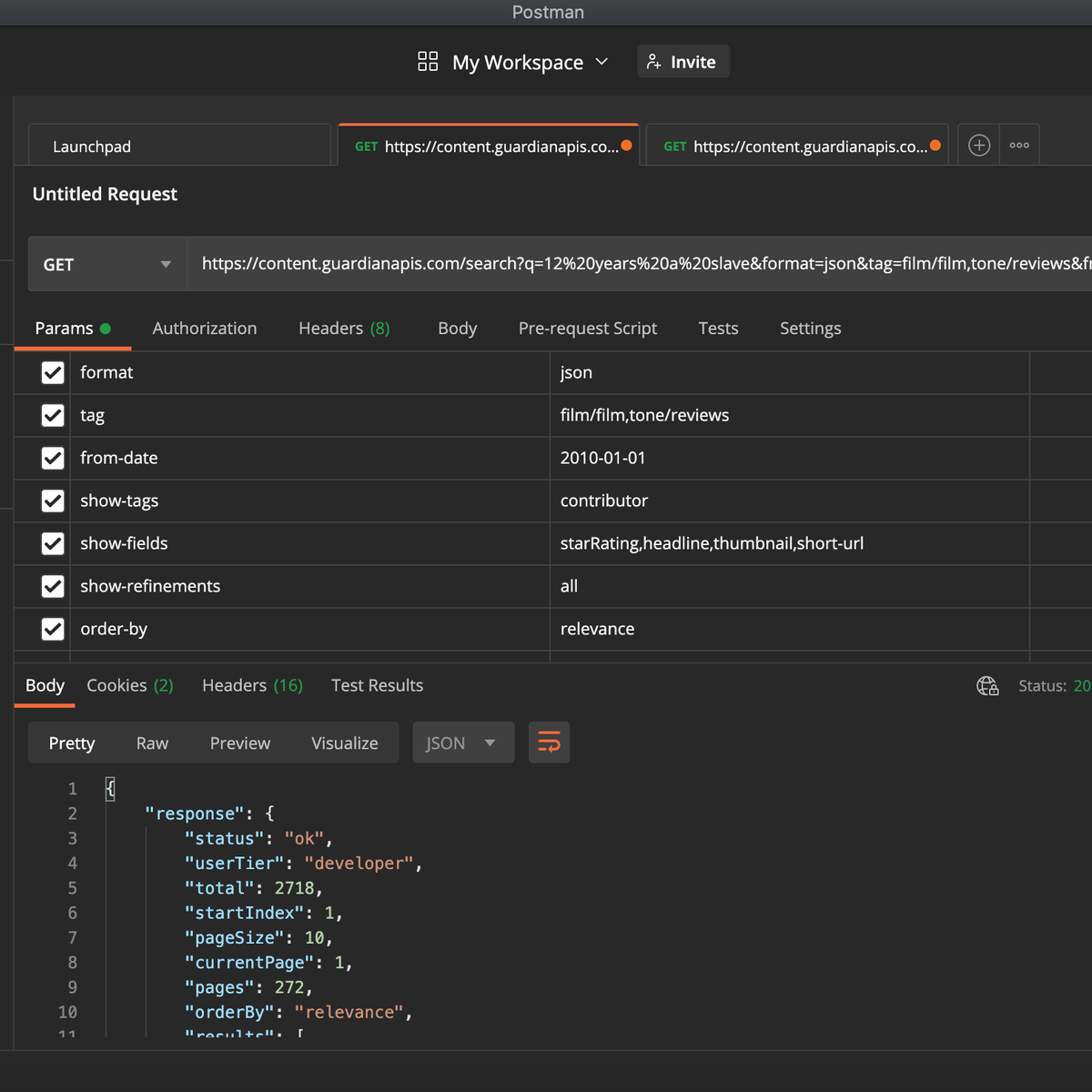

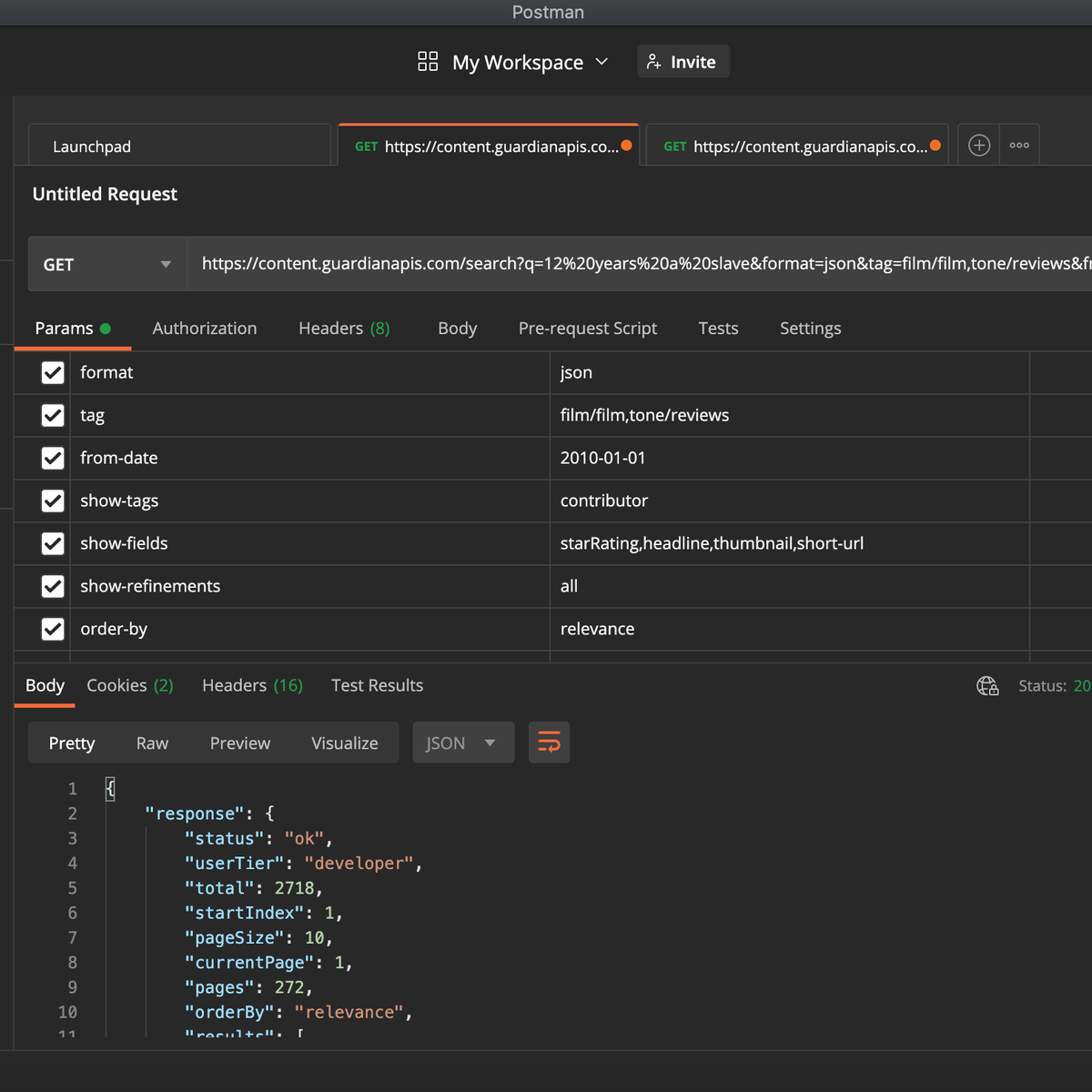

Postman - Intro to APIs (without coding)

We use APIs every day: when we check the news or log into an online service. Many companies provide APIs as a way to interact with their products or services. Being able to understand and send API requests is helpful in many roles across a business, including product, marketing, and data. If you work alongside APIs in your job, or you want to use APIs in your tech or data projects, this course is a great introduction to interacting with APIs without writing code, using a program called Postman. By the end of this project, you will understand what APIs are and what they are used for. You will have interacted with a number of APIs and will recognise the different parts that make up an API. You will feel comfortable reading API documentation and writing your own requests.
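For readers curious about what Postman assembles behind its interface, here is a minimal Python sketch of the parts of one API request: the HTTP method, the endpoint URL with a query parameter, the headers, and the JSON body. The endpoint `https://api.example.com/v1/notes`, the token, and the `draft` parameter are hypothetical placeholders, and the request is only built, never sent.

```python
import json
from urllib.request import Request

# Hypothetical endpoint and token, used only to show the parts of a request.
BASE_URL = "https://api.example.com"
TOKEN = "YOUR_API_TOKEN"


def build_create_note_request(title: str) -> Request:
    """Assemble (but do not send) a POST request that carries a JSON body."""
    body = json.dumps({"title": title}).encode("utf-8")
    return Request(
        url=f"{BASE_URL}/v1/notes?draft=true",  # endpoint path plus a query parameter
        data=body,  # request body
        headers={
            "Content-Type": "application/json",  # how the server should parse the body
            "Authorization": f"Bearer {TOKEN}",  # credentials
        },
        method="POST",  # HTTP method
    )


req = build_create_note_request("Hello")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Passing `req` to `urllib.request.urlopen` would actually send it; in Postman, the same fields appear as the method dropdown, the URL bar, the Headers tab, and the Body tab.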

Using DAX throughout PowerBI to create robust data scenarios

If you don't use the Data Analysis Expressions (DAX) language, you will miss out on much of Power BI's potential as an analytical tool, and the journey to becoming a DAX master starts with the right first step. This project-based course, "Using DAX throughout Power BI to create robust data scenarios," is intended for novice data analysts who want to advance their knowledge and skills.

This 2-hour project-based course will teach you how to create calculated columns, measures, and tables using DAX while understanding the importance of context in DAX formulas. Finally, we'll round off the course by introducing time-intelligence functions and showing you how to use Quick Measures to create complex DAX code. The course is structured systematically and is very practical, giving you the option to practice as you progress.
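The difference between a calculated column, a measure, and a time-intelligence calculation can be sketched outside Power BI. The Python below is only an analogy under stated assumptions, not DAX and not how Power BI's engine evaluates formulas: the sales rows are hypothetical, the column is computed once per row (row context), the measure is re-aggregated under whatever filter is applied (filter context), and the year-to-date function is loosely analogous to DAX's `TOTALYTD`.

```python
from datetime import date

# Hypothetical sales rows: (order_date, region, amount).
sales = [
    (date(2024, 1, 15), "East", 100.0),
    (date(2024, 2, 3), "West", 250.0),
    (date(2024, 2, 20), "East", 50.0),
    (date(2024, 3, 9), "East", 75.0),
]

# Calculated column: evaluated once per row (row context) and stored with the row.
sales_with_flag = [(d, region, amt, amt >= 100) for d, region, amt in sales]


# Measure: an aggregation re-evaluated under whatever filter is active (filter context).
def total_sales(rows, region=None):
    return sum(amt for _, r, amt in rows if region is None or r == region)


# Time intelligence: running year-to-date total as of a given date.
def sales_ytd(rows, as_of):
    return sum(amt for d, _, amt in rows if d.year == as_of.year and d <= as_of)


print(total_sales(sales))                   # no filter applied
print(total_sales(sales, region="East"))    # filtered to one region
print(sales_ytd(sales, date(2024, 2, 28)))  # Jan 1 through Feb 28
```

The same `total_sales` logic returns different numbers depending on the filter passed in, which is the core idea behind context in DAX measures.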

This project-based course is a beginner-level course in Power BI. Therefore, you should be familiar with the Power BI interface to get the most out of this project. Please join me on this beautiful ride! Let's take the first step in your DAX mastery journey!

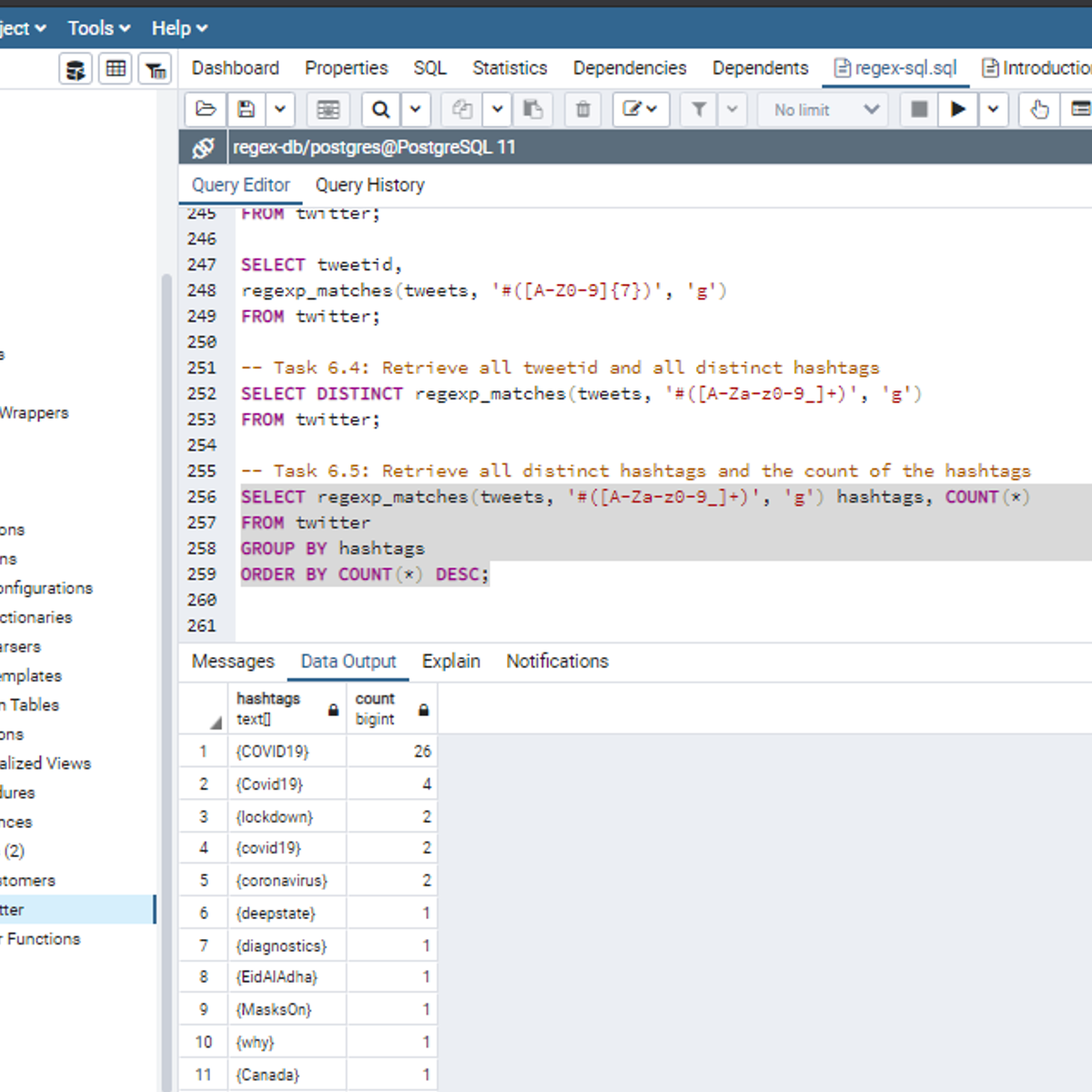

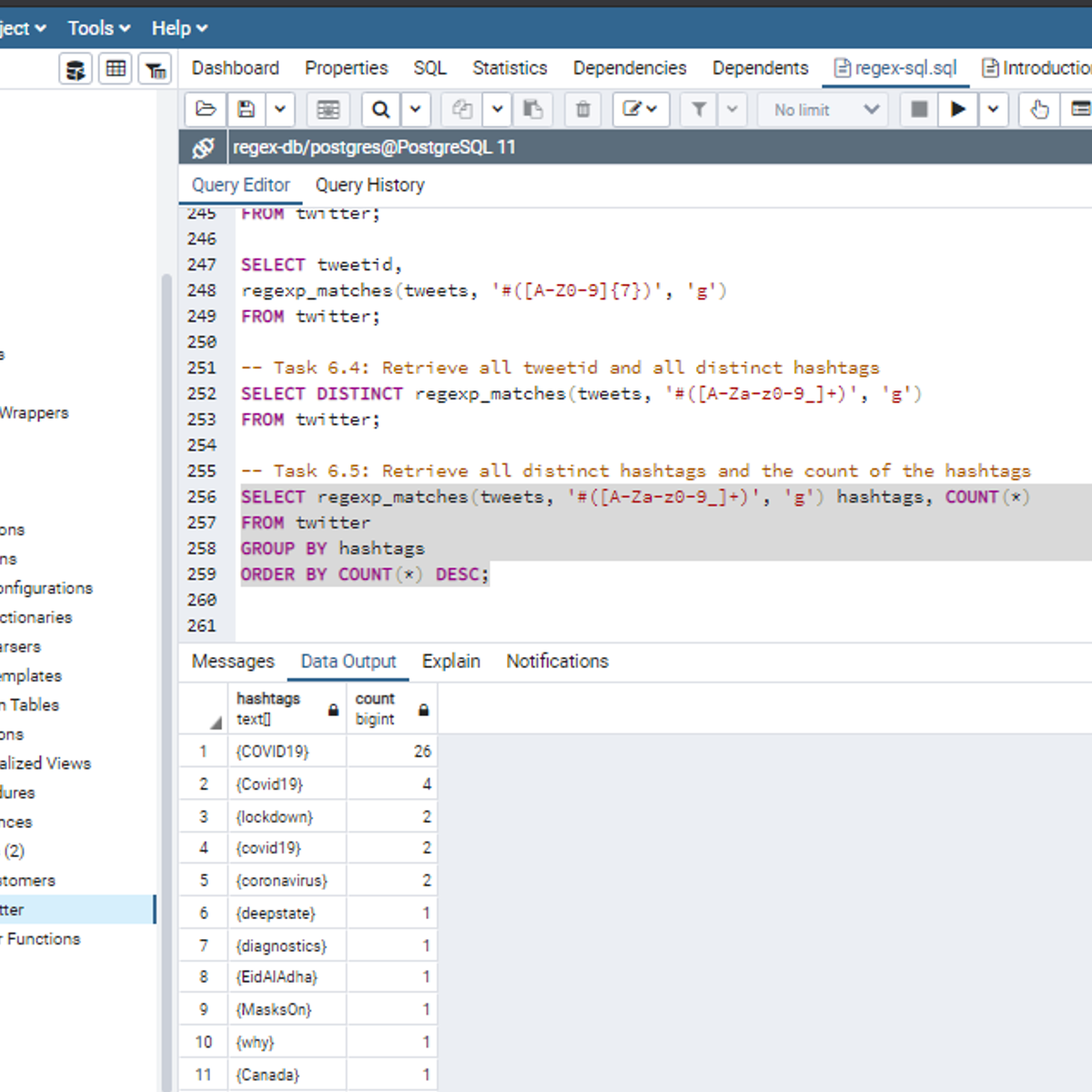

Introduction to Regular Expressions in SQL

Welcome to this project-based course, Introduction to Regular Expressions in SQL. In this project, you will learn how to use SQL regular expressions extensively for pattern matching to query tables in a database.

By the end of this 2.5-hour project, you will be able to use POSIX regular expressions together with metacharacters (special characters) in the WHERE and SELECT clauses to retrieve the desired results from a database. We will move systematically, first revising the use of the LIKE and NOT LIKE operators in the WHERE clause. Then, we will use different regular expression metacharacters together with POSIX operators in the WHERE clause. We will also use regular expressions to work with tweets from a Twitter dataset. Be assured that you will get your hands really dirty in this project, because you will work through many exercises that reinforce the concepts.
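The gap between LIKE and a regular expression can be tried without a PostgreSQL server. The sketch below assumes Python's built-in `sqlite3` module: SQLite has no regex engine of its own, but it rewrites `X REGEXP Y` into a call to a user function `regexp(Y, X)`, which we register using Python's `re`. The tweets are hypothetical, and Python's regex syntax is close to, but not identical to, PostgreSQL's POSIX flavour; the PostgreSQL form of each query is noted in a comment.

```python
import re
import sqlite3

conn = sqlite3.connect(":memory:")


def regexp(pattern, value):
    """Called by SQLite for `value REGEXP pattern`; returns True on a match."""
    return value is not None and re.search(pattern, value) is not None


# SQLite ships no regex engine: "X REGEXP Y" calls a user function regexp(Y, X).
conn.create_function("regexp", 2, regexp)

conn.execute("CREATE TABLE tweets (id INTEGER PRIMARY KEY, body TEXT)")
conn.executemany(
    "INSERT INTO tweets (body) VALUES (?)",
    [
        ("Loving #SQL today",),
        ("no tags here",),
        ("Ping @data_team about #regex",),
        ("We're #1 again",),
    ],
)

# LIKE only knows the % and _ wildcards, so it matches any '#'.
like_ids = conn.execute(
    "SELECT id FROM tweets WHERE body LIKE '%#%' ORDER BY id"
).fetchall()

# A regex can describe structure: '#' followed by at least one letter.
# PostgreSQL equivalent: WHERE body ~ '#[A-Za-z]+'  (~* is case-insensitive, !~ negates)
hashtag_ids = conn.execute(
    "SELECT id FROM tweets WHERE body REGEXP '#[A-Za-z]+' ORDER BY id"
).fetchall()

# Mentions: '@' followed by word characters.
mention_ids = conn.execute(
    r"SELECT id FROM tweets WHERE body REGEXP '@\w+' ORDER BY id"
).fetchall()
```

LIKE returns tweet 4 ("#1") because it only checks for a `#` character, while the regex requires the `#` to be followed by letters, so only real hashtags match.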

For this hands-on project, we will use PostgreSQL as our database management system (DBMS), so prior experience with PostgreSQL is required. This project also covers intermediate SQL concepts, so a good foundation in writing SQL queries is vital.

If you are not familiar with writing SQL queries and want to learn these concepts, start with my previous guided project, “Querying Databases using SQL SELECT statement.” I taught that project using PostgreSQL, so taking it will give you the prerequisites for this Introduction to Regular Expressions in SQL project. However, if you are comfortable writing queries in PostgreSQL, please join me on this wonderful ride! Let’s get our hands dirty!

Data Publishing on BigQuery using Authorized Views for Data Sharing Partners

This is a self-paced lab that takes place in the Google Cloud console. In this lab, you will learn how Authorized Views in BigQuery can be used to share customer-specific data from a Data Sharing Partner.
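The data-publishing half of the idea is a view that exposes only one customer's rows and only selected columns. The sketch below uses SQLite through Python purely as a local stand-in, with hypothetical table, column, and customer names. SQLite has no access control, so the "authorized" part is not modeled: in BigQuery, the view is authorized to read the source dataset, and the partner is granted access only to the dataset that contains the view, never to the underlying table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical source table holding every customer's orders.
    CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL);
    INSERT INTO orders VALUES (1, 'acme', 10.0), (2, 'globex', 20.0), (3, 'acme', 5.0);

    -- Publish only one customer's rows, and only selected columns, through a view.
    CREATE VIEW acme_orders AS
        SELECT order_id, amount FROM orders WHERE customer = 'acme';
""")

rows = conn.execute("SELECT * FROM acme_orders ORDER BY order_id").fetchall()
print(rows)
```

Whoever queries `acme_orders` sees neither the `customer` column nor the other customer's order, which is the filtering an authorized view lets you share safely.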

Extract Text Data with Bash and Regex

By the end of this project, you will extract email text data from a file using a regular expression in a bash script.

The bash shell is widely used in Linux distributions. A common task on Linux is extracting specific fields from files. For example, files containing email addresses can be difficult to analyze because of extraneous data, and error log files are easier to analyze when you match specific data fields.
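The core of the extraction is the regular expression itself. Here it is sketched in Python so it can be tested in isolation; the pattern is a deliberately simple assumption (it does not accept every address the email standards allow), and the sample text is made up. In a bash script, a pattern like this would typically be passed to `grep -oE`, which prints only the matching parts of each line.

```python
import re

# A deliberately simple email pattern; it does not cover every valid address.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(text: str) -> list:
    """Return email addresses in order of first appearance, without duplicates."""
    return list(dict.fromkeys(EMAIL_RE.findall(text)))


sample = """From: alice@example.com
To: bob.smith@example.org, alice@example.com
Subject: logs attached (see server-01 report)
"""
print(extract_emails(sample))
```

The surrounding header text, punctuation, and the repeated address are discarded, leaving only the field of interest.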

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Pivot Tables in Google Sheets

This is a self-paced lab that takes place in the Google Cloud console. Create pivot tables to quickly summarize large amounts of data and reference data using named ranges. Use functions and formulas to calculate descriptive statistics.
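A pivot table groups rows by one field, spreads another field across columns, and aggregates the values. This Python sketch performs the same computation on hypothetical data, with regions as rows, products as columns, and the sum of units as values, and then computes the descriptive statistics that Google Sheets' `AVERAGE`, `MEDIAN`, and `STDEV` (sample standard deviation) would return for the units column.

```python
from collections import defaultdict
from statistics import mean, median, stdev

# Hypothetical rows: (region, product, units).
rows = [
    ("North", "Pens", 10), ("North", "Paper", 4),
    ("South", "Pens", 7), ("South", "Pens", 3), ("South", "Paper", 8),
]

# Pivot: regions as rows, products as columns, SUM(units) as values.
pivot = defaultdict(lambda: defaultdict(int))
for region, product, units in rows:
    pivot[region][product] += units
pivot_table = {region: dict(cols) for region, cols in pivot.items()}

# Descriptive statistics over the units column (stdev is the sample standard deviation).
units_col = [u for _, _, u in rows]
summary = {"mean": mean(units_col), "median": median(units_col), "stdev": stdev(units_col)}

print(pivot_table)
print(summary)
```

The two South "Pens" rows collapse into a single cell, which is exactly the summarizing a pivot table does for large sheets.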

Create Fault Tolerant MongoDB Cluster

In this course, you will create a MongoDB replica set on a single Linux server to become familiar with the setup. The replica set will have three database servers: one primary and two secondaries. You will populate a database collection from a CSV file using the Mongo shell and then retrieve data from the collection to verify it. Finally, one of the servers will be taken down, and you will observe that the data is still available through a newly elected primary server.

Database replication is an important aspect of data management. Keeping copies of a database on multiple servers allows continuous access to data when one database server goes down. In production, each replica should run on a separate physical server, so that if an entire physical server becomes unavailable, the other database servers can still be accessed. MongoDB handles replication in a straightforward manner. There is one primary server, which receives all write operations from applications. Whenever a write occurs on the primary, the secondary servers are updated with the new data. When the primary goes down, the remaining members elect one of the secondaries as the new primary. A replica set should have at least three members, so that a majority can still be formed to elect a new primary after one member fails.
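Why three members? An election needs a strict majority of the voting members, and this small Python model shows the arithmetic. It is a simplification: real MongoDB elections also weigh member priority, how up to date each member's data is, and other settings, none of which are modeled here.

```python
def majority(voting_members: int) -> int:
    """Votes needed to elect a primary: a strict majority of voting members."""
    return voting_members // 2 + 1


def can_elect_primary(voting_members: int, members_down: int) -> bool:
    """True if the members still running can form a majority on their own."""
    return voting_members - members_down >= majority(voting_members)


for size in (2, 3, 5):
    print(f"{size} members: majority={majority(size)}, "
          f"survives one failure={can_elect_primary(size, 1)}")
```

With two members, losing one leaves a single vote, which is not a majority of two, so no new primary can be elected. With three members, the two survivors still form a majority.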

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

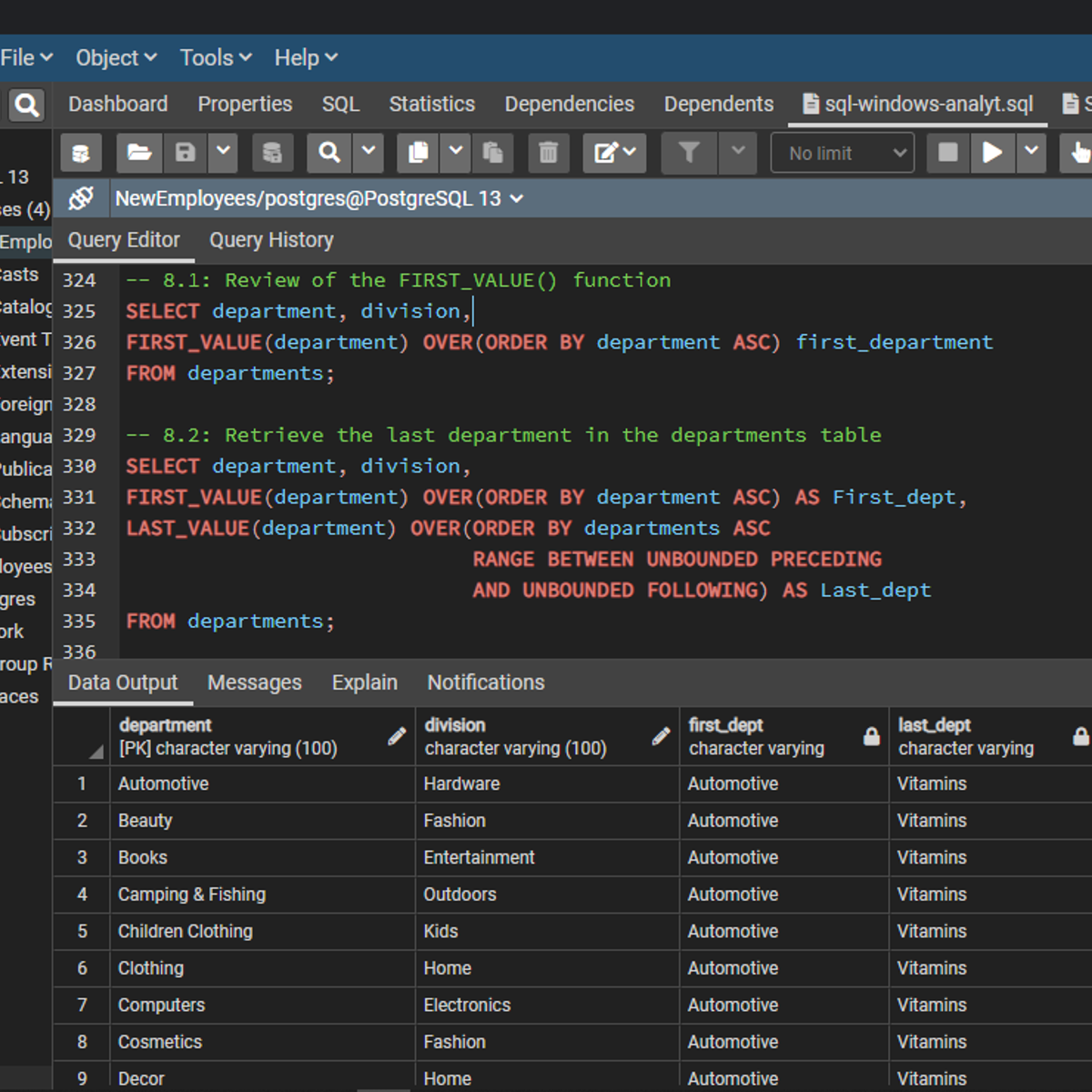

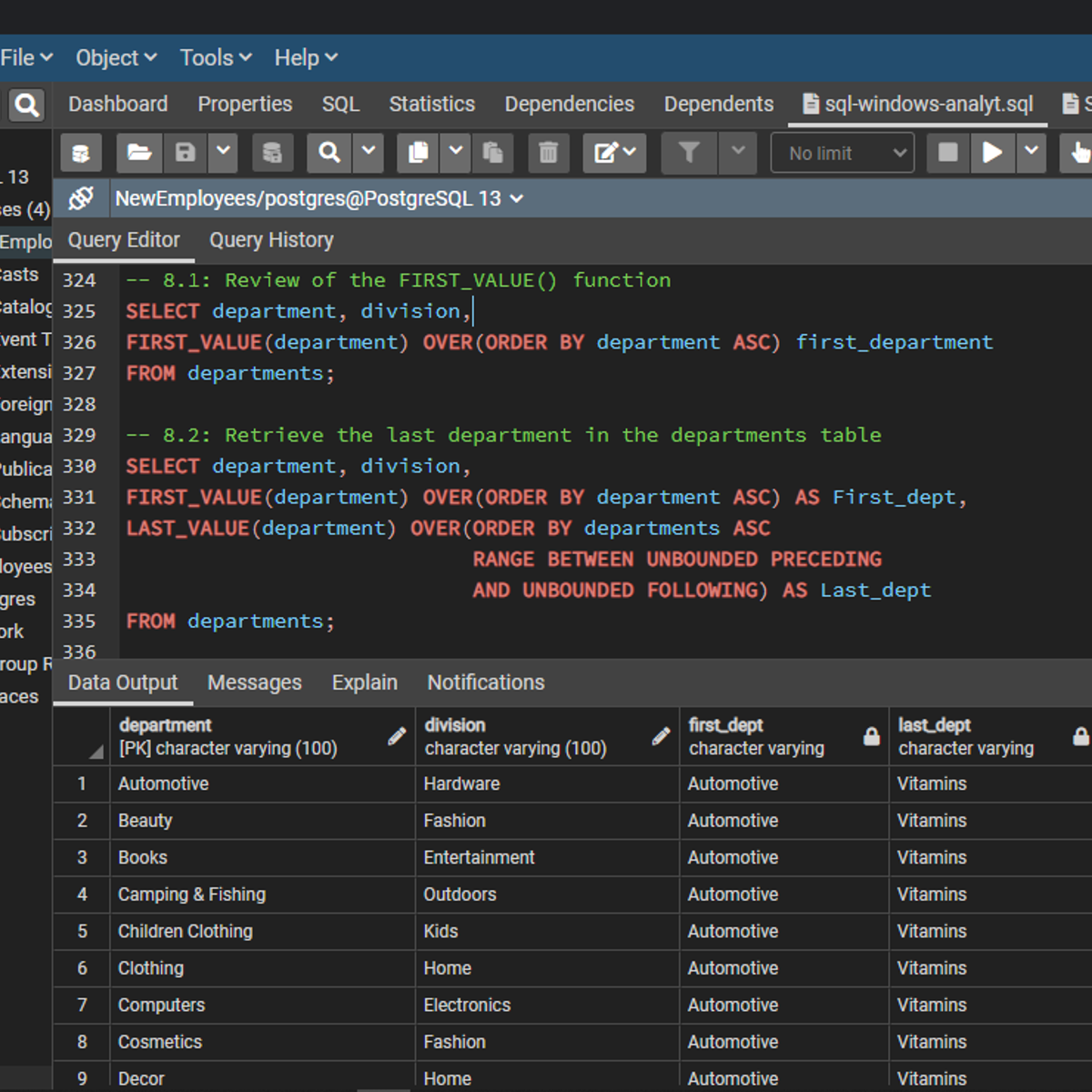

SQL Window Functions for Analytics

Welcome to this project-based course, SQL Window Functions for Analytics. This hands-on project will help SQL users apply window functions to gain insights from a database. You will learn how to explore and query the project-db database, starting by retrieving the data in its table.

By the end of this 2.5-hour project, you will be able to use different window functions to retrieve the desired results from a database. You will learn how to use SQL window functions such as ROW_NUMBER(), RANK(), DENSE_RANK(), NTILE(), and LAST_VALUE() to analyze data in the project-db database. We will also consider how to use aggregate window functions. These window functions will be used together with the OVER() clause to query the database. Finally, we will use the GROUP BY extensions GROUPING SETS, ROLLUP, and CUBE to retrieve subtotals and grand totals.
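The ranking functions differ only in how they treat ties, which is easiest to see side by side. This sketch runs the query through Python's `sqlite3` module rather than the course's project-db database, so it assumes an SQLite build of 3.25 or later (the first version with window functions); the `scores` table is hypothetical. SQLite does not support GROUPING SETS, ROLLUP, or CUBE, so those are not shown here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE scores (player TEXT, points INTEGER);
    INSERT INTO scores VALUES ('ana', 90), ('ben', 85), ('cy', 90), ('dee', 70);
""")

rows = conn.execute("""
    SELECT player,
           points,
           ROW_NUMBER() OVER (ORDER BY points DESC, player) AS row_num,   -- always unique
           RANK()       OVER (ORDER BY points DESC)         AS rnk,       -- ties share, then skip
           DENSE_RANK() OVER (ORDER BY points DESC)         AS dense_rnk, -- ties share, no gaps
           NTILE(2)     OVER (ORDER BY points DESC, player) AS half       -- split into 2 buckets
    FROM scores
    ORDER BY row_num
""").fetchall()

for row in rows:
    print(row)
```

Because ana and cy tie at 90, RANK() jumps from 1 to 3 for ben, while DENSE_RANK() continues at 2.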

Joining Data in R using dplyr

At different points in your work as a data enthusiast, you will need to join or merge two or more data sets. The dplyr package offers powerful functions to help you perform the join operation you need. This project-based course, "Joining Data in R using dplyr," is for R users who want to advance their knowledge and skills.

In this course, you will learn practical ways to manipulate data in R. We will discuss different join operations and spend most of our time joining the sales and customers data sets using the dplyr package. By the end of this 2-hour project, you will be able to perform inner, full (outer), right, left, cross, semi, and anti joins using base R's merge() function and dplyr functions.
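The join types are easiest to compare on a tiny example where some keys do not match. The sketch below is written in Python (not R) purely to illustrate the semantics that dplyr's `inner_join()`, `left_join()`, `semi_join()`, and `anti_join()` implement; the customers and sales data are hypothetical, and sale 103 deliberately references a customer that does not exist.

```python
# Hypothetical data sets keyed by customer_id.
customers = {1: "Ana", 2: "Ben", 3: "Cy"}
sales = [(101, 1, 50.0), (102, 1, 20.0), (103, 4, 30.0)]  # (sale_id, customer_id, amount)

# Inner join: keep only sales whose customer exists.
inner = [(sid, cid, amt, customers[cid]) for sid, cid, amt in sales if cid in customers]

# Left join (sales on the left): keep every sale; a missing name becomes None (NA in R).
left = [(sid, cid, amt, customers.get(cid)) for sid, cid, amt in sales]

# Semi join: customers with at least one sale; no columns from sales are added.
buyer_ids = {cid for _, cid, _ in sales}
semi = [cid for cid in customers if cid in buyer_ids]

# Anti join: customers with no sales at all.
anti = [cid for cid in customers if cid not in buyer_ids]

print(inner, left, semi, anti, sep="\n")
```

Semi and anti joins act as filters on the left table rather than combining columns, which is why they return only customer IDs here.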

This project-based course is an intermediate-level course in R. To get the most out of this project, you should have prior experience using R for basic analysis. I recommend that you complete the project "Data Manipulation with dplyr in R" before taking this one.

Speech to Text Transcription with the Cloud Speech API

This is a self-paced lab that takes place in the Google Cloud console.

The Cloud Speech API lets you transcribe speech to text from audio files in over 80 languages. In this hands-on lab, you’ll record your own audio file and send it to the Speech API for transcription.
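A transcription request is a JSON body with two parts: a `config` describing the audio and an `audio` object holding the data. This Python sketch only builds that body for the v1 `speech:recognize` endpoint and does not send it. The API key is a placeholder, and the FLAC encoding and 16000 Hz sample rate are assumptions that must match your actual recording.

```python
import base64
import json

# Placeholder credential; a real call needs a valid API key or OAuth token.
API_KEY = "YOUR_API_KEY"
ENDPOINT = f"https://speech.googleapis.com/v1/speech:recognize?key={API_KEY}"


def build_recognize_body(audio_bytes: bytes, language_code: str = "en-US") -> str:
    """Build a JSON body for a synchronous recognize request with inline audio."""
    body = {
        "config": {
            "encoding": "FLAC",         # assumption: the recording is FLAC
            "sampleRateHertz": 16000,   # assumption: must match the recording
            "languageCode": language_code,
        },
        # Inline audio is base64-encoded; a Cloud Storage file can be sent as "uri" instead.
        "audio": {"content": base64.b64encode(audio_bytes).decode("ascii")},
    }
    return json.dumps(body)


payload = build_recognize_body(b"fake-audio-bytes", "fr-FR")
print(ENDPOINT)
print(payload)
```

Changing `language_code` is how you request transcription in one of the other supported languages; the rest of the body stays the same.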

© 2024 BoostGrad | All rights reserved