
Data Science Courses - Page 72

Showing results 711-720 of 1407

Meaningful Marketing Insights

With marketers poised to be the largest users of data within the organization, there is a need to make sense of the variety of consumer data that the organization collects. Surveys, transaction histories and billing records can all provide insight into consumers’ future behavior, provided that they are interpreted correctly. In Introduction to Marketing Analytics, we introduce the tools that learners will need to convert raw data into marketing insights. The included exercises are conducted using Microsoft Excel, ensuring that learners will have the tools they need to extract information from the data available to them. The course provides learners with exposure to essential tools including exploratory data analysis, as well as regression methods that can be used to investigate the impact of marketing activity on aggregate data (e.g., sales) and on individual-level choice data (e.g., brand choices).

To successfully complete the assignments in this course, you will require Microsoft Excel. If you do not have Excel, you can download a free 30-day trial here: https://products.office.com/en-us/try

Advanced Manufacturing Process Analysis

Variability is a fact of life in manufacturing environments, impacting product quality and yield. Through this course, students will learn why advanced analysis of manufacturing processes is integral to diagnosing and correcting operational flaws in order to improve yields and reduce costs.

Gain insights into the best ways to collect, prepare and analyze data, as well as computational platforms that can be leveraged to collect and process data over sustained periods of time. Become better prepared to participate as a member of an advanced analysis team and share valuable inputs on effective implementation.

Main concepts of this course will be delivered through lectures, readings, discussions and various videos.

This is the fourth course in the Digital Manufacturing & Design Technology specialization that explores the many facets of manufacturing’s “Fourth Revolution,” aka Industry 4.0, and features a culminating project involving creation of a roadmap to achieve a self-established DMD-related professional goal. To learn more about the Digital Manufacturing and Design Technology specialization, please watch the overview video by copying and pasting the following link into your web browser: https://youtu.be/wETK1O9c-CA

Explainable Machine Learning with LIME and H2O in R

Welcome to this hands-on, guided introduction to Explainable Machine Learning with LIME and H2O in R. By the end of this project, you will be able to use the LIME and H2O packages in R for automatic and interpretable machine learning, build classification models quickly with H2O AutoML and explain and interpret model predictions using LIME.

Machine learning (ML) models such as Random Forests, Gradient Boosted Machines, Neural Networks, and Stacked Ensembles are often considered black boxes. However, their flexibility makes them more accurate for predicting non-linear phenomena. Experts agree that higher accuracy often comes at the price of interpretability, which is critical to business adoption, trust, and regulatory oversight (e.g., GDPR and the Right to Explanation). As more industries, from healthcare to banking, adopt ML models, their predictions are being used to justify the cost of healthcare and to approve or deny loans. For regulated industries that use machine learning, interpretability is a requirement. As Finale Doshi-Velez and Been Kim put it, interpretability is "the ability to explain or to present in understandable terms to a human."

To successfully complete the project, we recommend that you have prior experience programming in R, familiarity with basic machine learning theory, and experience training ML models in R.

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Measuring Total Data Quality

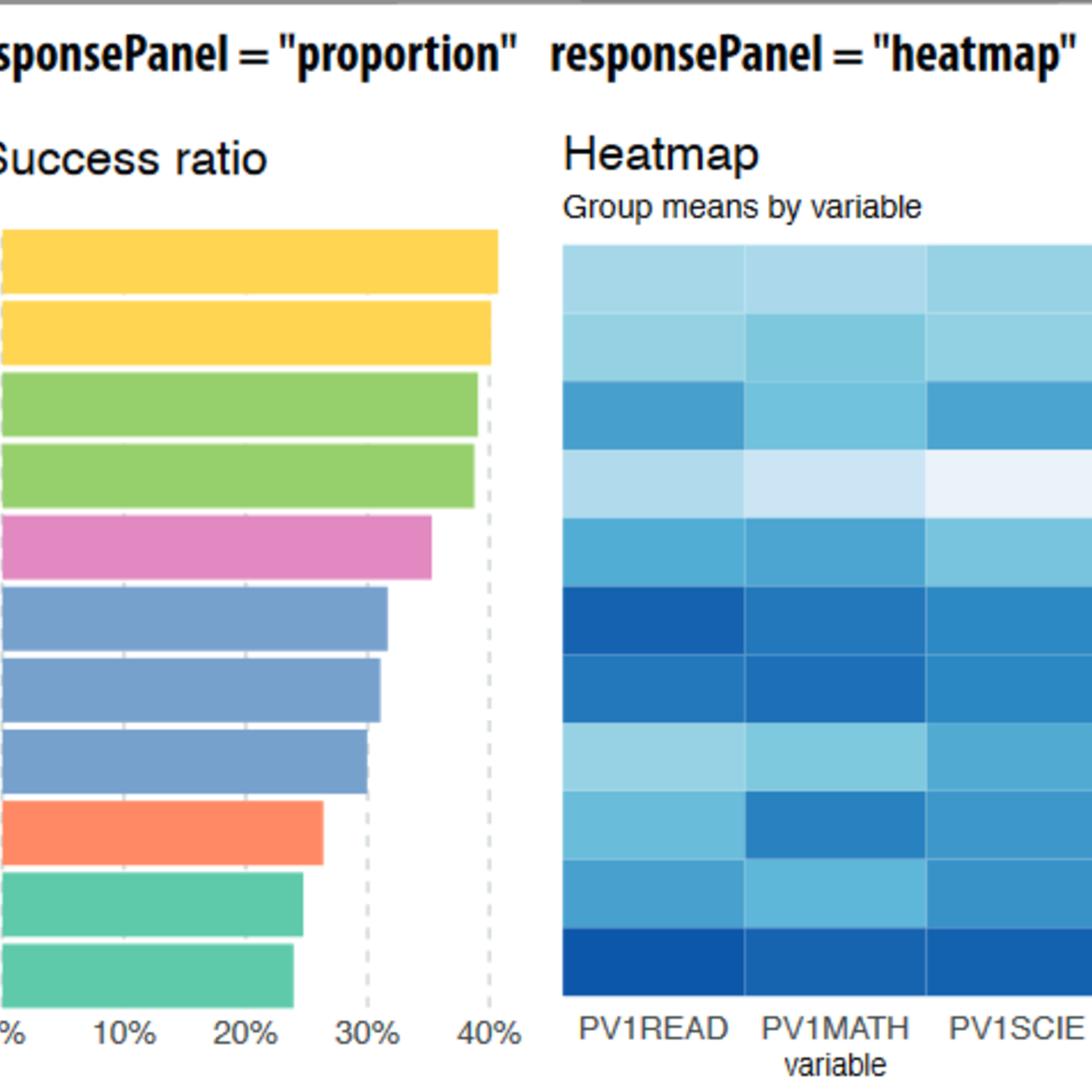

By the end of this second course in the Total Data Quality Specialization, learners will be able to:

1. Learn various metrics for evaluating Total Data Quality (TDQ) at each stage of the TDQ framework.

2. Create a quality concept map that tracks relevant aspects of TDQ from a particular application or data source.

3. Think through relative trade-offs between quality aspects, relative costs and practical constraints imposed by a particular project or study.

4. Identify relevant software and related tools for computing the various metrics.

5. Understand metrics that can be computed for both designed and found/organic data.

6. Apply the metrics to real data and interpret their resulting values from a TDQ perspective.

This specialization as a whole aims to explore the Total Data Quality framework in depth and provide learners with more information about the detailed evaluation of total data quality that needs to happen prior to data analysis. The goal is for learners to incorporate evaluations of data quality into their process as a critical component for all projects. We sincerely hope to disseminate knowledge about total data quality to all learners, such as data scientists and quantitative analysts, who have not had sufficient training in the initial steps of the data science process that focus on data collection and evaluation of data quality. We feel that extensive knowledge of data science techniques and statistical analysis procedures will not help a quantitative research study if the data collected/gathered are not of sufficiently high quality.

This specialization will focus on the essential first steps in any type of scientific investigation using data: either generating or gathering data, understanding where the data come from, evaluating the quality of the data, and taking steps to maximize the quality of the data prior to performing any kind of statistical analysis or applying data science techniques to answer research questions. Given this focus, there will be little material on the analysis of data, which is covered in myriad existing Coursera specializations. The primary focus of this specialization will be on understanding and maximizing data quality prior to analysis.

Matrix Factorization and Advanced Techniques

In this course you will learn a variety of matrix factorization and hybrid machine learning techniques for recommender systems. Starting with basic matrix factorization, you will understand both the intuition and the practical details of building recommender systems based on reducing the dimensionality of the user-product preference space. Then you will learn about techniques that combine the strengths of different algorithms into powerful hybrid recommenders.
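As a rough illustration of the basic matrix factorization idea described above, the following sketch learns low-dimensional user and item factors with stochastic gradient descent. It is a minimal example under stated assumptions, not the course's own material: the function names (`factorize`, `predict`, `rmse`), the hyperparameters, and the tiny ratings list are all hypothetical.

```python
import math
import random


def factorize(ratings, n_users, n_items, k=2, lr=0.01, reg=0.02,
              epochs=1000, seed=0):
    """Learn user factors P and item factors Q so that P[u] . Q[i] ~ rating.

    ratings is a list of (user_index, item_index, rating) triples.
    All hyperparameter defaults are illustrative assumptions.
    """
    rng = random.Random(seed)
    # Small random initial factors; k is the reduced dimensionality.
    P = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on squared error with L2 regularization.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


def predict(P, Q, u, i):
    """Predicted preference: dot product of user and item factors."""
    return sum(pf * qf for pf, qf in zip(P[u], Q[i]))


def rmse(ratings, P, Q):
    """Root mean squared error over the observed ratings."""
    sq = [(r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings]
    return math.sqrt(sum(sq) / len(sq))


# Hypothetical observed ratings: (user, item, rating on a 1-5 scale).
RATINGS = [
    (0, 0, 5), (0, 1, 4), (0, 3, 1),
    (1, 0, 4), (1, 2, 1), (1, 3, 1),
    (2, 1, 1), (2, 2, 5), (2, 3, 4),
    (3, 0, 1), (3, 2, 4),
]

if __name__ == "__main__":
    P, Q = factorize(RATINGS, n_users=4, n_items=4)
    print("training RMSE:", rmse(RATINGS, P, Q))
    print("predicted rating for user 3, item 3:", predict(P, Q, 3, 3))
```

The unobserved cells of the user-item matrix are filled in by the same dot product, which is what makes the reduced representation usable for recommendations.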

Probabilistic Deep Learning with TensorFlow 2

Welcome to this course on Probabilistic Deep Learning with TensorFlow!

This course builds on the foundational concepts and skills for TensorFlow taught in the first two courses in this specialisation, and focuses on the probabilistic approach to deep learning. This is an increasingly important area of deep learning that aims to quantify the noise and uncertainty that is often present in real-world datasets. This is a crucial aspect when using deep learning models in applications such as autonomous vehicles or medical diagnoses; we need the model to know what it doesn't know.

You will learn how to develop probabilistic models with TensorFlow, making particular use of the TensorFlow Probability library, which is designed to make it easy to combine probabilistic models with deep learning. As such, this course can also be viewed as an introduction to the TensorFlow Probability library.

You will learn how probability distributions can be represented and incorporated into deep learning models in TensorFlow, including Bayesian neural networks, normalising flows and variational autoencoders. You will learn how to develop models for uncertainty quantification, as well as generative models that can create new samples similar to those in the dataset, such as images of celebrity faces.

You will put concepts that you learn about into practice straight away in practical, hands-on coding tutorials, which you will be guided through by a graduate teaching assistant. In addition there is a series of automatically graded programming assignments for you to consolidate your skills.

At the end of the course, you will bring many of the concepts together in a Capstone Project, where you will develop a variational autoencoder algorithm to produce a generative model of a synthetic image dataset that you will create yourself.

This course follows on from the previous two courses in the specialisation, Getting Started with TensorFlow 2 and Customising Your Models with TensorFlow 2. The additional prerequisite knowledge required in order to be successful in this course is a solid foundation in probability and statistics. In particular, it is assumed that you are familiar with standard probability distributions, probability density functions, and concepts such as maximum likelihood estimation, change of variables formula for random variables, and the evidence lower bound (ELBO) used in variational inference.

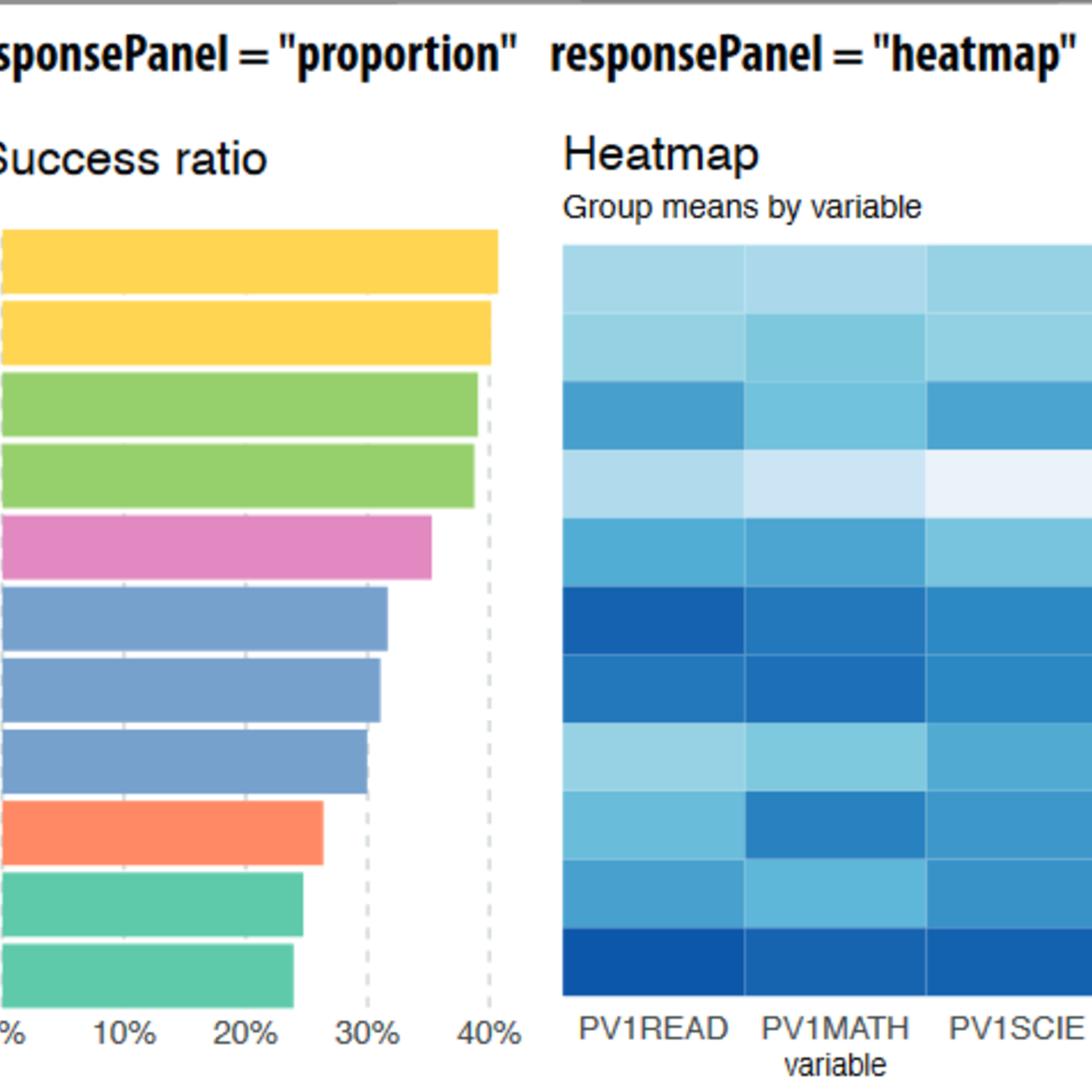

Fundamentals of Data Analytics in the Public Sector with R

Gain a foundational understanding of key terms and concepts in public administration and public policy while learning foundational programming techniques using the R programming language. You will learn how to execute functions to load, select, filter, mutate, and summarize data frames using the tidyverse libraries with an emphasis on the dplyr package. By the end of the course, you will create custom functions and apply them to population data which is commonly found in public sector analytics.

Throughout the course, you will work with authentic public datasets, and all programming can be completed in RStudio on the Coursera platform without additional software.

This is the first of four courses within the Data Analytics in the Public Sector with R Specialization. The series is ideal for current or early career professionals working in the public sector looking to gain skills in analyzing public data effectively. It is also ideal for current data analytics professionals or students looking to enter the public sector.

Introduction to Data Science and scikit-learn in Python

This course will teach you how to leverage the power of Python and artificial intelligence to create and test hypotheses. We'll start from the ground up, learning some basic Python for data science before diving into some of its richer applications to test the hypotheses we create. We'll learn some of the most important libraries for exploratory data analysis (EDA) and machine learning, such as NumPy, pandas, and scikit-learn. After learning some of the theory (and math) behind linear regression, we'll go through a full pipeline of reading data, cleaning it, and applying a regression model to estimate the progression of diabetes. By the end of the course, you'll apply a classification model to predict the presence or absence of heart disease from a patient's health data.
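To make the math behind linear regression concrete, here is a minimal sketch of ordinary least squares for a single feature, written in plain Python rather than scikit-learn so the formulas stay visible. The function name `fit_simple_ols` and the data points are hypothetical and are not drawn from the course's diabetes dataset.

```python
def fit_simple_ols(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares.

    Uses the closed-form solution:
        slope     = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x)^2)
        intercept = mean_y - slope * mean_x
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


if __name__ == "__main__":
    # Hypothetical data lying exactly on the line y = 2x + 1.
    xs = [0.0, 1.0, 2.0, 3.0]
    ys = [1.0, 3.0, 5.0, 7.0]
    slope, intercept = fit_simple_ols(xs, ys)
    print("slope:", slope, "intercept:", intercept)
```

With several features, the same least squares problem is usually solved with a library routine instead of by hand.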

Machine Learning for Telecom Customers Churn Prediction

In this hands-on project, we will train several classification algorithms, such as Logistic Regression, Support Vector Machine, K-Nearest Neighbors, and Random Forest Classifier, to predict the churn rate of telecommunication customers. Machine learning helps companies analyze customer churn rate based on several factors, such as the services customers subscribe to, tenure rate, and payment method. Predicting churn rate is crucial for these companies because the cost of retaining an existing customer is far less than the cost of acquiring a new one.

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Financial Risk Management with R

This course teaches you how to calculate the return of a portfolio of securities as well as quantify the market risk of that portfolio, an important skill for financial market analysts in banks, hedge funds, insurance companies, and other financial services and investment firms. Using the R programming language with Microsoft Open R and RStudio, you will use the two main tools for calculating the market risk of stock portfolios: Value-at-Risk (VaR) and Expected Shortfall (ES). You will need a beginner-level understanding of R programming to complete the assignments of this course.
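The two risk measures named above can be sketched with a simple historical-simulation estimator. The course itself works in R; the Python below is only an illustrative sketch under stated assumptions. The function names, the tail-size convention (the worst `floor(n * (1 - alpha))` observations, at least one), and the sample returns are all hypothetical choices, and other estimators (for example, parametric ones) give different numbers.

```python
import math


def portfolio_return(weights, asset_returns):
    """One-period portfolio return: weighted sum of the asset returns."""
    return sum(w * r for w, r in zip(weights, asset_returns))


def historical_var_es(returns, alpha=0.95):
    """Estimate (VaR, ES) at confidence level alpha from past returns.

    Both values are reported as positive loss fractions.
    VaR is the smallest loss in the worst (1 - alpha) tail;
    ES is the average loss within that tail.
    """
    if not returns:
        raise ValueError("returns must be non-empty")
    # Convert returns to losses and sort with the largest loss first.
    losses = sorted((-r for r in returns), reverse=True)
    # Small epsilon guards against floating-point error in n * (1 - alpha).
    tail_size = max(1, math.floor(len(losses) * (1 - alpha) + 1e-9))
    tail = losses[:tail_size]
    var = tail[-1]
    es = sum(tail) / len(tail)
    return var, es


if __name__ == "__main__":
    # Hypothetical daily portfolio returns.
    daily = [0.01, -0.02, 0.005, -0.05, 0.03,
             -0.01, 0.02, -0.03, 0.015, 0.0]
    var, es = historical_var_es(daily, alpha=0.8)
    print("VaR:", var, "ES:", es)
    print("portfolio return:", portfolio_return([0.5, 0.5], [0.02, -0.01]))
```

By construction ES is never smaller than VaR at the same confidence level, since it averages the losses at or beyond the VaR threshold.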


© 2024 BoostGrad | All rights reserved