Software Development Courses - Page 56

Use C# to Process XML Data

By the end of this project, you will use C# to process XML data. XML (eXtensible Markup Language) is a markup language used to transport data across the internet for display or other processing. It provides a standard format, so data can also be validated. C#, like other languages, provides classes to read and validate XML documents.

Launch an auto-scaling AWS EC2 virtual machine

In this 1-hour project-based course, you will learn how to launch an auto-scaling AWS EC2 virtual machine using the AWS console.

Amazon Elastic Compute Cloud (EC2) is the service you use to create and run virtual machines (VMs), also known as instances. By completing the steps in this guided project, you will successfully launch an auto-scaling Amazon EC2 virtual machine using the AWS console within the AWS Free Tier. You will also verify the auto-scaling EC2 virtual machine and then terminate your scaling infrastructure.

Note: This course works best for learners who are based in the North American region. We’re currently working on providing the same experience in other regions.

Machine Learning Algorithms

In this course you will:

a) understand the naïve Bayesian algorithm.

b) understand the Support Vector Machine algorithm.

c) understand the Decision Tree algorithm.

d) understand clustering.

Please make sure that you’re comfortable programming in Python and have a basic knowledge of mathematics, including matrix multiplication and conditional probability.
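As a rough self-check of the prerequisites named above, here is a minimal Python sketch of a matrix multiplication and a conditional-probability calculation using Bayes' rule. It is not taken from the course. The function names and all numbers are hypothetical, chosen only for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    # Entry (i, j) is the dot product of row i of a with column j of b.
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def posterior(prior, likelihood, likelihood_given_not):
    """Return P(A | B) from P(A), P(B | A), and P(B | not A) via Bayes' rule."""
    # Total probability of B, summed over both cases for A.
    evidence = likelihood * prior + likelihood_given_not * (1 - prior)
    return likelihood * prior / evidence


# Hypothetical toy inputs (assumptions for illustration only).
product = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
p = posterior(prior=0.2, likelihood=0.9, likelihood_given_not=0.1)

print(product)       # [[19, 22], [43, 50]]
print(round(p, 4))   # 0.6923
```

If both calculations read comfortably, the stated mathematical prerequisites are probably within reach.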

RPA Lifecycle: Development and Testing

To adopt RPA, you begin with the Discovery and Design phases and then proceed to the Development and Testing phase.

RPA Lifecycle – Development and Testing is the second course of the Specialization on Implementing RPA with Cognitive Solutions and Analytics.

In this course, you will learn how to develop and test bots. For this, you will use Automation Anywhere Enterprise Client (or AAE Client) to record, modify, and run tasks. AAE Client is a desktop application with an intuitive interface that makes it easy to create automated tasks. It features ‘SMART’ Automation technology that quickly automates complex tasks without the need for any programming. The learning will be reinforced through concept descriptions, bot building, and guided practice.

App Engine: Qwik Start - Python

This is a self-paced lab that takes place in the Google Cloud console. This hands-on lab shows you how to create a small App Engine application that displays a short message.
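The lab's own code is not shown here, so the following is only a minimal sketch of what "a small application that displays a short message" can look like in Python. It uses only the standard library. The file name `main.py`, the callable name `app`, and the message text are assumptions, and the lab itself may use a web framework and additional configuration files that are not reproduced here.

```python
# main.py (hypothetical file name)
from wsgiref.simple_server import make_server

MESSAGE = b"Hello, World!"  # assumed message; the lab may use different text


def app(environ, start_response):
    """A WSGI application that answers every request with a short message."""
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(MESSAGE))),
    ]
    start_response("200 OK", headers)
    return [MESSAGE]


def run_locally(port=8080):
    """Serve the app on localhost for manual testing (not called automatically)."""
    with make_server("127.0.0.1", port, app) as server:
        server.serve_forever()
```

Calling `run_locally()` from a Python shell and opening `http://127.0.0.1:8080/` in a browser should show the message. Deploying to App Engine needs further steps that the lab covers.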

Node.js Backend Basics with Best Practices

By the end of this project, you will create a backend using industry best practices that you can adapt to all of your different projects.

This project gives you a head start with one of the most widely used backend libraries, Express.js. It walks you through the steps to design a Node.js architecture that follows the separation of concerns design pattern. Learning Node.js and Express.js will open the door for you to create solid and scalable backend systems customized to your projects.

This guided project is for intermediate software developers who would like to learn how to deliver a scalable and well-designed backend to apply in their projects or in their work in the future.

Use Process Advisor to Analyze and Automate Manual Process

Imagine working in a car rental company that manually tracks which cars are rented out and which ones are ready for rental. As employees, we are responsible for informing all other employees about available cars, so we perform the same repetitive process each day: we open SharePoint, find a site and an Excel file, download the file, open it in Excel, filter for available cars, export the result as a PDF, and email it to the colleagues responsible for car rentals, so they know which cars can be offered to customers. Of course, this process is time-consuming, and we don’t know which parts of it can be automated or how to automate them.

Thankfully, with Power Automate Process Advisor, not only can we see where the bottlenecks are and what can be automated, but we can also get recommendations for which Power Automate flow actions could be used to automate this business process. With that, we can easily hand the repetitive process over to machines and spend our time on other business tasks. So, from 7 manual steps which must be done each day, we will end up with only 1 manual step: triggering the flow which we will create. How cool is that?

This Guided Project "Use Process Advisor to Analyze and Automate Manual Process" is for any business professional who is looking to automate any kind of manual business process. In the project you will create a SharePoint site and a list (from a sample Excel file), and learn how to use Power Automate Process Advisor to analyze the manual process. You will also create a flow with the guidance of the Process Advisor analysis result. What’s great about SharePoint and Power Automate is that anyone can learn to use them regardless of their educational background!

Since this project uses Office 365 services (SharePoint, Excel, Outlook) and Power Automate (part of the Microsoft Power Platform), you will need access to a Microsoft account and a Microsoft 365 Developer Program subscription account. In the video at the beginning of the project you will be given instructions on how to sign up for both.

If you are ready to make your and your colleagues’ lives easier by starting to automate manual, time-consuming processes which are hard to track, then this project is for you! Let's get started!

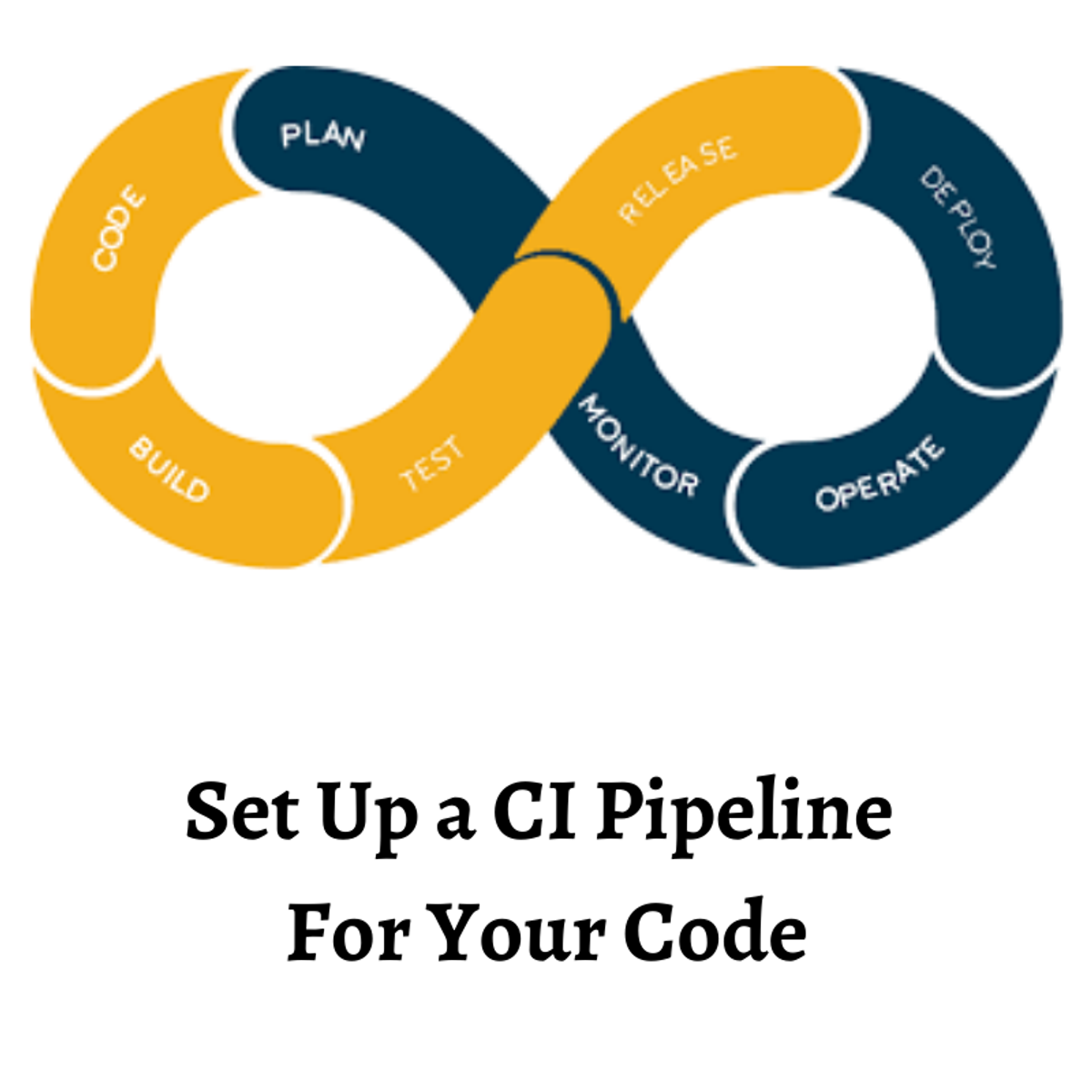

Set up a Continuous Integration (CI) workflow in CircleCI

In this 1-hour project-based course on setting up a Continuous Integration (CI) workflow in CircleCI, you will work through the complete workflow of getting a development project (a Node.js application) through version control (Git and GitHub) and into a simple CI pipeline in CircleCI.

This course is designed for developers who have never worked with a CI tool before and who want to understand how continuous integration can benefit their development processes and/or how it fits into a development lifecycle.

By the end of this course, you will have a working pipeline of your own (in your own CircleCI user account) which will handle the building and testing of your code based on any pull requests made to your project repository in GitHub.

This is a beginner course and as such is not designed for intermediate developers, DevOps professionals, or students who already understand CI/CD and want a deep dive into CircleCI and its various configuration capabilities.

Note: This course works best for learners who are based in the North American region. We’re currently working on providing the same experience in other regions.

Build an Online Auction Server with ExpressJS

Have you ever wanted to learn about backend (server) development and become a "full-stack" developer (someone who can do front-end and back-end development)? It is not as complicated as you think! In this 1.5-hour class, you will dive right in, learn the basics of one of the most popular web server frameworks, and write a server process to serve a simulated online auction website!

Recommended background: HTML, CSS, JavaScript, RESTful API.

DevOps on Alibaba Cloud

Course description:

The ACP DevOps Engineer Course is designed for developers and operations experts who will be deploying applications on Alibaba Cloud using DevOps tools and best practices. The course covers Alibaba Cloud's Kubernetes Container Service (ACK), the ARMS Prometheus monitoring service, Log Service, ActionTrail, Container Registry, and more. It’s recommended for developers, operators, and maintainers.

The ACP DevOps Engineer Certification is designed for developers and operations experts who will be deploying applications on Alibaba Cloud using DevOps tools and best practices. The exam covers Alibaba Cloud's Kubernetes Container Service (ACK), the ARMS Prometheus monitoring service, Log Service, ActionTrail, Container Registry, and more.

To earn an official Alibaba Cloud certificate, please find the registration portal on the Academy's website:

https://edu.alibabacloud.com/certification/acp_devops

© 2024 BoostGrad | All rights reserved