Machine Learning Courses - Page 10

Python for Data Visualization: Matplotlib & Seaborn

In this hands-on project, we will learn the fundamentals of data visualization with Python and leverage the power of two important Python libraries: Matplotlib and Seaborn. We will learn how to generate line plots, scatter plots, histograms, distribution plots, 3D plots, pie charts, pair plots, count plots, and more!

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Scikit-Learn For Machine Learning Classification Problems

Hello everyone, and welcome to this new hands-on project on the Scikit-Learn library for solving machine learning classification problems. In this project, we will learn how to build and train classifier models using Scikit-Learn. Scikit-learn is a free machine learning library for Python. It offers several algorithms for classification, regression, and clustering, including well-known models such as support vector machines, random forests, gradient boosting, and k-means.

Getting started with TensorFlow 2

Welcome to this course on Getting started with TensorFlow 2!

In this course, you will learn a complete end-to-end workflow for developing deep learning models with TensorFlow: building, training, evaluating, and making predictions with models using the Sequential API; validating your models and adding regularisation; implementing callbacks; and saving and loading models.
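
Callbacks run at fixed points in the training loop, such as the end of each epoch. A common use is early stopping: halt training once the validation loss has not improved for a given number of epochs. The plain-Python sketch below isolates that logic only. `EarlyStoppingMonitor` and `train` are hypothetical names, and although `patience` and `min_delta` mirror arguments of Keras's built-in `EarlyStopping` callback, this is not the Keras implementation. The loss values are made up for illustration.

```python
class EarlyStoppingMonitor:
    """Hypothetical helper: signal a stop when validation loss stops improving."""

    def __init__(self, patience: int = 2, min_delta: float = 0.0):
        self.patience = patience      # epochs to wait after the last improvement
        self.min_delta = min_delta    # smallest decrease that counts as an improvement
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.wait = 0

    def on_epoch_end(self, epoch: int, val_loss: float) -> bool:
        """Record this epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


def train(val_losses, patience=2):
    """Simulate a training loop that observes one validation loss per epoch."""
    monitor = EarlyStoppingMonitor(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if monitor.on_epoch_end(epoch, loss):
            return epoch, monitor.best_epoch
    return len(val_losses) - 1, monitor.best_epoch


# Made-up validation losses: improvement stalls after epoch 2.
stopped_at, best = train([0.90, 0.60, 0.45, 0.47, 0.46, 0.44, 0.40], patience=2)
print(stopped_at, best)  # → 4 2
```

Note that training stops at epoch 4 even though the loss would have improved again at epoch 5; choosing `patience` is a trade-off between wasted epochs and stopping too early.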

You will put the concepts you learn into practice straight away in hands-on coding tutorials, guided by a graduate teaching assistant. In addition, there is a series of automatically graded programming assignments for you to consolidate your skills.

At the end of the course, you will bring many of the concepts together in a Capstone Project, where you will develop an image classifier deep learning model from scratch.

TensorFlow is an open-source machine learning library and one of the most widely used frameworks for deep learning. The release of TensorFlow 2 marks a step change in the product's development, with a central focus on ease of use for all users, from beginner to advanced level. This course is intended both for users who are completely new to TensorFlow and for users with experience in TensorFlow 1.x.

The prerequisite knowledge required to be successful in this course is proficiency in the Python programming language (this course uses Python 3); knowledge of general machine learning concepts (such as overfitting/underfitting, supervised learning tasks, validation, regularisation, and model selection); and a working knowledge of the field of deep learning, including typical model architectures (MLP/feedforward and convolutional neural networks), activation functions, output layers, and optimisation.

Linear Algebra Basics

Machine learning and data science are among the most popular research topics today, with applications across engineering and the sciences. Machine learning tools provide data-driven solutions to many real-life problems, and a basic knowledge of linear algebra is necessary to develop new algorithms for machine learning and data science. In this course, you will learn the mathematical concepts of linear algebra, including vector spaces, subspaces, linear span, basis, and dimension. The course also covers linear transformations, the rank and nullity of a linear transformation, eigenvalues, eigenvectors, and the diagonalization of matrices. Singular value decomposition, inner product spaces, and norms of vectors and matrices round out the course contents.
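
As a small worked example of eigenvalues, eigenvectors, and diagonalization (the matrix is chosen for illustration and is not taken from the course materials), consider a symmetric 2x2 matrix A:

```latex
A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}, \qquad
\det(A - \lambda I) = (2 - \lambda)^2 - 1 = (\lambda - 3)(\lambda - 1) = 0
\;\Rightarrow\; \lambda_1 = 3,\ \lambda_2 = 1

v_1 = \begin{pmatrix} 1 \\ 1 \end{pmatrix} \ (A v_1 = 3 v_1), \qquad
v_2 = \begin{pmatrix} 1 \\ -1 \end{pmatrix} \ (A v_2 = v_2)

A = P D P^{-1}, \quad
P = \begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}, \quad
D = \begin{pmatrix} 3 & 0 \\ 0 & 1 \end{pmatrix}, \quad
P^{-1} = \tfrac{1}{2} \begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}
```

Because neither eigenvalue is zero, A has rank 2 and nullity 0.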

Medical Insurance Premium Prediction with Machine Learning

In this 1-hour-long project-based course, you will learn how to predict medical insurance costs with machine learning. The objective of this case study is to predict the health insurance cost incurred by individuals based on their age, gender, Body Mass Index (BMI), number of children, smoking habits, and geographic location.
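
The description does not say which model the course uses. As a minimal sketch, assuming a plain linear model, the code below fits ordinary least squares on three of the listed features (age, BMI, and a 0/1 smoker flag). Categorical features such as gender or location would first need encoding, for example as 0/1 indicator columns. The function names and toy rows are hypothetical, and the charges are generated from known coefficients so the recovered weights can be checked; they are not real insurance data.

```python
def fit_linear_regression(rows, targets):
    """Ordinary least squares via the normal equations (X^T X) w = X^T y."""
    # Prepend a constant 1 to each row so that w[0] is the intercept.
    X = [[1.0, *map(float, r)] for r in rows]
    p = len(X[0])
    # Build the normal-equation system A w = b.
    A = [[sum(x[i] * x[j] for x in X) for j in range(p)] for i in range(p)]
    b = [sum(x[i] * y for x, y in zip(X, targets)) for i in range(p)]
    # Forward elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, p):
            factor = A[r][col] / A[col][col]
            for c in range(col, p):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    w = [0.0] * p
    for i in reversed(range(p)):
        w[i] = (b[i] - sum(A[i][j] * w[j] for j in range(i + 1, p))) / A[i][i]
    return w


def predict(w, row):
    """Intercept plus weighted sum of the features."""
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], row))


# Hypothetical toy rows: [age, BMI, smoker (1 = yes)], with charges generated
# exactly from 1000 + 250*age + 300*BMI + 20000*smoker.
rows = [[25, 22.0, 0], [40, 30.5, 1], [33, 27.0, 0],
        [52, 24.5, 1], [61, 31.0, 0], [19, 28.0, 1]]
charges = [1000 + 250 * a + 300 * bmi + 20000 * s for a, bmi, s in rows]
w = fit_linear_regression(rows, charges)
print([round(v, 2) for v in w])  # → [1000.0, 250.0, 300.0, 20000.0]
```

Solving the normal equations directly is fine for a handful of features; libraries generally use more numerically stable factorizations for larger problems.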

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Classification of COVID19 using Chest X-ray Images in Keras

In this 1-hour-long project-based course, you will learn to build and train a convolutional neural network (CNN) from scratch in Keras, with TensorFlow as the backend, to classify patients as infected or not infected with COVID-19 using their chest X-ray images. Our goal is to create an image classifier with TensorFlow by implementing a CNN that differentiates between chest X-ray images with and without COVID-19 infection. The dataset contains lung X-ray images from both groups. We will carry out the entire project in the Google Colab environment.
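
At the core of a CNN is the convolution layer: a small kernel slides over the image to produce a feature map, which typically passes through a ReLU activation and a pooling layer. In Keras these steps correspond to layers such as `Conv2D` and `MaxPooling2D`. The plain-Python sketch below spells out that arithmetic on a toy 6x6 image. The function names and the hand-picked vertical-edge kernel are illustrative assumptions; a trained CNN learns its kernel weights from data (here, the X-ray images).

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, the operation CNN convolution layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU: negative responses become 0."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]   # dark-to-bright vertical edge
kernel = [[-1, 0, 1] for _ in range(3)]         # hand-picked vertical-edge filter
fmap = conv2d(image, kernel)
print(fmap[0])                   # → [0, 3, 3, 0]
print(max_pool(relu(fmap)))      # → [[3, 3], [3, 3]]
```

The feature map responds only where the edge is, and pooling halves each spatial dimension while keeping the strongest response.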

Please be aware that the dataset and the model in this project cannot be used in real-life settings. We are using this data for educational purposes only.

By the end of this project, you will be able to build and train a convolutional neural network using Keras with TensorFlow as the backend. You will also be able to perform data visualization and use the model to make predictions on new data.

You should be familiar with the Python programming language and have a theoretical understanding of Convolutional Neural Networks. You will need a free Gmail account to complete this project.

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

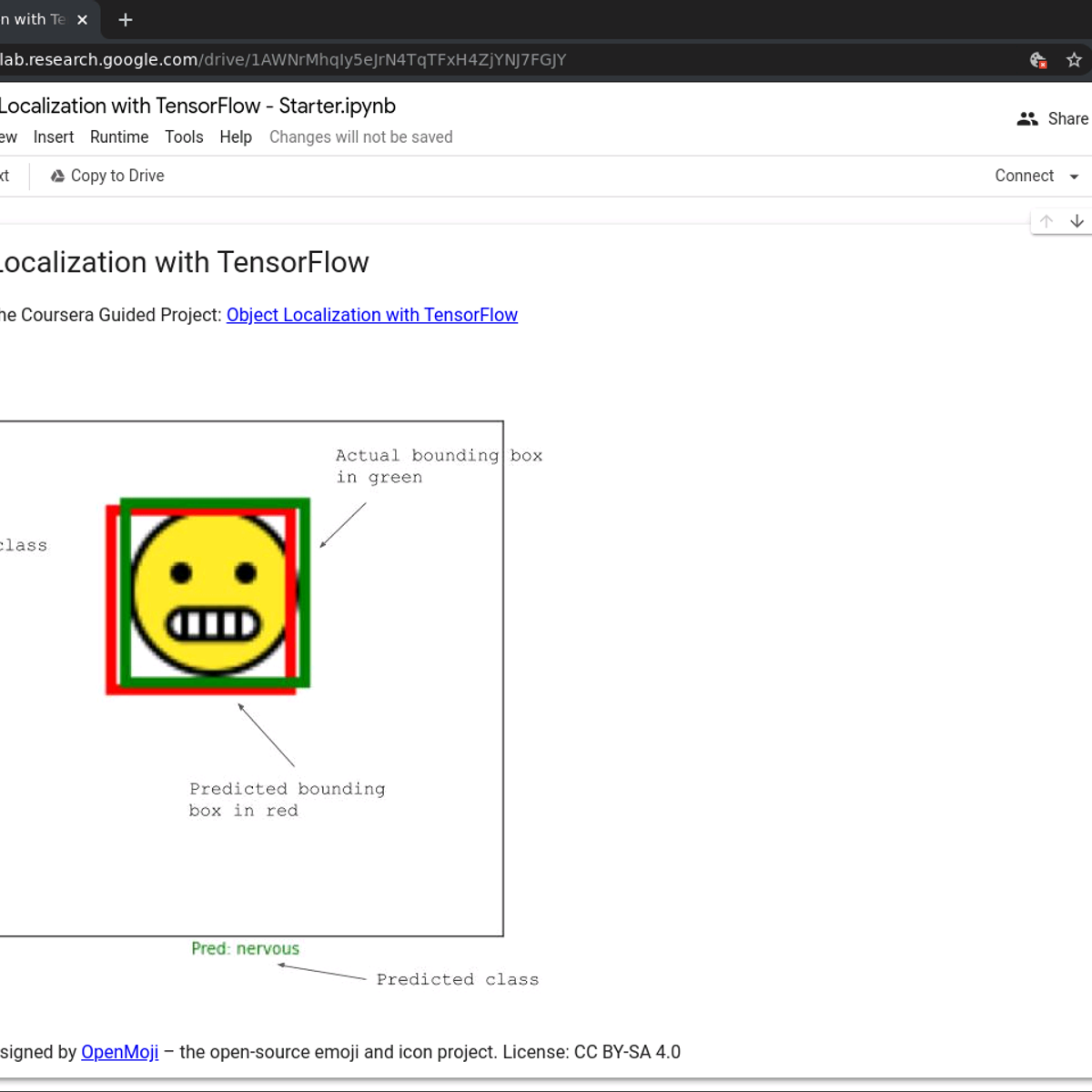

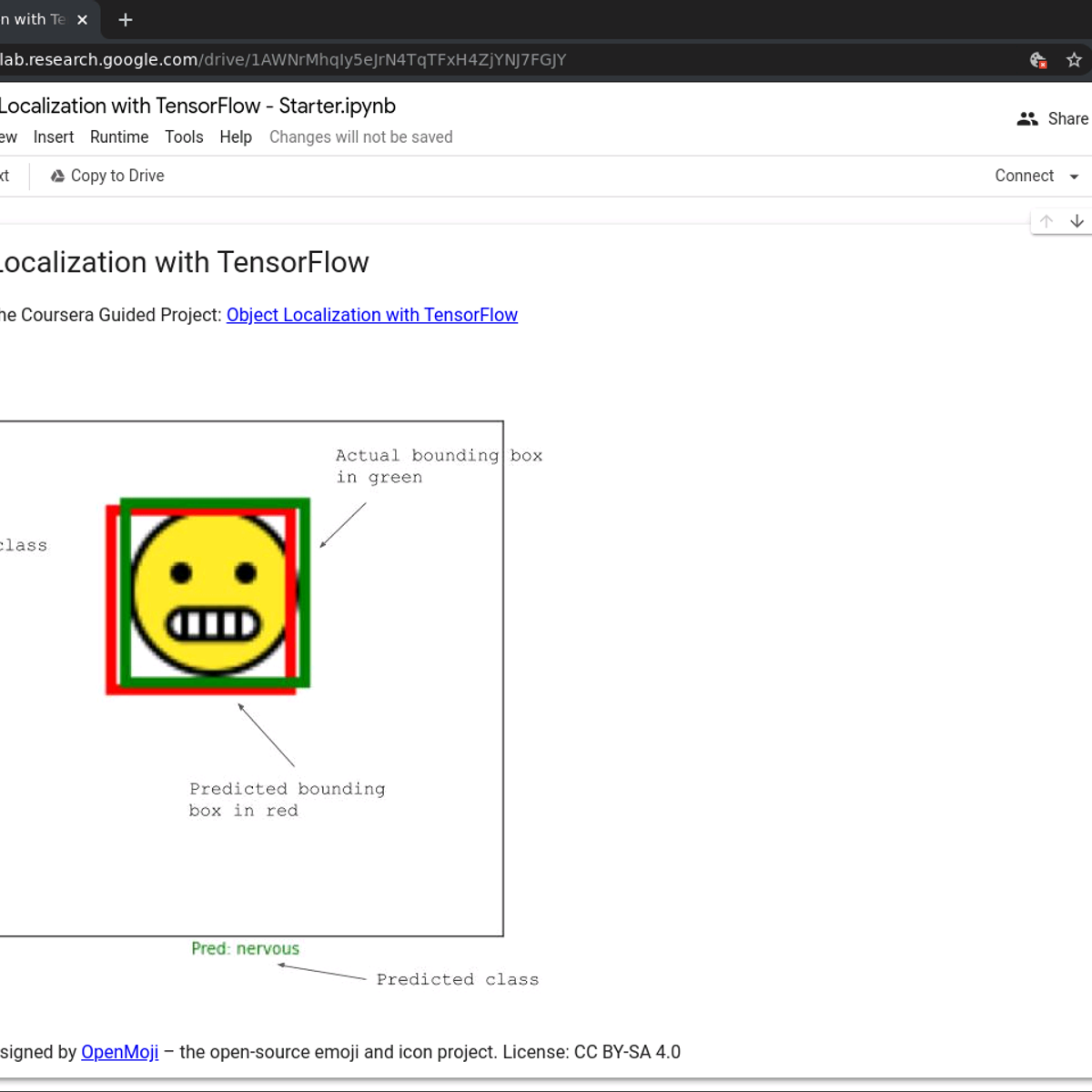

Object Localization with TensorFlow

Welcome to this 2-hour-long guided project on creating and training an Object Localization model with TensorFlow. In this guided project, we are going to use TensorFlow's Keras API to create a convolutional neural network that will be trained to both classify and localize emojis in images. Localization, in this context, means predicting the position of the emoji in the image. As a result, the network will have one input and two outputs. Think of this task as a simpler version of Object Detection. In Object Detection, the input images may contain multiple objects, and an object detection model predicts the classes as well as bounding boxes for all of those objects. In Object Localization, we work under the assumption that there is just one object in any given image, and our CNN model will classify and localize that object.
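
Because the network has one input and two outputs, training needs a loss for each output. A common setup, assumed here rather than taken from the project, is categorical cross-entropy for the class output plus mean squared error for the position output, combined as a weighted sum (Keras lets you pass one loss per output, and optional loss weights, when compiling a multi-output model). The plain-Python sketch below shows that arithmetic for a single example; the function names, the 2-value (x, y) position format, and `position_weight` are hypothetical.

```python
import math


def categorical_cross_entropy(true_index, probs, eps=1e-12):
    """Class-output loss: negative log of the probability given to the true class."""
    return -math.log(max(probs[true_index], eps))


def mean_squared_error(true_pos, pred_pos):
    """Position-output loss: mean squared error over the coordinates."""
    return sum((t - p) ** 2 for t, p in zip(true_pos, pred_pos)) / len(true_pos)


def localization_loss(true_class, class_probs, true_pos, pred_pos, position_weight=1.0):
    """Total loss for a one-input, two-output network (the weighting is an assumption)."""
    return (categorical_cross_entropy(true_class, class_probs)
            + position_weight * mean_squared_error(true_pos, pred_pos))


# One made-up example: 3 emoji classes, true class 1; normalized (x, y) position.
loss = localization_loss(1, [0.1, 0.7, 0.2], [0.5, 0.25], [0.4, 0.25])
print(round(loss, 4))  # → 0.3617
```

Raising `position_weight` makes the optimizer favor accurate positions over confident class predictions, and vice versa.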

Please note that you will need prior programming experience in Python. You will also need familiarity with TensorFlow. This is a practical, hands-on guided project for learners who already have a theoretical understanding of Neural Networks, Convolutional Neural Networks, and optimization algorithms like Gradient Descent, but want to understand how to use TensorFlow to solve computer vision tasks like Object Localization.

Classify Radio Signals from Space using Keras

In this 1-hour-long project-based course, you will learn the basics of using Keras with TensorFlow as its backend and use it to solve an image classification problem. The data we are going to use consists of 2D spectrograms of deep space radio signals collected by the Allen Telescope Array at the SETI Institute. We will treat the spectrograms as images to train an image classification model to classify the signals into one of four classes. By the end of the project, you will have built and trained a convolutional neural network from scratch using Keras to classify signals from space.
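
The project starts from spectrograms that have already been computed. To show how a 1-D signal becomes a 2-D "image", here is a minimal sketch that builds a spectrogram from a synthetic signal: the signal is cut into frames, and each frame's frequency content is measured with a naive discrete Fourier transform, giving rows for time and columns for frequency. The function names and test signal are hypothetical, and real pipelines usually apply a window function and use an FFT library instead of this O(N^2) loop.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitudes of the discrete Fourier transform for bins 0..N/2 (naive O(N^2))."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(signal, frame_size, hop):
    """Stack the spectra of successive frames: rows = time, columns = frequency bin."""
    return [
        dft_magnitudes(signal[start:start + frame_size])
        for start in range(0, len(signal) - frame_size + 1, hop)
    ]


# Synthetic signal: 2 cycles per 16 samples for t < 32, then 6 cycles per 16 samples.
signal = [math.cos(2 * math.pi * (2 if t < 32 else 6) * t / 16) for t in range(64)]
spec = spectrogram(signal, frame_size=16, hop=16)
peaks = [max(range(len(row)), key=row.__getitem__) for row in spec]
print(peaks)  # → [2, 2, 6, 6]
```

The jump of the peak from bin 2 to bin 6 is the kind of time-frequency pattern a CNN can learn to recognize when the spectrogram is treated as an image.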

This course runs on Coursera's hands-on project platform called Rhyme. On Rhyme, you do projects in a hands-on manner in your browser. You will get instant access to pre-configured cloud desktops containing all of the software and data you need for the project. Everything is already set up directly in your internet browser so you can just focus on learning. For this project, you’ll get instant access to a cloud desktop with Python, Jupyter, and TensorFlow pre-installed.

Notes:

- You will be able to access the cloud desktop 5 times. However, you can access the instructional videos as many times as you want.

- This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

Specialized Models: Time Series and Survival Analysis

This course introduces you to additional topics in Machine Learning that complement essential tasks, including forecasting and analyzing censored data. You will learn how to analyze data with a time component, as well as censored data that requires outcome inference. You will learn a few techniques for Time Series Analysis and Survival Analysis. The hands-on section of this course focuses on using best practices and verifying assumptions derived from Statistical Learning.
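
Censored data means that, for some subjects, the event of interest had not happened by the end of observation, so we only know that their survival time exceeds the last recorded time. One standard non-parametric way to estimate the survival function under censoring is the Kaplan-Meier estimator, sketched below in plain Python. The course may use a different method or library; the function name and toy durations are hypothetical.

```python
def kaplan_meier(durations, observed):
    """Kaplan-Meier estimate S(t) = product over event times t_i <= t of (1 - d_i / n_i).

    durations[i] is the last time subject i was observed; observed[i] is True if the
    event happened at that time and False if the subject was censored.
    """
    # Distinct times at which at least one event (not a censoring) occurred.
    event_times = sorted({t for t, e in zip(durations, observed) if e})
    survival = 1.0
    curve = []
    for t in event_times:
        at_risk = sum(1 for d in durations if d >= t)  # n_i: still under observation
        events = sum(1 for d, e in zip(durations, observed) if e and d == t)  # d_i
        survival *= 1 - events / at_risk
        curve.append((t, survival))
    return curve


# Hypothetical toy data: six subjects, two of them censored (at t = 3 and t = 6).
curve = kaplan_meier([2, 3, 3, 5, 6, 8], [True, True, False, True, False, True])
print([(t, round(s, 3)) for t, s in curve])
# → [(2, 0.833), (3, 0.667), (5, 0.444), (8, 0.0)]
```

Censored subjects never cause a drop in the curve, but they do count in the risk set up to their censoring time, which is how the estimator uses their partial information.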

By the end of this course, you should be able to:

- Identify common modeling challenges with time series data

- Explain how to decompose Time Series data into trend, seasonality, and residuals

- Explain how autoregressive, moving average, and ARIMA models work

- Understand how to select and implement various Time Series models

- Describe hazard and survival modeling approaches

- Identify types of problems suitable for survival analysis
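
The decomposition into trend, seasonality, and residuals can be illustrated with a minimal sketch of classical additive decomposition, y = trend + seasonal + residual: the trend is a centered moving average, the seasonal component is the average detrended value at each position in the cycle, and the residual is what remains. This plain-Python version is a simplification that only handles odd periods; libraries such as statsmodels provide a fuller implementation (`seasonal_decompose`). The function name is hypothetical, and the toy series is synthetic, built from a known trend and seasonal pattern so the output can be checked.

```python
def decompose(series, period):
    """Classical additive decomposition for an odd period: y = trend + seasonal + residual."""
    if period % 2 == 0:
        raise ValueError("this sketch only handles odd periods")
    n, half = len(series), period // 2
    # Trend: centered moving average over one full period; None near the edges.
    trend = [None] * n
    for t in range(half, n - half):
        trend[t] = sum(series[t - half:t + half + 1]) / period
    # Seasonal: average detrended value at each position in the cycle.
    buckets = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(series[t] - trend[t])
    raw = [sum(b) / len(b) for b in buckets]
    mean_raw = sum(raw) / period
    pattern = [s - mean_raw for s in raw]  # re-center so seasonal effects sum to 0
    seasonal = [pattern[t % period] for t in range(n)]
    # Residual: whatever the trend and seasonal components do not explain.
    residual = [None if trend[t] is None else series[t] - trend[t] - seasonal[t]
                for t in range(n)]
    return trend, seasonal, residual


# Synthetic series: linear trend 0.5*t plus a repeating pattern (2, -1, -1).
series = [0.5 * t + [2, -1, -1][t % 3] for t in range(12)]
trend, seasonal, residual = decompose(series, period=3)
print(trend[1:4])     # → [0.5, 1.0, 1.5]
print(seasonal[:3])   # → [2.0, -1.0, -1.0]
```

On real data the residual is not zero; inspecting it for leftover structure is one way to check whether the trend and seasonal assumptions hold.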

Who should take this course?

This course targets aspiring data scientists interested in acquiring hands-on experience with Time Series Analysis and Survival Analysis.

What skills should you have?

To make the most out of this course, you should be familiar with programming in a Python development environment and have a fundamental understanding of Data Cleaning, Exploratory Data Analysis, Calculus, Linear Algebra, Supervised Machine Learning, Unsupervised Machine Learning, Probability, and Statistics.

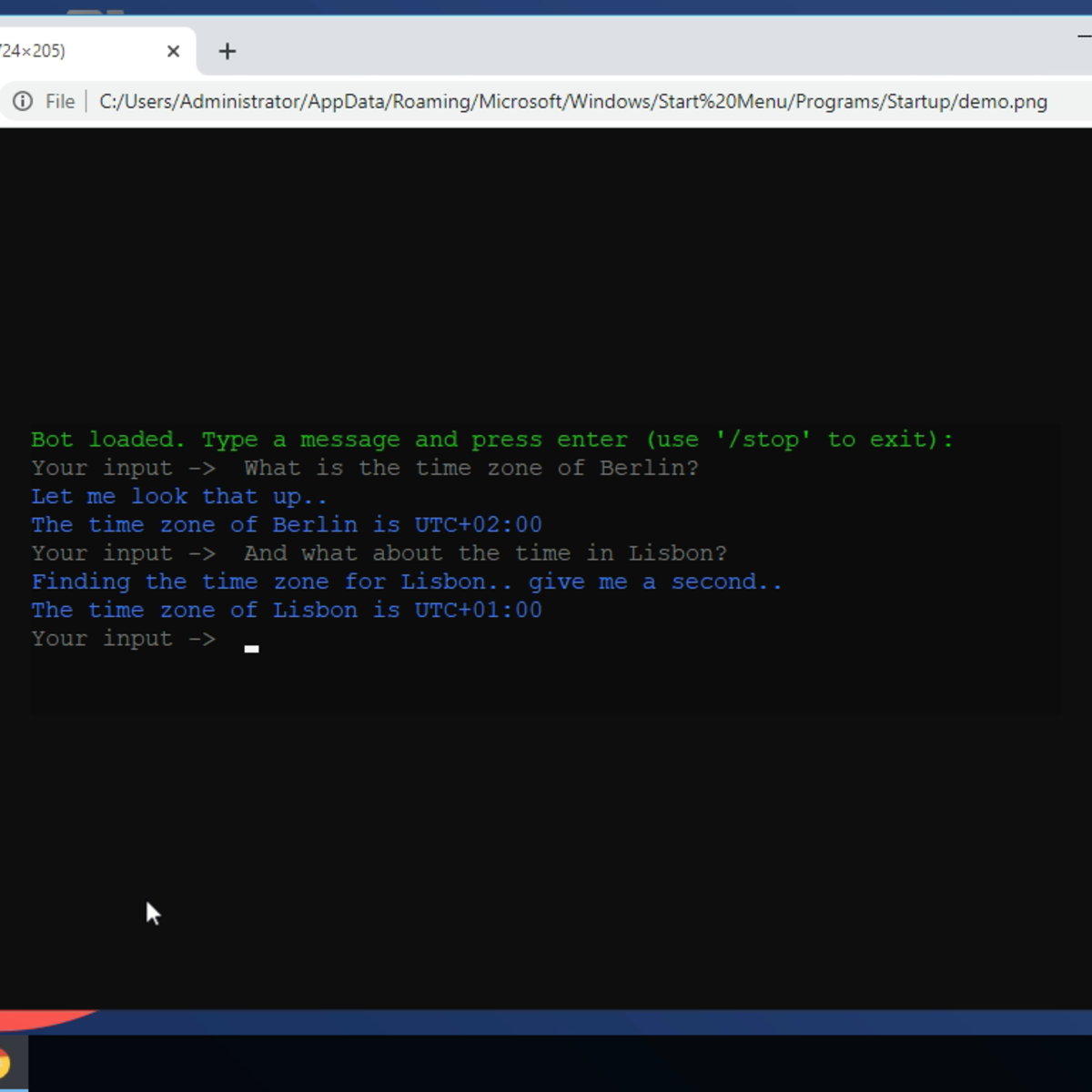

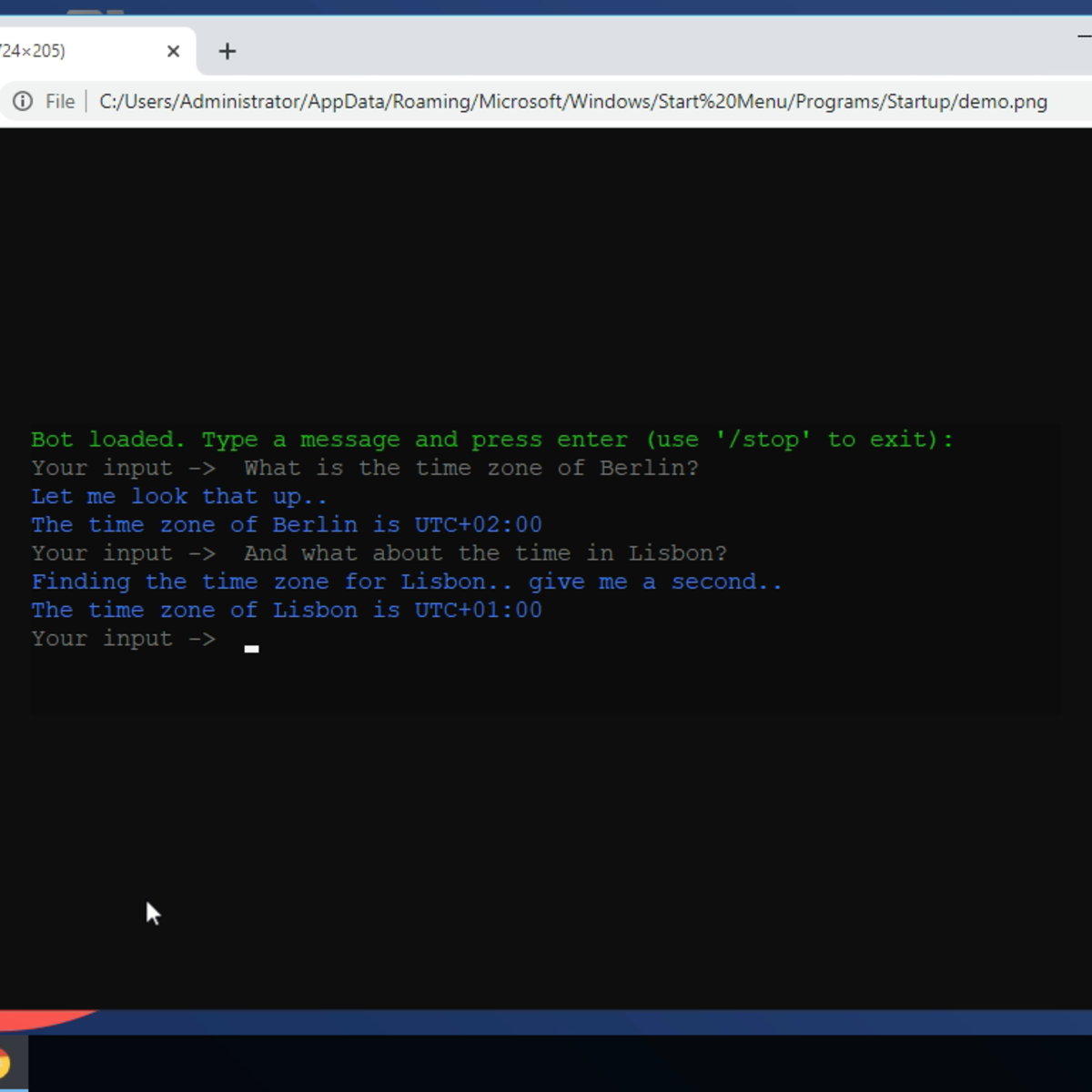

Create Your First Chatbot with Rasa and Python

In this 2-hour-long project-based course, you will learn to create chatbots with Rasa and Python. Rasa is a framework for developing AI-powered, industrial-grade chatbots. It is powerful and is used by developers worldwide to create chatbots and contextual assistants. In this project, we will cover some of the most important basic aspects of the Rasa framework and of chatbot development. Once you have completed this project, you will be able to create simple AI-powered chatbots on your own.

This project is ideal for programmers who want to get started with chatbot development. You don't need any machine learning or prior chatbot development experience. However, you should be familiar with Python programming.

Note: This course works best for learners who are based in the North America region. We’re currently working on providing the same experience in other regions.

© 2024 BoostGrad | All rights reserved