
Data Management Courses - Page 2


Read an Input File with COBOL

In this project, you will use COBOL to read the data records from a sequential file. You will code, compile, and run programs using OpenCobolIDE, a PC-based COBOL IDE. Because COBOL is often used with large amounts of data, the ability to process files (reading their records as input and writing records as output) is a critical skill for any COBOL programmer.
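The course works in COBOL, but the core read loop looks much the same in any language. As a rough, language-neutral illustration, here is a minimal Python sketch. It reads a sequential file one fixed-length record at a time until end of file (similar in spirit to a COBOL `READ ... AT END` loop) and splits each record into fields by position, the way a COBOL record description does. The 32-character customer layout, the field names, and the `read_records` and `fixed_width` helpers are hypothetical and are not taken from the course.

```python
import io
from dataclasses import dataclass
from typing import Iterator, TextIO

# Hypothetical fixed-width layout, loosely mirroring a COBOL record description:
#   columns 1-5   customer ID       (like PIC X(5))
#   columns 6-25  customer name     (like PIC X(20))
#   columns 26-32 balance in cents  (like PIC 9(7))
@dataclass
class CustomerRecord:
    customer_id: str
    name: str
    balance_cents: int

def read_records(stream: TextIO) -> Iterator[CustomerRecord]:
    """Read records sequentially until end of file, one record per line."""
    for line in stream:
        record = line.rstrip("\n")
        if not record:
            continue  # skip blank lines
        yield CustomerRecord(
            customer_id=record[0:5],
            name=record[5:25].rstrip(),  # drop the space padding
            balance_cents=int(record[25:32]),
        )

def fixed_width(cust_id: str, name: str, cents: int) -> str:
    """Build one 32-character record in the hypothetical layout (demo only)."""
    return f"{cust_id:<5}{name:<20}{cents:07d}\n"

# In-memory stand-in for a sequential file; a real program would open a file on disk.
sample = io.StringIO(
    fixed_width("00001", "Ada Lovelace", 12550)
    + fixed_width("00002", "Grace Hopper", 100000)
)
records = list(read_records(sample))
for r in records:
    print(r.customer_id, r.name, r.balance_cents)
```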

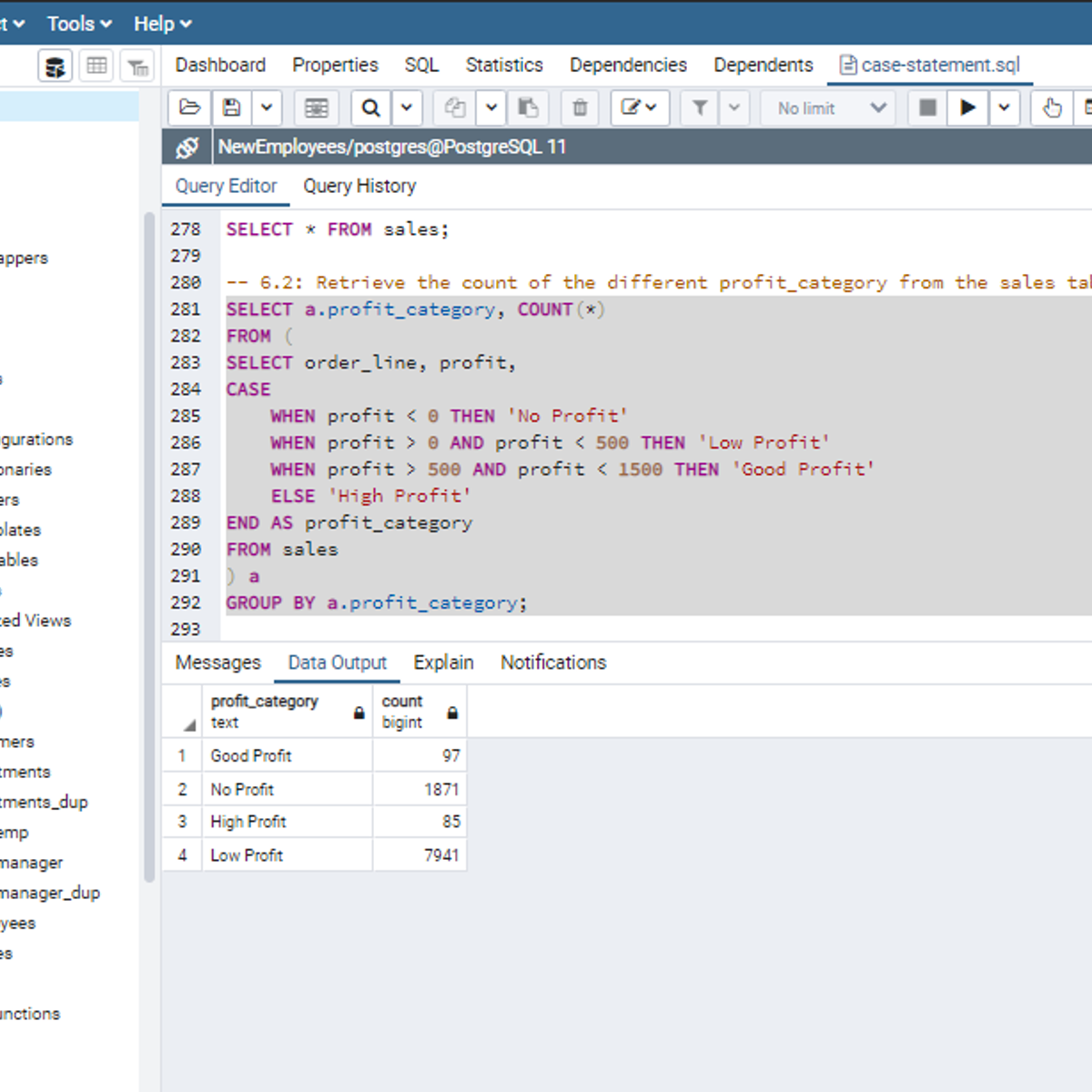

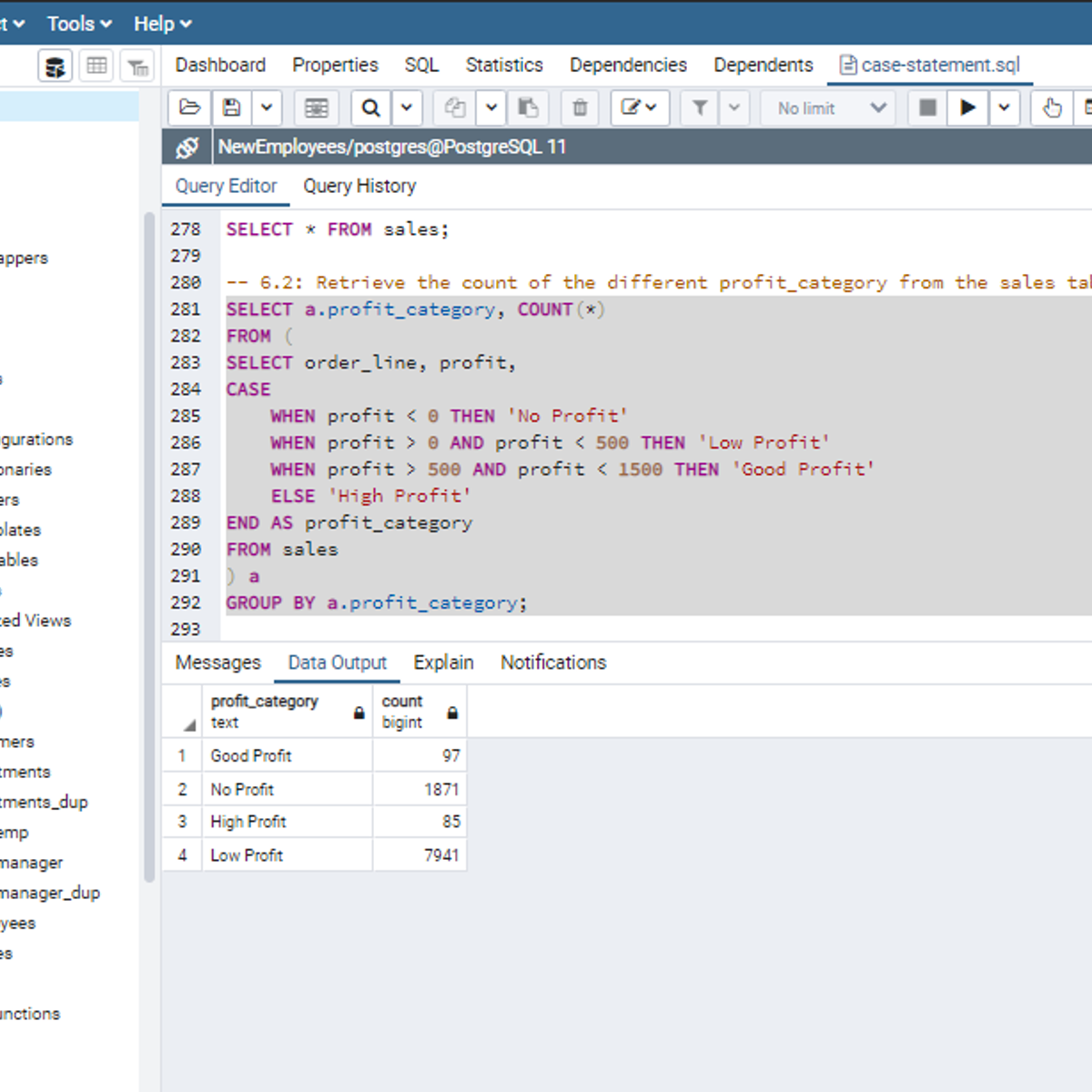

SQL CASE Statements

Welcome to this project-based course, SQL CASE Statements. In this project, you will learn how to use SQL CASE statements to query tables in a database.

By the end of this 2-hour project, you will be able to write simple CASE statements to retrieve the desired results from a database. You will then move systematically to more complex CASE statements, including using the CASE clause together with aggregate functions and SQL joins. You will also learn how to use the CASE clause to transpose the result of a query.

This hands-on project uses PostgreSQL as the database management system (DBMS), so prior experience with PostgreSQL is required. CASE statements are also an advanced SQL concept, so a good foundation in writing SQL queries and performing joins is vital to complete this project.

If you are not yet familiar with writing SQL queries and joins and want to learn these concepts, start with my previous guided projects “Querying Databases using SQL SELECT statement”, “Performing Data Aggregation using SQL Aggregate Functions”, and “Mastering SQL Joins”. I taught these guided projects using PostgreSQL, so taking them will give you the prerequisites for this project on SQL CASE Statements. However, if you are comfortable writing queries in PostgreSQL, please join me on this wonderful ride! Let’s get our hands dirty!
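For readers new to the syntax, here is a hedged sketch of two of the CASE patterns described above: a searched CASE that labels each row, and CASE inside `SUM` to transpose rows into columns. It uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL, on the assumption that these standard-SQL CASE forms behave the same way in both. The `sales` table and its data are made up for illustration.

```python
import sqlite3

# Hypothetical table and data; SQLite stands in for PostgreSQL here.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (region TEXT, quarter TEXT, amount INTEGER);
INSERT INTO sales VALUES
  ('North', 'Q1', 120), ('North', 'Q2', 80),
  ('South', 'Q1', 40),  ('South', 'Q2', 200);
""")

# 1. Searched CASE: attach a label to every row based on its amount.
labelled = conn.execute("""
SELECT region, quarter, amount,
       CASE WHEN amount >= 100 THEN 'high'
            WHEN amount >= 50  THEN 'medium'
            ELSE 'low'
       END AS band
FROM sales
ORDER BY region, quarter
""").fetchall()

# 2. CASE inside an aggregate: transpose quarters from rows into columns.
pivot = conn.execute("""
SELECT region,
       SUM(CASE WHEN quarter = 'Q1' THEN amount ELSE 0 END) AS q1_total,
       SUM(CASE WHEN quarter = 'Q2' THEN amount ELSE 0 END) AS q2_total
FROM sales
GROUP BY region
ORDER BY region
""").fetchall()

for row in labelled:
    print(row)
for row in pivot:
    print(row)
```

The pivot query returns one row per region, `('North', 120, 80)` and `('South', 40, 200)`, turning the two quarter rows for each region into two columns.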

Making the Case for Robotic Process Automation

Overview

Robotic Process Automation (RPA) is reshaping the accounting and finance profession. According to McKinsey research, 40% of transactional accounting work was expected to be automated by 2020, a shift predicted to affect 230 million knowledge workers, or 9% of the global workforce.

Driven by the need to stay competitive, decrease costs, and increase efficiency, RPA is quickly making a significant impact on the profession. Companies are no longer asking "if" they should implement RPA, but "when".

Short Description:

• Robotic Process Automation (RPA) can transform business processes by eliminating the mundane, time-consuming manual tasks that professionals complete, giving them more time to focus on critical thinking. Through use cases, this course shows accounting and finance professionals worldwide how RPA can be used to decrease errors and increase productivity.

Course Description / Overarching Learning Goal

This course is intended to give accounting and financial professionals practical literacy in robotic process automation through a real-world, relevant data preparation use case. It will help you identify potential uses of RPA, along with its benefits and considerations. It will also help you make the business case for automation by assessing requirements, defining a proof of value, and measuring and validating the return on investment (ROI).

Developing Data Models with LookML

This course empowers you to develop scalable, performant LookML (Looker Modeling Language) models that provide your business users with the standardized, ready-to-use data that they need to answer their questions. Upon completing this course, you will be able to start building and maintaining LookML models to curate and manage data in your organization’s Looker instance.

Analytics as a Service for Data Sharing Partners

This is a self-paced lab that takes place in the Google Cloud console. In this lab you will learn how Authorized Views in BigQuery can be shared and consumed to create customer-specific dashboards.

Use Bash Scripting on Linux to Execute Common Commands

By the end of this project, you will use a bash script to execute commands and observe their output on a Linux system.

Bash, or Bourne Again Shell, is more than a shell running in a terminal on Linux; it is a programming language that is used to create powerful programs called shell scripts. Shell scripts are often used to capture common repetitive tasks so they can be executed without the need to memorize multiple individual commands.

Introduction to Data Engineering

This course introduces the core concepts, processes, and tools you need to gain a foundational knowledge of data engineering. You will gain an understanding of the modern data ecosystem and the roles that Data Engineers, Data Scientists, and Data Analysts play in it.

The Data Engineering Ecosystem includes several components, beginning with disparate data types, formats, and sources of data. Data Pipelines gather data from multiple sources, transform it into analytics-ready data, and make it available to data consumers for analytics and decision-making. Data repositories, such as relational and non-relational databases, data warehouses, data marts, data lakes, and big data stores, process and store this data. Data Integration Platforms combine disparate data into a unified view for data consumers. You will learn about each of these components in this course, as well as about Big Data and the use of some Big Data processing tools.

A typical Data Engineering lifecycle includes architecting data platforms, designing data stores, and gathering, importing, wrangling, querying, and analyzing data. It also includes performance monitoring and fine-tuning to ensure that systems perform at optimal levels. In this course, you will learn about the data engineering lifecycle, as well as about security, governance, and compliance.

Data Engineering is recognized as one of the fastest-growing fields today. This course discusses the career opportunities available in the field and the different paths you can take to enter it.

The course also includes hands-on labs that guide you through creating an IBM Cloud Lite account, provisioning a database instance, loading data into it, and performing some basic queries that help you understand your dataset.
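To make the pipeline stages concrete (gather data from multiple sources, transform it into analytics-ready data, and make it available to consumers), here is a minimal Python sketch. The two in-memory sources, the `orders` schema, and the `extract`/`transform`/`load` function names are illustrative assumptions; a real pipeline would read from files, APIs, or databases and would typically load into a data warehouse rather than an in-memory SQLite database.

```python
import csv
import io
import json
import sqlite3
from decimal import Decimal

# Hypothetical sources in two different formats: a CSV export and a JSON feed.
CSV_SOURCE = "order_id,amount\n1,19.99\n2,5.00\n"
JSON_SOURCE = '[{"id": 3, "total": "42.50"}]'

def extract():
    """Gather raw records from each source."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    json_rows = json.loads(JSON_SOURCE)
    return csv_rows, json_rows

def transform(csv_rows, json_rows):
    """Map both formats onto one analytics-ready schema: (order_id, amount_cents)."""
    # Decimal avoids floating-point rounding when converting money to cents.
    unified = [(int(r["order_id"]), int(Decimal(r["amount"]) * 100)) for r in csv_rows]
    unified += [(int(r["id"]), int(Decimal(r["total"]) * 100)) for r in json_rows]
    return unified

def load(rows, conn):
    """Make the unified rows available in a relational store for data consumers."""
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount_cents INTEGER NOT NULL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(*extract()), conn)
summary = conn.execute("SELECT COUNT(*), SUM(amount_cents) FROM orders").fetchone()
print(summary)
```

Keeping each stage a separate function mirrors the separation described in the course outline: sources can change format without touching the load step, and consumers only ever see the unified `orders` table.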

Advanced Features with Relational Database Tables Using SQLiteStudio

In this course, you’ll increase your knowledge of and experience with relational tables as you explore alternative ways of getting data into tables. You’ll also look at some of the advanced features that can give relational tables superpowers. As you learn about the new features, you’ll use SQLiteStudio to apply them to your tables. These features will enable your tables to manage data more efficiently while keeping your data safe and accurate.

Tables are great for data storage. The concept of organizing data in rows and columns is familiar to most people. Accountants use spreadsheets to organize financial data, making it easier to budget and track expenses. Parents use lists with columns to track their family’s schedules so that everyone gets to participate in outside activities. The Internal Revenue Service even gets in the game by using tax tables to provide tax amounts for a variety of incomes. A simple grocery list is tabular, too: each row is an item, with one column for the item's name or description and a second column for the quantity needed. It’s no surprise that database designers like to use tables in a relational database to organize and store data.

In the Design and Create a Relational Database Table Using SQLiteStudio course, you learned about tables. You created and populated a relational table using the SQLiteStudio database management system. That was a great beginning. Now it's time for the next step!
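The course description does not say exactly which advanced features it covers. As one plausible example of features that keep data safe and accurate, here is a sketch of SQLite constraints (`NOT NULL`, `DEFAULT`, `CHECK`, and a foreign key), run through Python's `sqlite3` module rather than the SQLiteStudio GUI. The `category` and `item` tables and the `try_insert` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is on for the connection.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE category (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE item (
    id          INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    quantity    INTEGER NOT NULL DEFAULT 1 CHECK (quantity > 0),
    category_id INTEGER NOT NULL REFERENCES category(id)
);
INSERT INTO category (id, name) VALUES (1, 'Produce');
""")

def try_insert(sql, params=()):
    """Attempt an insert and report whether the table's constraints accepted it."""
    try:
        conn.execute(sql, params)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"

results = [
    # Valid row: quantity falls back to the DEFAULT of 1.
    try_insert("INSERT INTO item (name, category_id) VALUES (?, ?)", ("Apples", 1)),
    # Violates the CHECK constraint (quantity must be positive).
    try_insert("INSERT INTO item (name, quantity, category_id) VALUES (?, ?, ?)",
               ("Pears", 0, 1)),
    # Violates the foreign key (there is no category 99).
    try_insert("INSERT INTO item (name, category_id) VALUES (?, ?)", ("Milk", 99)),
]
for r in results:
    print(r)
```

Because foreign key enforcement in SQLite is off by default, a connection that omits the `PRAGMA foreign_keys = ON` line would accept the third row.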

Exploratory Data Analysis Using AI Platform

This is a self-paced lab that takes place in the Google Cloud console. Learn how to analyze a dataset stored in BigQuery, using AI Platform to perform queries and to present the data with various statistical plotting techniques.

Troubleshooting and Solving Data Join Pitfalls

This is a self-paced lab that takes place in the Google Cloud console. This lab focuses on how to reverse-engineer the relationships between data tables and the pitfalls to avoid when joining them together.
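A classic join pitfall is fan-out: if the join key is not unique on one side, each matching row is repeated and aggregates are inflated. The sketch below uses Python's `sqlite3` as a stand-in for BigQuery to show the effect, along with a simple key-uniqueness check, which is one way to reverse-engineer a relationship before joining. The `orders` and `addresses` tables are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount INTEGER);
CREATE TABLE addresses (customer_id INTEGER, city TEXT);
INSERT INTO orders VALUES (1, 10, 100), (2, 20, 50);
-- Customer 10 has two address rows, so customer_id is NOT unique here.
INSERT INTO addresses VALUES (10, 'Paris'), (10, 'Lyon'), (20, 'Rome');
""")

# Ground truth, computed on the orders table alone.
true_total = conn.execute("SELECT SUM(amount) FROM orders").fetchone()[0]

# Pitfall: a one-to-many join repeats each order once per matching address.
joined_total = conn.execute("""
SELECT SUM(o.amount)
FROM orders AS o
JOIN addresses AS a ON a.customer_id = o.customer_id
""").fetchone()[0]

# Reverse-engineer the relationship: is the join key unique on the right side?
duplicate_keys = conn.execute("""
SELECT customer_id, COUNT(*) FROM addresses
GROUP BY customer_id
HAVING COUNT(*) > 1
""").fetchall()

print(true_total, joined_total, duplicate_keys)
```

Here the true total is 150, but the joined sum is 250 because order 1 matches two address rows. Running the uniqueness check first flags customer 10 before the inflated number reaches a report.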


© 2024 BoostGrad | All rights reserved