Machine Learning Courses - Page 44

Machine Learning: Concepts and Applications

This course gives you a comprehensive introduction to both the theory and practice of machine learning. You will learn to use Python along with industry-standard libraries and tools, including Pandas, Scikit-learn, and TensorFlow, to ingest, explore, and prepare data for modeling, and then to train and evaluate models using a wide variety of techniques. Those techniques include linear regression with ordinary least squares, logistic regression, support vector machines, decision trees and ensembles, clustering, principal component analysis, hidden Markov models, and deep learning.
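
As a small illustration of one technique named above, ordinary least squares for a single feature can be sketched in plain Python. The function name `fit_ols` and the toy numbers are assumptions made for this example; they are not taken from the course materials, which use libraries such as Scikit-learn.

```python
def fit_ols(xs, ys):
    """Return (slope, intercept) minimizing the sum of squared residuals."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x around its mean; zero means the slope is undefined.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    # Co-variation of x and y around their means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical toy data lying exactly on the line y = 2x + 1.
slope, intercept = fit_ols([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
```

With more than one feature, the same idea is usually solved with a linear algebra routine rather than these closed-form sums.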

A key feature of this course is that you not only learn how to apply these techniques, you also learn the conceptual basis underlying them so that you understand how they work, why you are doing what you are doing, and what your results mean. The course also features real-world datasets, drawn primarily from the realm of public policy. It is based on an introductory machine learning course offered to graduate students at the University of Chicago and will serve as a strong foundation for deeper and more specialized study.

Introduction to Machine Learning in Production

In the first course of the Machine Learning Engineering for Production Specialization, you will identify the various components of an ML production system and design one end-to-end: project scoping, data needs, modeling strategies, and deployment constraints and requirements. You will also learn how to establish a model baseline, address concept drift, and prototype the process for developing, deploying, and continuously improving a productionized ML application.

Understanding machine learning and deep learning concepts is essential, but if you’re looking to build an effective AI career, you need production engineering capabilities as well. Machine learning engineering for production combines the foundational concepts of machine learning with the functional expertise of modern software development and engineering roles to help you develop production-ready skills.

Week 1: Overview of the ML Lifecycle and Deployment

Week 2: Selecting and Training a Model

Week 3: Data Definition and Baseline

Integrating BigQuery ML with Dialogflow ES Chatbot

This is a self-paced lab that takes place in the Google Cloud console. In this lab you will train a simple machine learning model for predicting helpdesk response time using BigQuery Machine Learning.

Generative Deep Learning with TensorFlow

In this course, you will:

a) Learn neural style transfer using transfer learning: extract the content of an image (e.g., a swan) and the style of a painting (e.g., cubist or impressionist), and combine the content and style into a new image.

b) Build simple AutoEncoders on the familiar MNIST dataset, and more complex deep and convolutional architectures on the Fashion MNIST dataset, understand the difference in results of the DNN and CNN AutoEncoder models, identify ways to de-noise noisy images, and build a CNN AutoEncoder using TensorFlow to output a clean image from a noisy one.

c) Explore Variational AutoEncoders (VAEs) to generate entirely new data, and generate anime faces to compare them against reference images.

d) Learn about GANs: their invention, properties, and architecture, and how they differ from VAEs. Understand the function of the generator and the discriminator within the model, the concept of two training phases, and the role of introduced noise, and build your own GAN that can generate faces.

The DeepLearning.AI TensorFlow: Advanced Techniques Specialization introduces the features of TensorFlow that provide learners with more control over their model architecture, and gives them the tools to create and train advanced ML models.

This Specialization is for early and mid-career software and machine learning engineers with a foundational understanding of TensorFlow who are looking to expand their knowledge and skill set by learning advanced TensorFlow features to build powerful models.

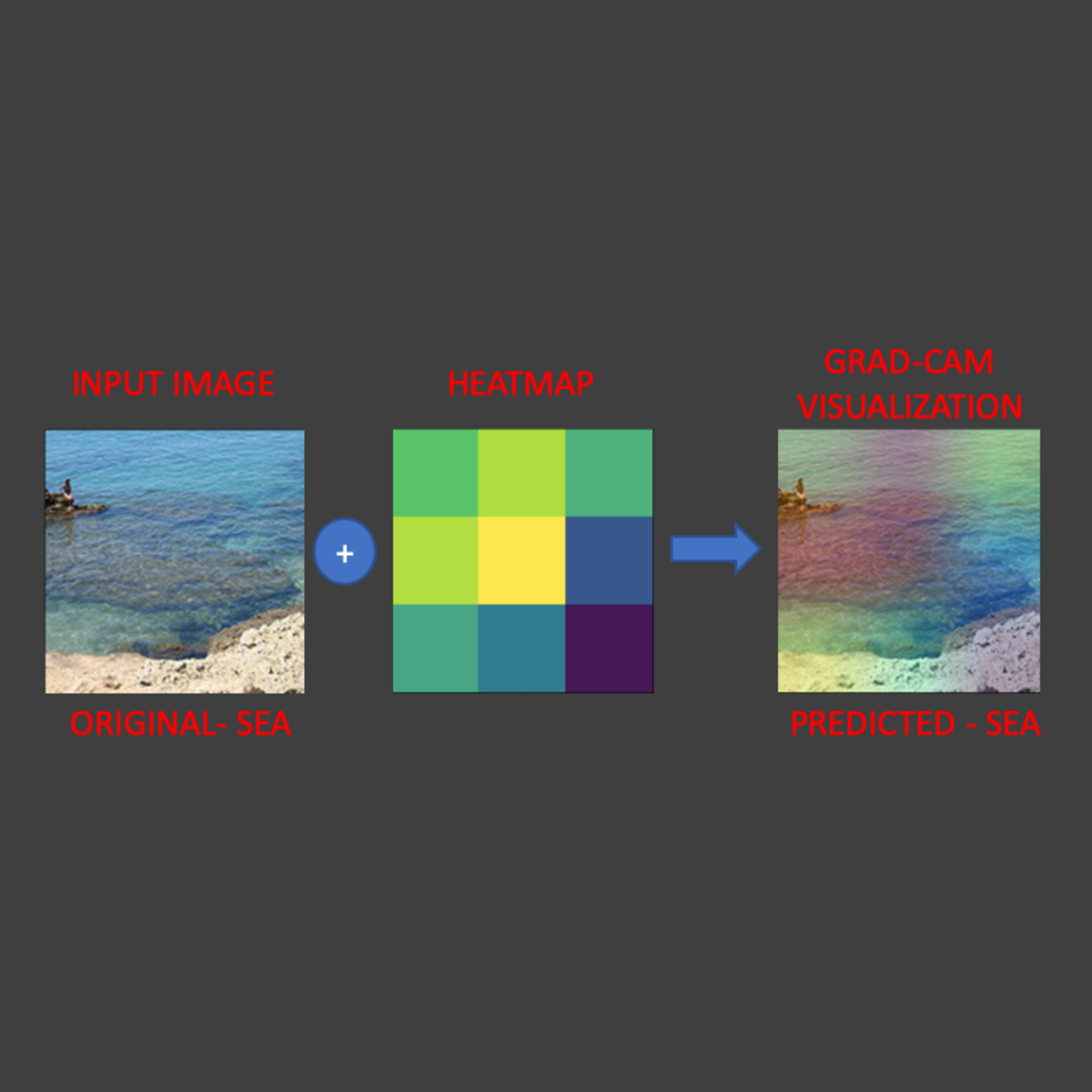

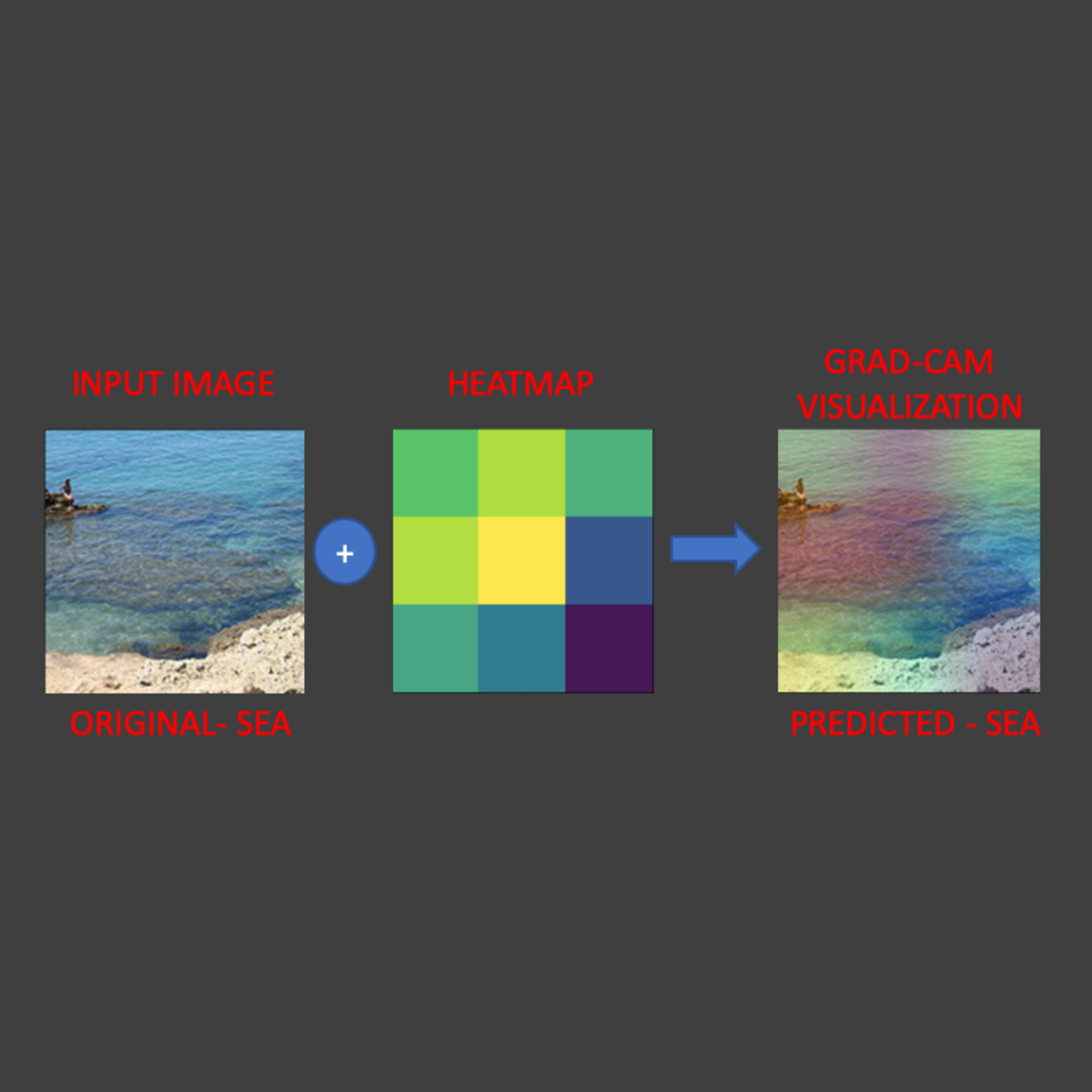

Explainable AI: Scene Classification and GradCam Visualization

In this 2-hour hands-on project, we will train a deep learning model to predict the type of scenery in images. In addition, we will use a technique known as Grad-CAM to help explain how AI models make their predictions. In practice, this project could be used to detect the type of scenery in satellite images.

Interpretable machine learning applications: Part 3

In this 50-minute project-based course, you will learn how to apply a specific explanation technique and algorithm to predictions (classifications) made by inherently complex machine learning models such as artificial neural networks. The technique is based on retrieving cases similar to the individuals for whom we wish to provide explanations. Because this explanation technique is model-agnostic and treats the prediction model as a "black box", the guided project can be useful for decision makers in business environments (e.g., loan officers at a bank) and for public organizations interested in using trusted machine learning applications to automate or inform decision-making processes.

The main learning objectives are as follows:

Learning objective 1: You will be able to define, train, and evaluate a classifier based on an artificial neural network (Sequential model), using Keras as the API for TensorFlow. The prediction model will be trained and tested with the HELOC dataset of approved and rejected mortgage applications.

Learning objective 2: You will be able to generate explanations based on similar profiles for a mortgage applicant predicted as having either "Good" or "Bad" risk performance.

Learning objective 3: You will be able to generate contrastive explanations based on feature and pertinent negative values, i.e., what an applicant should change in order to turn a "rejected" application into an "approved" one.
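
The idea of explaining a prediction by retrieving similar cases can be sketched with a plain nearest-neighbour lookup. This is only a minimal illustration under stated assumptions: the function `nearest_cases`, the two-feature profiles, and the labels are hypothetical, and the course's actual algorithm and dataset features may differ.

```python
import math


def nearest_cases(query, cases, k=2):
    """Return the k (distance, label, features) tuples closest to `query`."""
    scored = []
    for features, label in cases:
        # Euclidean distance between the query profile and a stored case.
        distance = math.dist(query, features)
        scored.append((distance, label, features))
    scored.sort(key=lambda item: item[0])
    return scored[:k]


# Hypothetical, already-scaled applicant profiles paired with the label
# that a black-box model predicted for each of them.
cases = [
    ((0.9, 0.1), "Good"),
    ((0.8, 0.2), "Good"),
    ((0.2, 0.9), "Bad"),
    ((0.1, 0.8), "Bad"),
]
neighbours = nearest_cases((0.85, 0.15), cases, k=2)
labels = [label for _, label, _ in neighbours]
```

The retrieved neighbours serve as the explanation: the applicant is shown profiles that resemble theirs along with the outcome predicted for those profiles, without inspecting the model's internals.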

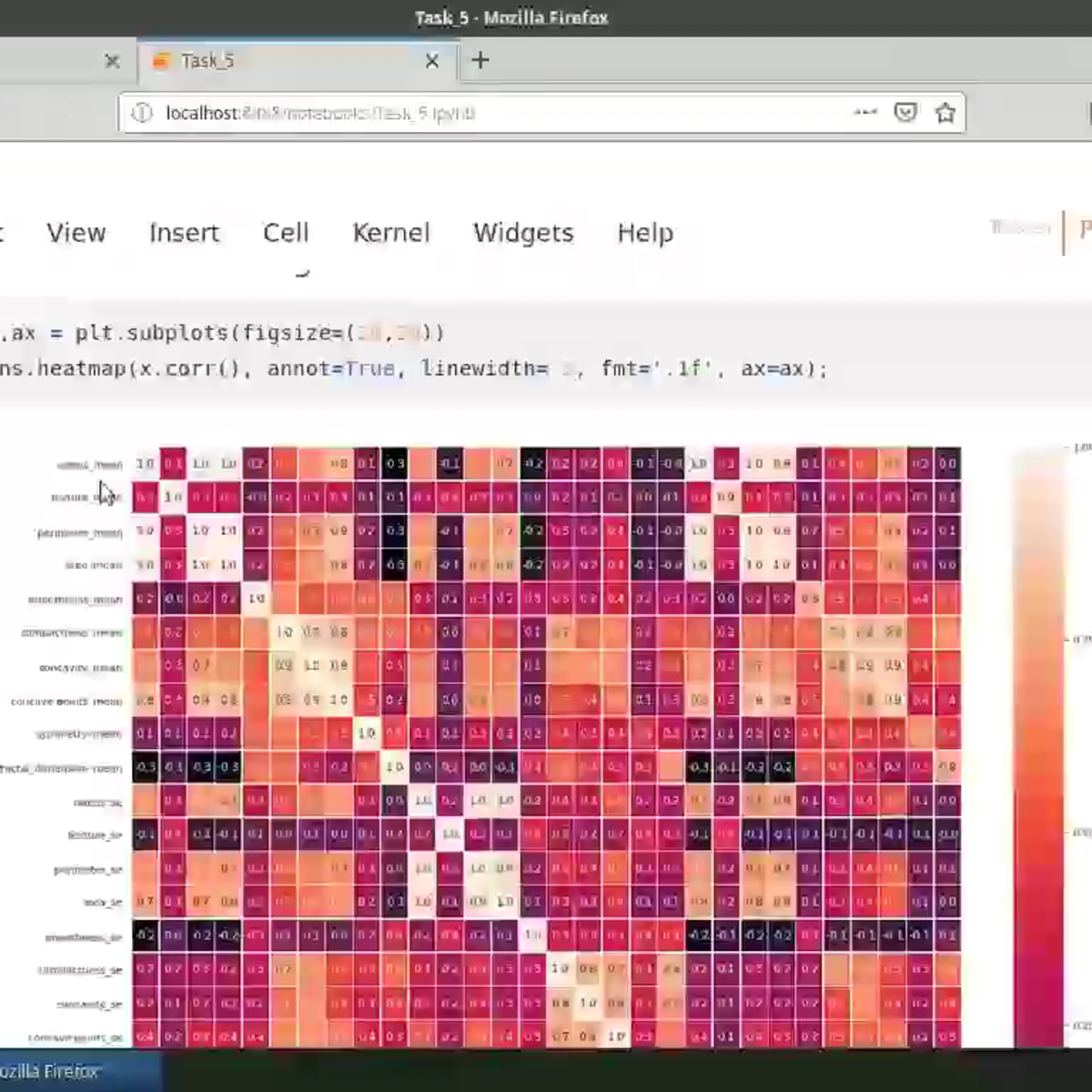

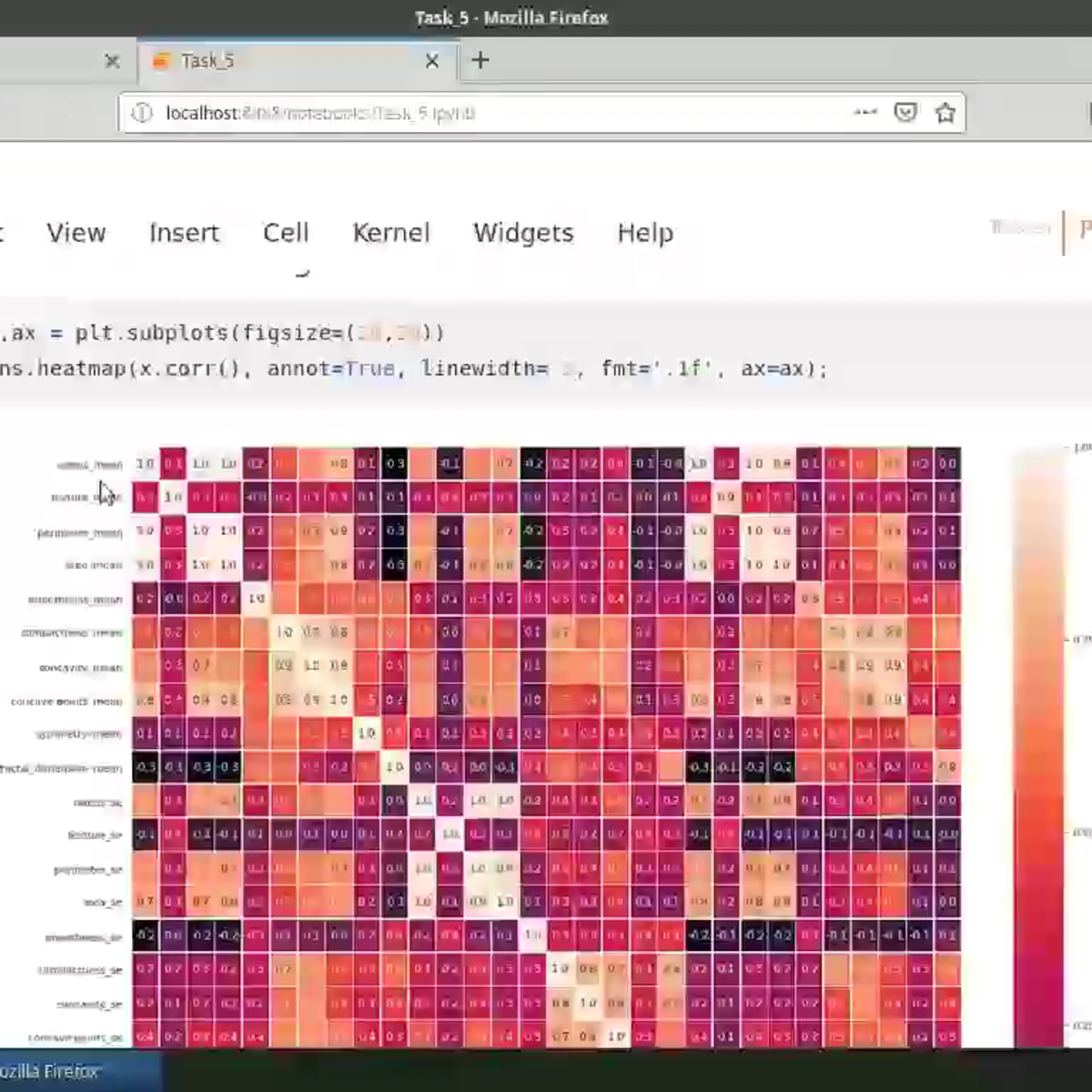

Exploratory Data Analysis with Seaborn

Producing visualizations is an important first step in exploring and analyzing real-world data sets. As such, visualization is an indispensable method in any data scientist's toolbox. It is also a powerful tool for identifying problems in analyses and for illustrating results. In this project-based course, we will use the statistical data visualization library Seaborn to discover and explore the relationships in the Breast Cancer Wisconsin (Diagnostic) Data Set. We will cover key concepts in exploratory data analysis (EDA), using visualizations to identify and interpret inherent relationships in the data set. We will produce various chart types, including histograms, violin plots, box plots, joint plots, pair grids, and heatmaps, and we will customize plot aesthetics and apply faceting methods to visualize higher-dimensional data.

This course runs on Coursera's hands-on project platform called Rhyme. On Rhyme, you do projects in a hands-on manner in your browser. You will get instant access to pre-configured cloud desktops containing all of the software and data you need for the project. Everything is already set up directly in your internet browser so you can just focus on learning. For this project, you’ll get instant access to a cloud desktop with Python, Jupyter, and scikit-learn pre-installed.

Notes:

- You will be able to access the cloud desktop 5 times. However, you will be able to access the instruction videos as many times as you want.

- This course works best for learners based in North America. We’re currently working on providing the same experience in other regions.

Neural Networks and Random Forests

In this course, we will build on our knowledge of basic models and explore advanced AI techniques. We’ll start with a deep dive into neural networks, building our knowledge from the ground up by examining their structure and properties. Then we’ll code some simple neural network models and learn to avoid overfitting through regularization and other hyperparameter tricks. After a project predicting the likelihood of heart disease given health characteristics, we’ll move to random forests. We’ll describe the differences between the two techniques and explore their differing origins in detail. Finally, we’ll complete a project predicting similarity between patients using random forests.

Introduction to PyMC3 for Bayesian Modeling and Inference

The objective of this course is to introduce PyMC3 for Bayesian modeling and inference. Attendees will start by learning the basics of PyMC3 and then learn how to perform scalable inference for a variety of problems. This is the final course in a specialization of three courses. Python and Jupyter notebooks will be used throughout this course to illustrate and perform Bayesian modeling with PyMC3. The course website is located at https://sjster.github.io/introduction_to_computational_statistics/docs/index.html. The course notebooks can be downloaded from this website by following the instructions at https://sjster.github.io/introduction_to_computational_statistics/docs/getting_started.html.

The instructor for this course will be Dr. Srijith Rajamohan.

Classify Radio Signals with PyTorch

In this 2-hour guided project, you will load a pretrained, state-of-the-art CNN and train it in PyTorch to classify radio signals from spectrogram images. The data consists of spectrogram images (a spectrogram is a visual representation of a signal's frequency content over time), with target classes such as Squiggle, Noises, and Narrowband. You will apply spectrogram augmentation to enlarge the set of training images for the classification task. You will also write train and evaluation functions that make the training loop easier to write. Lastly, you will use the best trained model to classify radio signals given any 2D spectrogram image as input.
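
One common form of spectrogram augmentation is to blank out a random band of frequency bins and a random band of time steps. The sketch below illustrates that idea in plain Python on a nested list; the function `mask_spectrogram`, its parameters, and the toy input are assumptions for illustration, and the project may use a different augmentation scheme built on PyTorch tensors.

```python
import random


def mask_spectrogram(spec, max_freq_width, max_time_width, rng):
    """Return a copy of `spec` (rows = frequency bins, columns = time steps)
    with one random frequency band and one random time band set to 0.0.

    Assumes max_freq_width <= number of rows and
    max_time_width <= number of columns.
    """
    n_freq = len(spec)
    n_time = len(spec[0])
    out = [row[:] for row in spec]  # copy so the input stays untouched

    # Frequency masking: zero out a block of consecutive rows.
    f_width = rng.randint(0, max_freq_width)
    f_start = rng.randint(0, n_freq - f_width)
    for f in range(f_start, f_start + f_width):
        out[f] = [0.0] * n_time

    # Time masking: zero out a block of consecutive columns.
    t_width = rng.randint(0, max_time_width)
    t_start = rng.randint(0, n_time - t_width)
    for row in out:
        for t in range(t_start, t_start + t_width):
            row[t] = 0.0
    return out


rng = random.Random(0)  # seeded so repeated runs give the same masks
spec = [[1.0] * 8 for _ in range(6)]  # hypothetical 6 x 8 spectrogram
augmented = mask_spectrogram(spec, max_freq_width=2, max_time_width=3, rng=rng)
```

Because the masks are drawn at random on each call, the same training image yields a slightly different input each epoch, which is the purpose of augmentation.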

© 2024 BoostGrad | All rights reserved