3D Reconstruction - Single Viewpoint

Overview

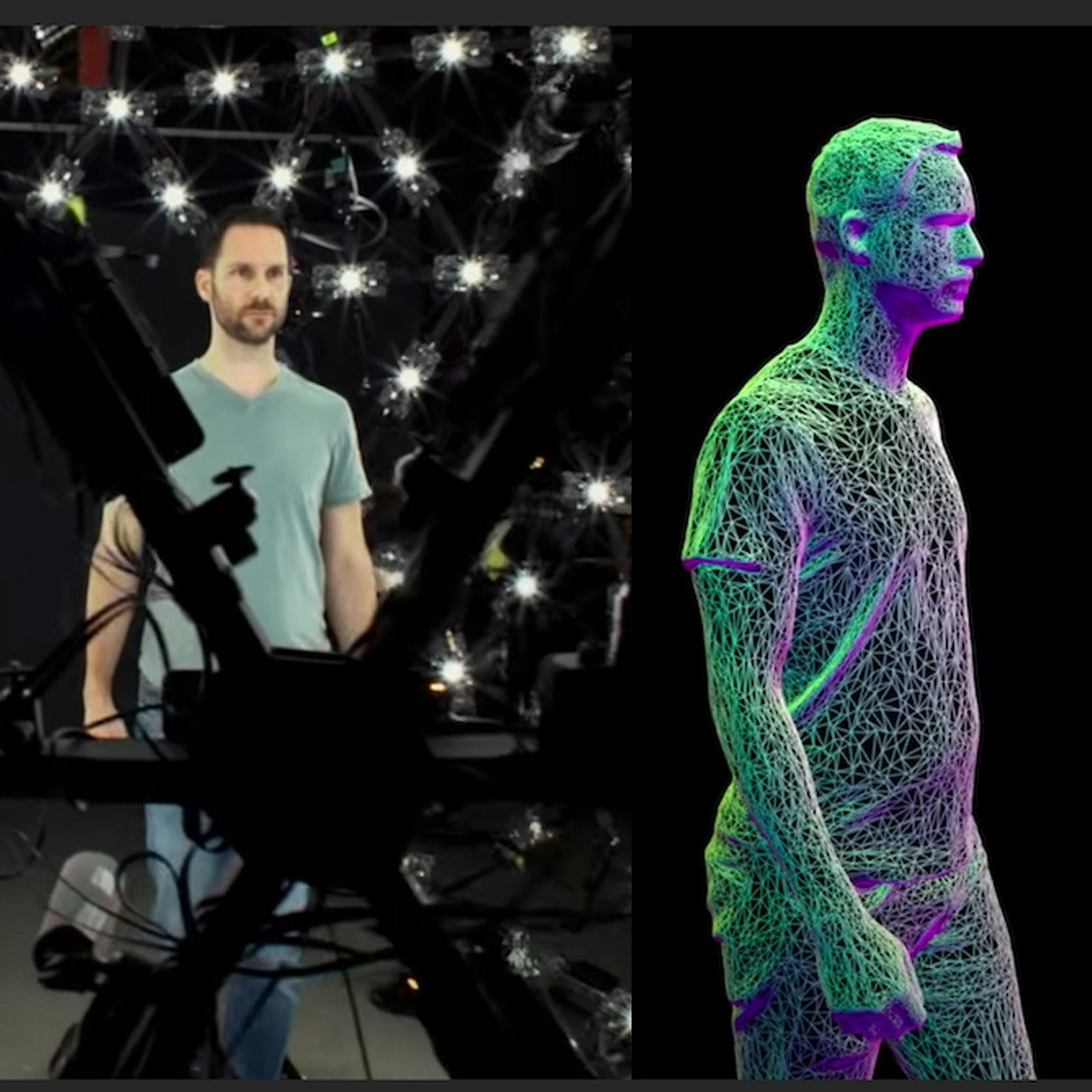

This course focuses on recovering the 3D structure of a scene from its 2D images. In particular, we are interested in the 3D reconstruction of a rigid scene from images taken by a stationary camera (a single viewpoint). The problem is interesting because the images must capture complementary information even though the scene is rigid and the camera is fixed. To this end, we explore several ways of capturing images so that each image provides additional information about the scene.

To estimate scene properties (depth, surface orientation, material properties, etc.), we first define several important radiometric concepts: light source intensity, surface illumination, surface brightness, image brightness, and surface reflectance. Then, we tackle the challenging problem of shape from shading, that is, recovering the shape of a surface from its shading in a single image. Next, we show that if multiple images of a scene of known reflectance are taken while changing the illumination direction, the surface normal at each scene point can be computed. This method, called photometric stereo, provides a dense surface normal map that can be integrated to obtain surface shape. We then discuss depth from defocus, which uses the limited depth of field of the camera to estimate scene structure: from a small number of images taken with different focus settings of the lens, a dense depth map of the scene is recovered.

Finally, we present a suite of techniques that use active illumination (the projection of light patterns onto the scene) to obtain precise 3D reconstructions of the scene. These active illumination methods are the workhorse of factory automation, where they are used on manufacturing lines to assemble products and inspect their visual quality. They are also used extensively in other domains such as driverless cars, robotics, surveillance, medical imaging, and special effects in movies.
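To make the photometric stereo idea mentioned above more concrete, the following is a minimal sketch, not the course's own implementation. It assumes a Lambertian (ideal diffuse) surface, distant light sources with known unit directions, and no shadows or specular highlights. Under those assumptions, each pixel's brightness is the dot product of the light direction with the albedo-scaled normal, so three or more images give a small least-squares problem per pixel. The function name `photometric_stereo` and the synthetic data are hypothetical and chosen only for illustration.

```python
import numpy as np


def photometric_stereo(images, light_dirs):
    """Estimate per-pixel unit normals and albedo (Lambertian assumption).

    images:     array of shape (k, h, w), one grayscale image per light.
    light_dirs: array of shape (k, 3), unit direction toward each light.
    """
    images = np.asarray(images, dtype=float)
    light_dirs = np.asarray(light_dirs, dtype=float)
    k, h, w = images.shape

    # Stack the images so that each column holds the k brightness
    # measurements of a single pixel.
    intensities = images.reshape(k, h * w)

    # Lambertian model: I = L @ g, where g = albedo * normal.
    # Solve for g at every pixel at once in the least-squares sense.
    g, *_ = np.linalg.lstsq(light_dirs, intensities, rcond=None)  # (3, h*w)

    # The length of g is the albedo; its direction is the unit normal.
    albedo = np.linalg.norm(g, axis=0)
    normals = np.divide(g, albedo, out=np.zeros_like(g), where=albedo > 0)

    return normals.T.reshape(h, w, 3), albedo.reshape(h, w)


# Synthetic check: a flat 2x2 patch with one known normal and albedo,
# rendered under four lights (all of which illuminate the patch).
true_normal = np.array([0.0, 0.6, 0.8])
true_albedo = 0.5
lights = np.array([
    [0.0, 0.0, 1.0],
    [0.6, 0.0, 0.8],
    [0.0, 0.6, 0.8],
    [-0.6, 0.0, 0.8],
])
images = np.stack(
    [np.full((2, 2), true_albedo * (light @ true_normal)) for light in lights]
)

normals, albedo = photometric_stereo(images, lights)
```

On this noise-free synthetic patch the recovered normals and albedo should match the values used to render it. Real images would also need shadowed and saturated pixels to be handled, and the normal map would still have to be integrated to obtain a surface.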